
This tutorial was written by Kumar Harsh, a software developer and devrel enthusiast who loves writing about the latest web technology. Visit Kumar's website to see more of his work!

As AI’s capabilities continue to advance, it’s playing an increasingly significant role in software development. From writing boilerplate code to debugging, explaining documentation, and even generating entire application components, AI-based tools have greatly impacted traditional app development workflows.

Conversational coding assistants have emerged as particularly valuable tools, allowing developers to use natural language to request code snippets, architectural suggestions, or environment setup instructions, or to get help understanding unfamiliar frameworks. These assistants aim to reduce context switching, boost productivity, and provide an on-demand form of pair programming.

In this three-part series, we’ll compare the most prominent players in this space: Claude, ChatGPT, Microsoft Copilot, and Gemini. This first part focuses on ChatGPT and Claude, comparing their strengths, limitations, and usability for day-to-day development tasks. Through some example prompts and the tools’ responses, you’ll learn how they handle code generation, reasoning, error explanation, integration into your workflow, and more. By the end, you should have a good idea of which tool better fits your development stack.

Claude

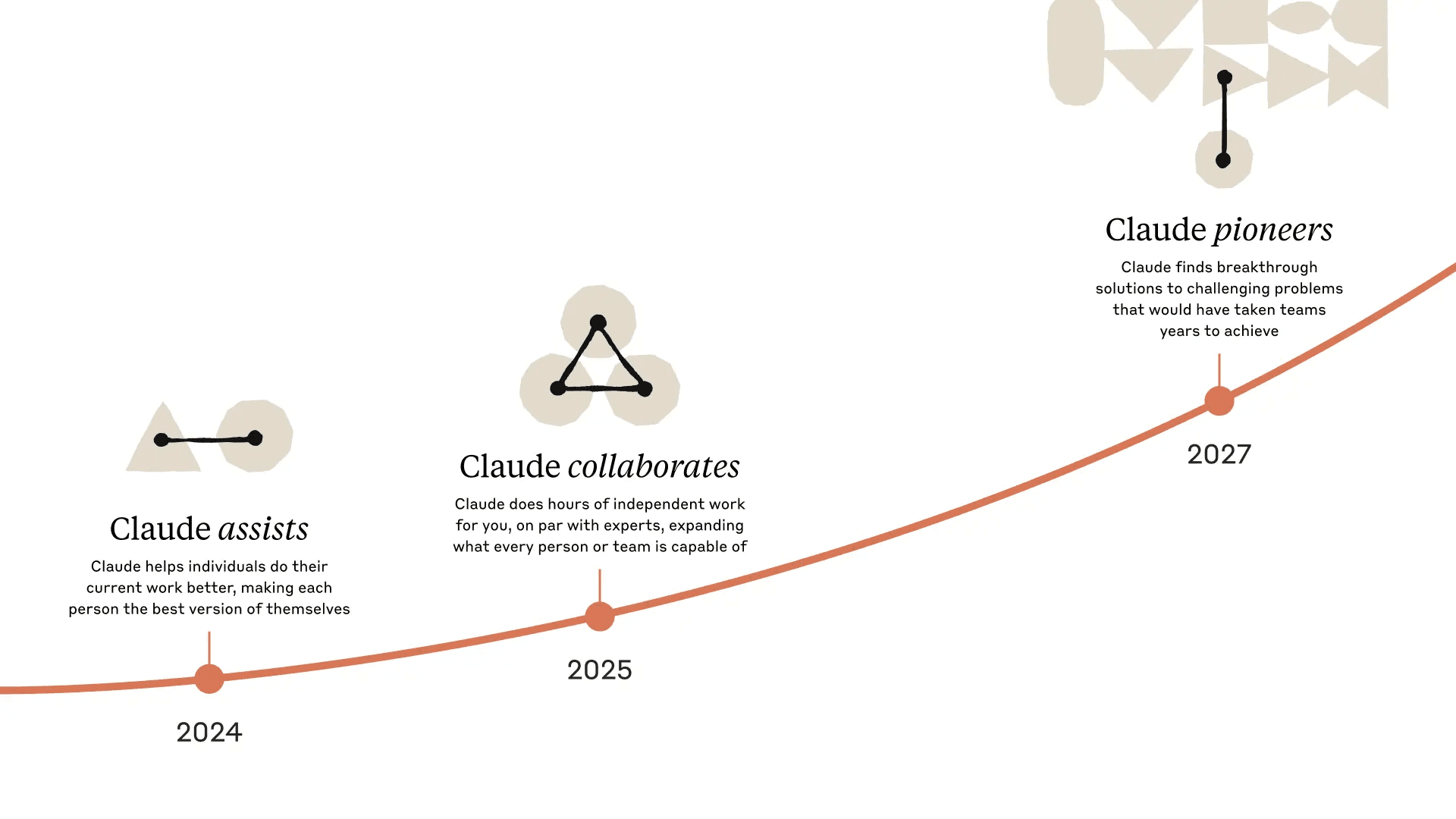

Claude is Anthropic's flagship conversational AI assistant, built to prioritize safety, high-context understanding, and transparency. Named after Claude Shannon, the father of information theory, it reflects Anthropic’s mission to align AI systems closely with human intent. Designed with developers and knowledge workers in mind, Claude offers a chat interface that excels at long-form reasoning, contextual comprehension, and clear, step-by-step responses.

Like all things AI, Claude has improved rapidly since its initial release. Claude 3.7 Sonnet achieves 62.3 percent accuracy on SWE-bench Verified, a benchmark that evaluates how well AI models solve real-world software issues, up from 49 percent for Claude 3.5 Sonnet. Claude 3.7 Sonnet also introduces several other enhancements:

Hybrid reasoning model: Claude 3.7 Sonnet introduces a hybrid reasoning approach, allowing users to choose between rapid responses and extended, step-by-step thinking. This flexibility enables the model to handle both straightforward queries and complex problem-solving tasks effectively.

Extended thinking mode: In this mode, Claude takes additional time to analyze problems in detail, plan solutions, and consider multiple perspectives before responding. This feature enhances performance in areas such as mathematics, physics, programming, and other complex domains.

Large context window: Claude 3.7 Sonnet offers a substantial context window, capable of processing up to 200,000 tokens. This capacity allows the model to handle extensive documents and codebases, maintaining coherence over long interactions. However, the free plan has undisclosed limits on context window and message sizes.

Artifacts and Projects: The Claude app includes Artifacts, for live code previews and collaborative editing, and Projects, for organizing chat history and collaborative workflows. These are very useful in app development, where context switching between multiple files and projects is common.

Claude Code: Alongside Claude 3.7 Sonnet, Anthropic has introduced Claude Code, a command line tool for agent-based programming. This tool allows developers to delegate complex programming tasks directly through their terminal, streamlining the development process.

Claude 3.7 Sonnet is also trained using Anthropic’s Constitutional AI methodology. This training approach aligns the model’s responses with a set of human-written principles, helping it produce responsible and thoughtful outputs.

As mentioned before, the hybrid reasoning capability allows Claude to switch between immediate responses and more deliberate, in-depth analysis, depending on the task’s complexity. Combined with its large context window, this adaptability makes it particularly effective for nuanced, humanlike conversations and long-term context retention, which are essential for tasks such as debugging large codebases or conducting in-depth research.

ChatGPT

ChatGPT is OpenAI’s flagship conversational AI assistant, widely used for tasks ranging from software development and research to creative writing and data analysis. Since its launch in November 2022, it has become almost a synonym for AI, much as Google is for search, and has evolved into a versatile tool. ChatGPT gives access to several models from OpenAI’s lineup, with capabilities spanning text, voice, and vision.

As of May 2025, the default model in ChatGPT for free and Plus users is GPT-4o (“o” for “omni”), OpenAI’s flagship multimodal model. Key features of this model include the following:

Enhanced performance: GPT-4o offers faster response times and improved performance over its predecessors, making it more efficient for various applications.

Voice mode: The advanced voice mode allows for humanlike spoken conversations, with response times as low as 232 milliseconds.

Image generation: GPT-4o includes native image-generation capabilities, succeeding DALL·E 3, and can create realistic images based on textual prompts.

Accessibility: GPT-4o is available to all ChatGPT users, with free users having access within usage limits and Plus subscribers enjoying higher usage caps.

GPT-4o supports a context window of up to 128,000 tokens. However, the context window available in ChatGPT depends on your plan: 8,000 tokens on the free plan versus 32,000 and 128,000 tokens on the paid plans. Some users also report an effective limit of about 8,000 tokens even when using the API with a paid account.

ChatGPT also offers GPT-4.5, a transformer-based model trained primarily with unsupervised learning and refined with supervised fine-tuning and reinforcement learning from human feedback (RLHF). This training helps it recognize patterns more effectively, draw connections, and generate creative insights without requiring elaborate prompting. Still, as with any AI chatbot, its effectiveness depends heavily on how clearly you write your prompts.

To provide up-to-date and context-rich answers, ChatGPT can use retrieval-augmented generation (RAG). This technique injects relevant external content into the model’s prompt at runtime, letting it draw on real-time information beyond its static training data. ChatGPT performs well across coding, writing, and data analysis, and its natural language capabilities make interactions feel fluid and humanlike.
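To make the RAG idea concrete, here’s a deliberately tiny sketch in Python. It is not how ChatGPT implements retrieval (OpenAI doesn’t publish that). It assumes an in-memory list of documents and scores them by simple word overlap, where a real system would typically use a search engine or vector embeddings. The tokenize, retrieve, and build_prompt names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the query.

    Word overlap stands in for the embedding search a production system would use.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    # Keep only documents with some overlap, highest score first.
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_prompt(query: str, documents: list[str]) -> str:
    """Inject the retrieved passages into the prompt sent to the model at runtime."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using the context below.\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Flask is a lightweight Python web framework.",
    "The hmac module in Python implements keyed hashing.",
    "React components re-render when their state changes.",
]
print(build_prompt("Which lightweight web framework is written in Python?", docs))
```

The model never "learns" the documents; it simply sees the most relevant ones pasted into its prompt, which is why RAG can surface information newer than the training data.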

General overview of the tools

Before getting into the nitty-gritty of software development, let’s quickly compare the two tools’ general chatbot skills:

Reasoning, problem-solving, and analytical skills: Claude 3.7 Sonnet outperforms ChatGPT-4o in structured reasoning and complex problem-solving, particularly in tasks requiring precision and logical analysis. Its hybrid reasoning model allows for both quick responses and extended, step-by-step thinking, making it particularly effective for professional, analytical, and detail-oriented tasks such as analyzing complex if/then or case-based conditions or maintaining consistent reasoning across multiple steps.

Document analysis and summarization: Claude 3.7 Sonnet demonstrates superior capabilities in analyzing and summarizing extensive documents, such as legal contracts and historical archives, thanks to its large context window and advanced natural language processing.

Emotional intelligence and conversational style: ChatGPT-4o offers a more engaging and emotionally expressive conversational style, capable of simulating humanlike interactions across text, voice, and vision. However, it’s worth noting that an April 2025 update made its responses overly supportive and sometimes disingenuous, prompting OpenAI to roll back that update to maintain authenticity. So its conversational style has been somewhat iffy lately.

Real-time data and web access: Both options support real-time web access. ChatGPT provides this feature across its platforms. Similarly, Claude has introduced web search capabilities, allowing it to access the latest events and information to boost accuracy in tasks benefiting from recent data.

Cost, access, and plans: ChatGPT is available for free with limited usage and offers a Plus plan at $20/month for enhanced features and higher usage limits. Claude also offers a free tier (and is available through various third-party integrations), and Anthropic’s Pro plan costs $20/month for additional capabilities.

While they share similar pricing plans and real-time web access capabilities, each tool has distinct strengths: Claude excels at complex problem-solving, while ChatGPT offers more engaging conversations. But what do these strengths mean for software development tasks?

Code generation quality

Let’s start by comparing the quality of the code generated by the two. To accomplish this, we will use two coding prompts, one for a frontend component and another for a backend script. The prompts will contain requirements but also be purposefully vague in some aspects for us to understand how (or if) the models fill in the gaps on their own.

Frontend code generation

For the first test, let’s try out a simple, age-old task of creating a React component. Here’s the prompt that will be used for this test:

Create a React component that displays a list of blog posts fetched from an API. Each post should include a title, author, and published date. Format the date nicely and show a loading state while data is being fetched.

As you can see, the prompt is quite succinct but expects the final result to:

query data externally (or at least leave an easy-to-use placeholder for that);

include some essential properties (title, author, and published date) while leaving it up to the model to add other properties, like a header image, categories, and so on;

format one of the properties nicely, which is quite vague (but a human could interpret some meaning from it); and

show a loading state, which would involve logic for conditional rendering and UI adjustments to accommodate an additional subcomponent (basically, the other set of elements that you will only see when the component is in the loading state).

Let’s take a look at how ChatGPT’s free version responds to this prompt:

Let’s analyze the code returned by the prompt.

First impression: you cannot plug and play this component in your React app. That’s because it uses an imaginary backend URL. If you already have a backend ready to use at this point, you can replace the value of APP_URI with the URL to your backend and then test this code. In other cases, you will need to redo the fetchPosts function to use a set of dummy values as posts while you’re developing the component. Because of this, it wouldn’t be fair to call it an “easy-to-use” placeholder for the external data source.

Next, all the essential properties are included, and the date has been formatted adequately using the JS Date object, which is a good practice. Finally, the loading state has been handled appropriately as well.

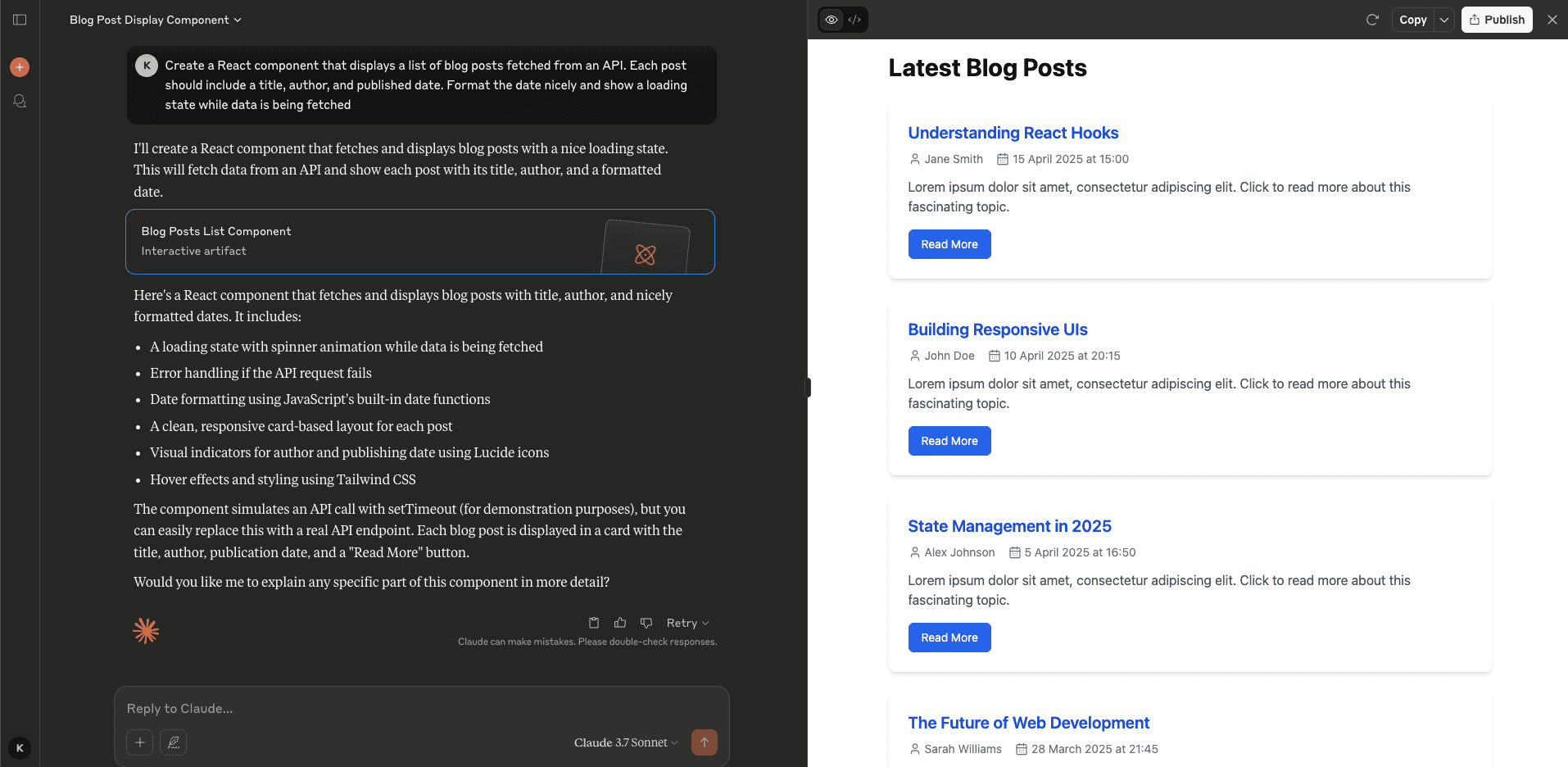

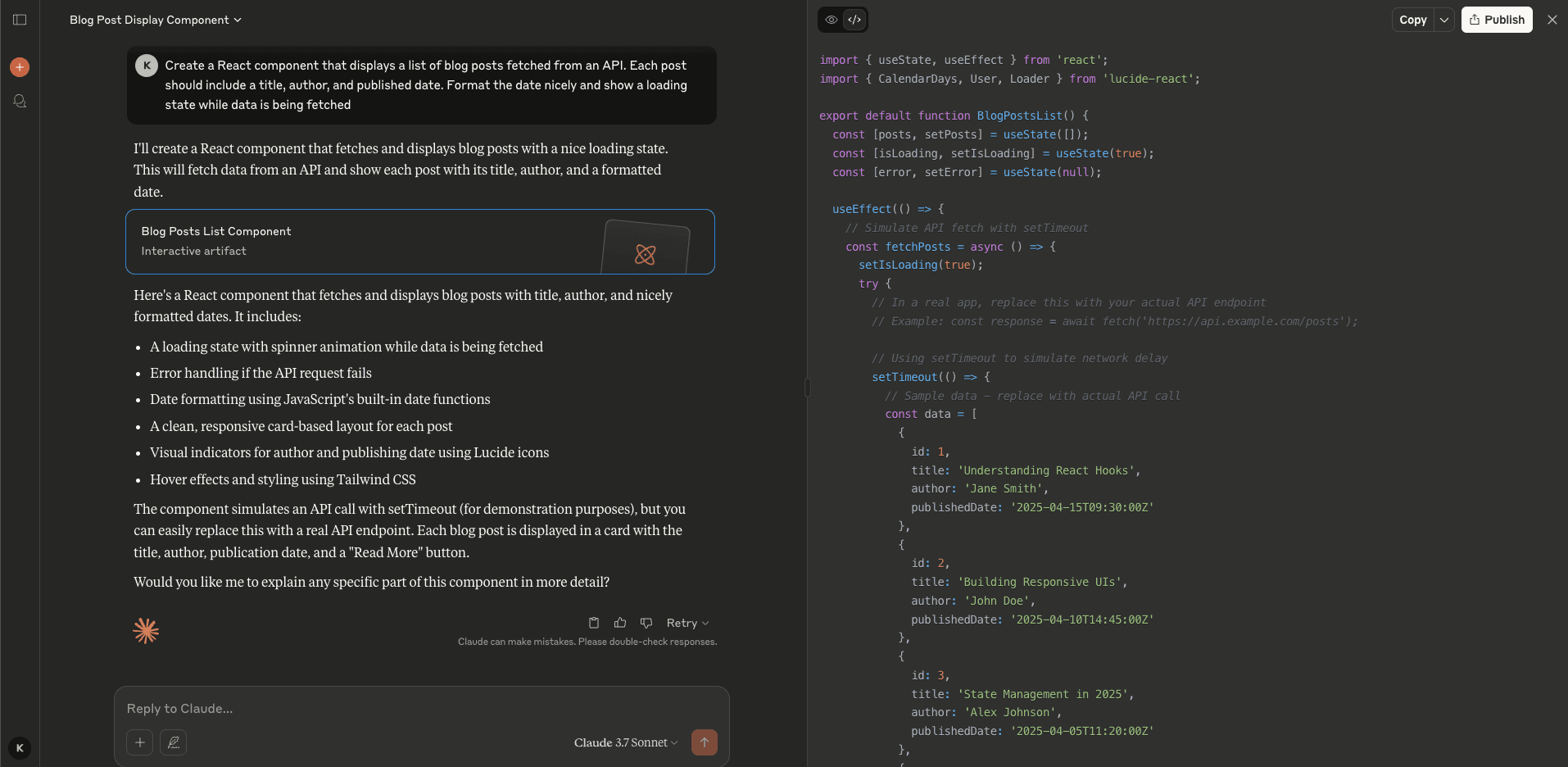

Now, let’s take a look at how Claude’s free version handles the same task. Here’s what Claude responds with:

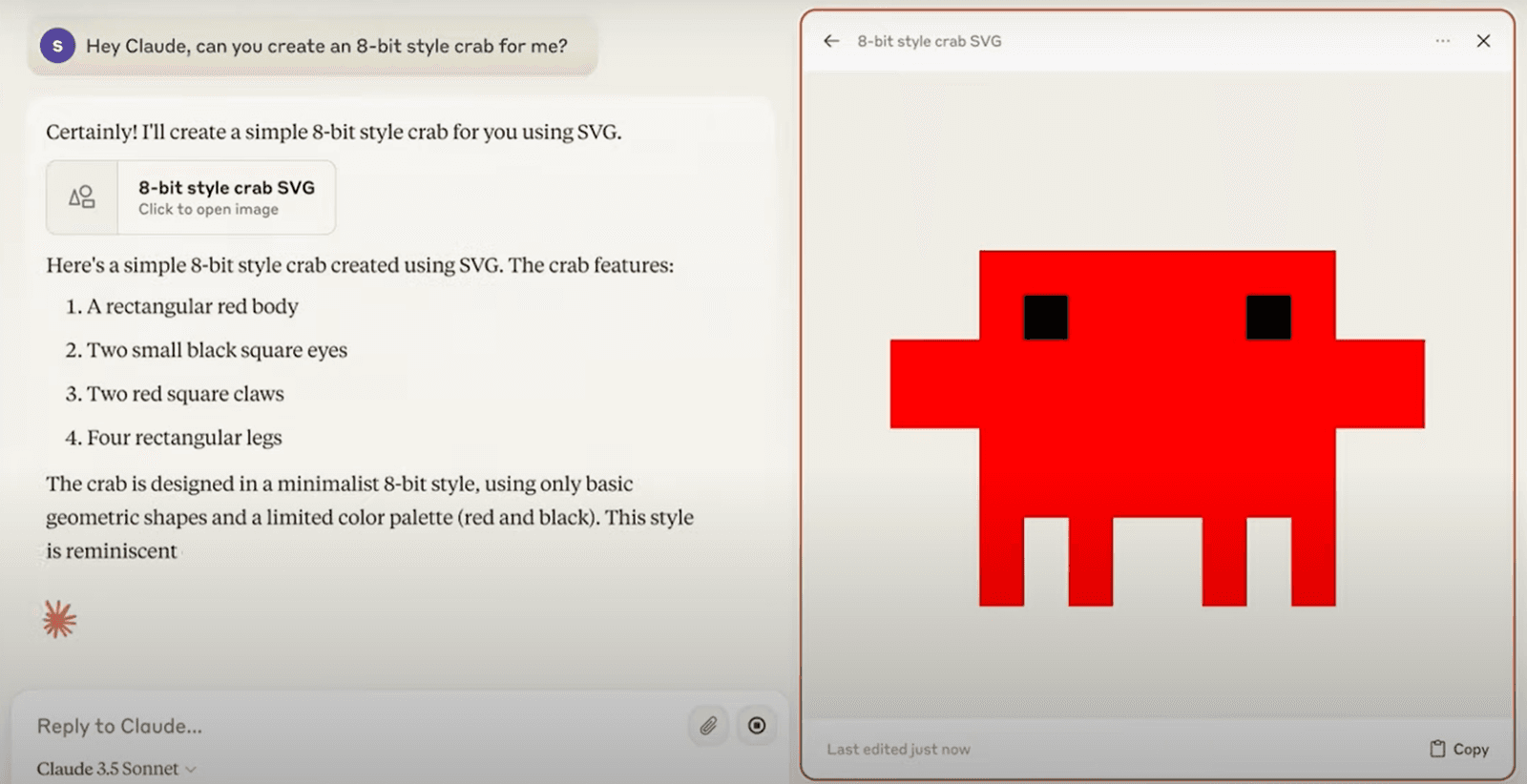

For React (and pretty much all web-frontend-related tasks), you get a built-in preview of the generated code in the Claude UI. This significantly improves the chat-driven development experience: you can send follow-up prompts to refine the app and watch the preview update almost immediately, instead of pasting the code into your app and waiting for it to hot reload.

Here’s what the code looks like:

Claude implements all the requirements (essential fields, a formatted date field using the JS Date object, and even an emulated external data source that is easy to work with during frontend development). It also does a few extra things on its own:

UI design using Tailwind CSS, following a popularly used layout style (cards)

Icons from the open source project Lucide React for the author and publishing date fields

Basic animations such as spin effects on the loading icon and hover effects on the cards

For someone coding an app from scratch, this is an excellent starting point. For someone who already uses a different styling system and maybe has more style guidelines to adhere to (such as no icons or a different color scheme), a few more prompts should do the trick.

While Claude seems to offer a better experience for frontend development, let’s see how the two compare on a backend task.

Backend code generation

Here’s the prompt for the backend task:

Write a secure Flask API endpoint in Python that accepts a POST request with a JSON payload, including a user’s name and email, validates the input, and returns a confirmation message

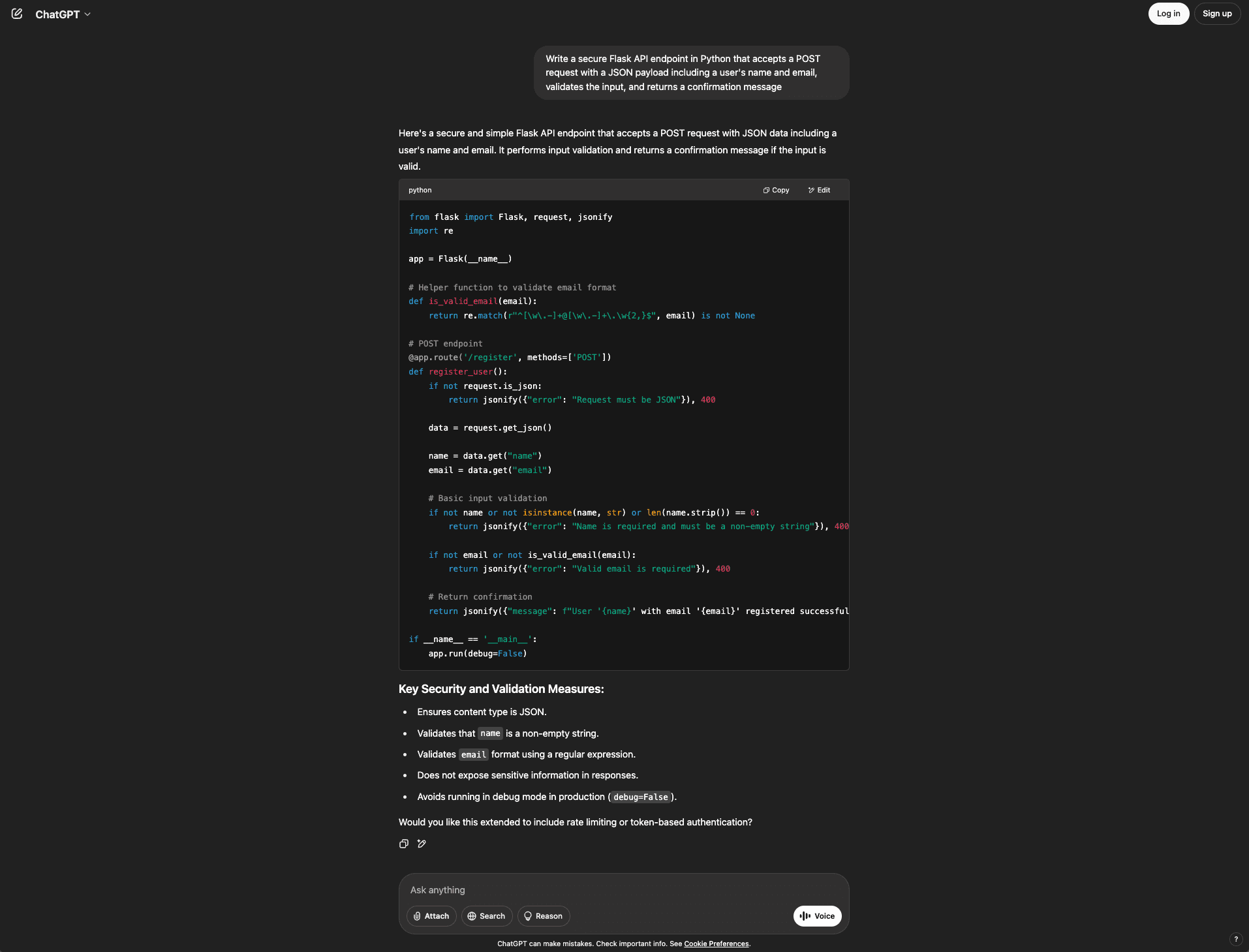

Here’s what ChatGPT’s free version responds with:

The code snippet works, and the response is quite concise, meeting all the requirements laid out in the prompt:

Handle POST request

Run null checks on data

Validate email
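For reference, the validation step the prompt asks for can live in a plain function that a Flask route calls after reading the body with request.get_json(silent=True). This is a minimal sketch, not either tool’s output: the validate_signup name, the 100-character name limit, and the deliberately simple email pattern are assumptions (a production app might use a dedicated validation library instead).

```python
import re

# Deliberately simple pattern: something@something.tld with no spaces. Real email
# validation is far more involved; this only rejects obviously malformed input.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MAX_NAME_LENGTH = 100  # assumed limit, not part of the original prompt


def validate_signup(payload: object) -> tuple[dict, list[str]]:
    """Return (cleaned_data, errors) for a JSON payload with 'name' and 'email'."""
    if not isinstance(payload, dict):
        return {}, ["Request body must be a JSON object."]

    errors: list[str] = []
    name = payload.get("name")
    email = payload.get("email")

    # Null and type checks first, then content checks.
    if not isinstance(name, str) or not name.strip():
        errors.append("'name' is required.")
    elif len(name.strip()) > MAX_NAME_LENGTH:
        errors.append(f"'name' must be at most {MAX_NAME_LENGTH} characters.")

    if not isinstance(email, str) or not EMAIL_PATTERN.match(email.strip()):
        errors.append("'email' must be a valid email address.")

    if errors:
        return {}, errors
    return {"name": name.strip(), "email": email.strip()}, []
```

In a Flask view, a non-empty errors list would typically be returned with a 400 status; otherwise, the confirmation message is built from the cleaned data. Keeping the checks framework-free also makes them easy to unit test.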

Such a response can be great for quick tasks like adding an endpoint to an internal app. However, no real security measures have been implemented in this code snippet. Another minor detail: ChatGPT struggles with syntax highlighting in long code snippets. You can already see this in the screenshot above, in the line below the # Return confirmation comment, where the formatted string is highlighted incorrectly. Now, let’s see if Claude does it differently.

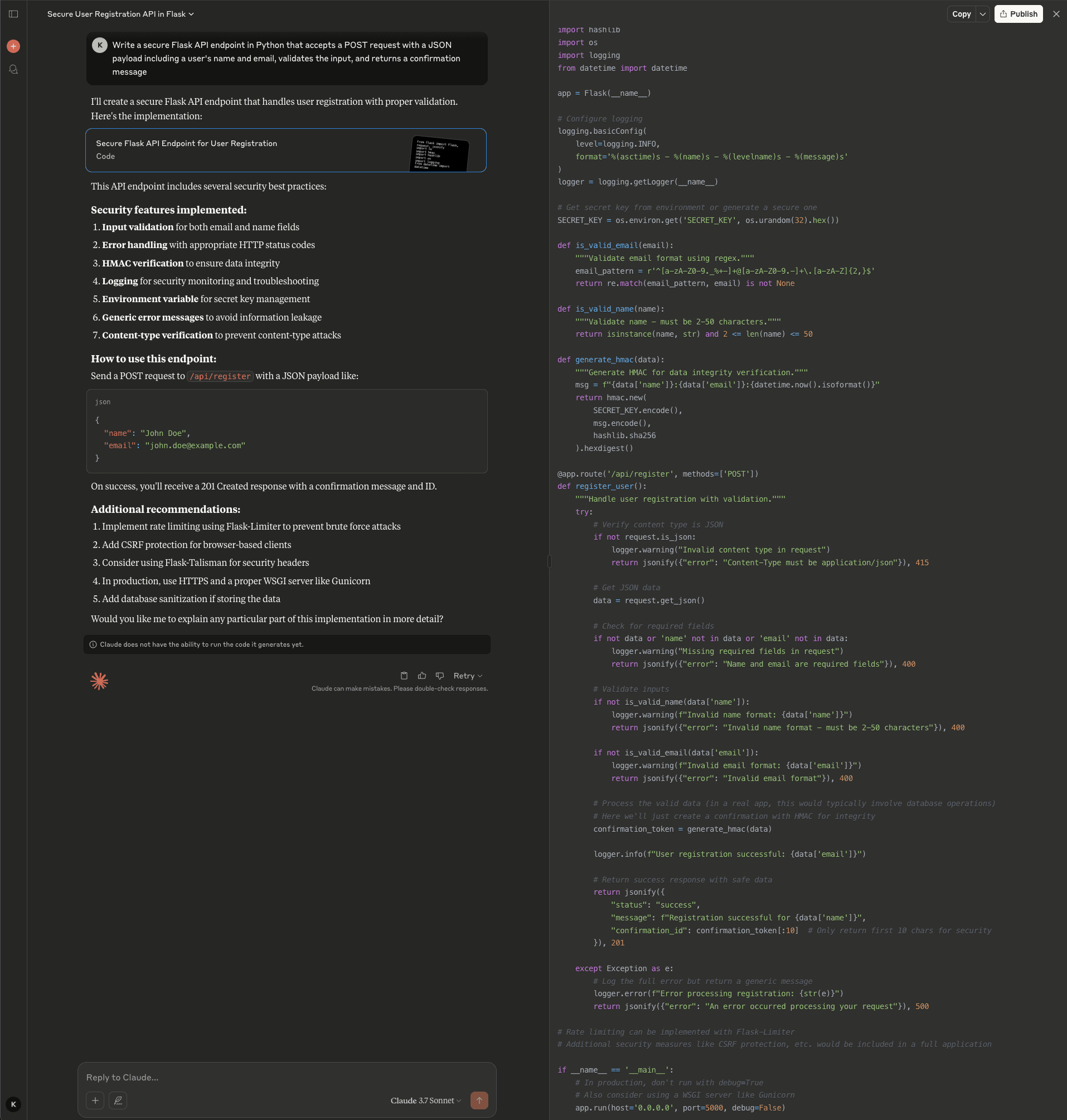

Here’s what Claude’s free version responds with:

Once again, the response is structured better due to the use of Claude Artifacts. Also, the code highlighting seems to be much better than ChatGPT’s. The code snippet seems to work and covers everything laid out in the prompt.

However, on closer inspection, you can see that Claude tried to fulfill the “secure Flask API” requirement by implementing data integrity verification with HMAC. This doesn’t make sense for the use case: all of the data is sent by the frontend in a single request for storage in the backend, and any secret key a browser frontend uses to sign that request is visible to its users, so the signature proves little. HMAC-based integrity checks make sense when the server itself issues data and later needs to verify that it comes back unmodified (for instance, when issuing short-lived, signed URLs).
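To illustrate where HMAC does fit, here’s a minimal sketch of a short-lived signed URL using Python’s standard hmac and hashlib modules. The server holds the secret, signs the path plus an expiry timestamp, and later verifies what comes back, so the key never leaves the backend. The SECRET_KEY value, the /download/... path, and the expires and sig parameter names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

SECRET_KEY = b"replace-with-a-random-server-side-secret"  # hypothetical; never sent to clients


def _signature(path: str, expires: int) -> str:
    """HMAC-SHA256 over the path and expiry timestamp, hex-encoded."""
    message = f"{path}:{expires}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def sign_url(path: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a URL for path that stays valid for ttl_seconds."""
    expires = int((time.time() if now is None else now) + ttl_seconds)
    query = urlencode({"expires": expires, "sig": _signature(path, expires)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Check that the URL was issued by this server, is unmodified, and hasn't expired."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False  # missing or malformed parameters
    if (time.time() if now is None else now) > expires:
        return False  # link has expired
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(sig, _signature(parts.path, expires))
```

Changing the path or the expiry invalidates the signature, and only the server can mint a new one. That server-issues, server-verifies round trip is exactly what the signup endpoint lacks.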

In this case, implementing CORS or rate limiting to avoid API abuse would have made more sense.
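In a real Flask app, rate limiting is usually delegated to an extension such as Flask-Limiter or to a reverse proxy, but the core idea fits in a few lines. Below is a minimal in-memory sliding-window limiter in plain Python, keyed by a client identifier such as an IP address. It’s a sketch under stated assumptions: it runs in a single process, and the max_requests and window_seconds defaults are arbitrary. Multiple workers would need shared storage instead.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests: int = 5, window_seconds: float = 60.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps each client id to the timestamps of its recent requests, oldest first.
        self._hits = defaultdict(deque)

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        """Record a request and return True if it is within the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Forget requests that have slid out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # a Flask view would respond with HTTP 429 here
        hits.append(now)
        return True
```

A sliding window avoids the burst a fixed per-minute counter allows at the boundary between two minutes, at the cost of storing one timestamp per recent request.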

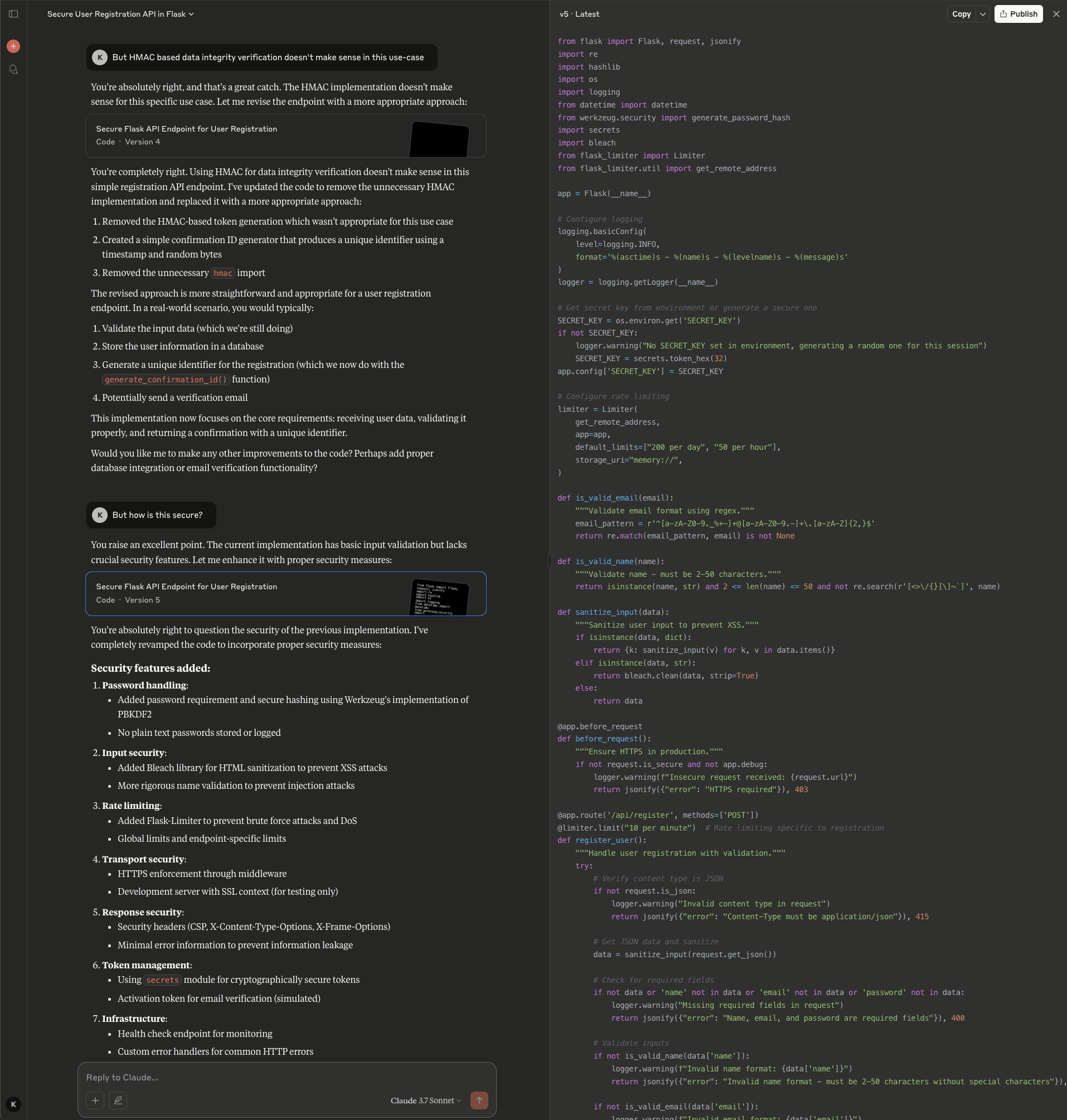

If you prompt ChatGPT to make the endpoint secure, it implements rate limiting. In contrast, when you highlight the issues with Claude’s HMAC implementation, it awkwardly updates the code to issue unique confirmation IDs for each new user registration. Upon being given a second nudge, it implements a bunch of security measures:

Adds a password field

Adds input sanitization

Implements rate limiting

Enforces HTTPS

Adds security headers
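Two of those measures are easy to show without any framework. The sketch below escapes user-supplied text with Python’s standard html.escape before echoing it back, and defines a set of common security headers (including Strict-Transport-Security, which tells browsers to use HTTPS only) that a Flask after_request hook could attach to every response. The header values and helper names are illustrative assumptions, not what Claude generated.

```python
import html

# Commonly recommended security headers; the values are illustrative defaults.
SECURITY_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",  # HTTPS only
    "X-Content-Type-Options": "nosniff",  # disable MIME-type sniffing
    "X-Frame-Options": "DENY",  # disallow framing (clickjacking)
    "Content-Security-Policy": "default-src 'none'; frame-ancestors 'none'",  # JSON API serves no active content
}


def apply_security_headers(headers: dict) -> dict:
    """Return a copy of the response headers with the security headers added."""
    return {**headers, **SECURITY_HEADERS}


def confirmation_message(name: str) -> str:
    """Escape the user-supplied name so it can't inject markup if rendered as HTML."""
    return f"Thanks for signing up, {html.escape(name)}!"
```

Note that escaping on output is the more robust form of "sanitization": the stored name stays exactly as the user typed it, and it becomes harmless wherever it is rendered.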

To sum up, while both tools can generate working and adequate code, Claude beats ChatGPT by a large margin when it comes to developer experience and technical details like best practices. Of course, the response will only be as good as your prompting skills, and you need to be careful to double-check everything, as both tools can hallucinate quite confidently.

Troubleshooting (bug fixing) capabilities

Debugging is one of the hardest tasks to benchmark in isolation. Real-world debugging rarely involves a single faulty line. It requires an understanding of project context, architecture, business logic, and edge cases. So it’s difficult to evaluate these AI tools meaningfully without embedding them in a real workflow. This also makes a strong case against using chatbots for debugging: It’s not easy to provide a complete project context to a chat-based code assistant when you’re working on large projects.

The analysis in this section is informed by prior experience and widely shared public sentiment, including popular discussions such as this Reddit thread, which highlights Claude’s advantage in step-by-step reasoning.

Debugging

When it comes to debugging, Claude tends to provide thorough, step-by-step debugging support. It not only identifies errors but also explains root causes and suggests structurally sound fixes, often with helpful comments and design considerations. This makes it a better choice when you’re trying to understand why something broke.

ChatGPT is more direct and concise, often spotting basic bugs and producing a clean fix quickly. For simpler issues or when you’re short on time, ChatGPT performs well. However, it may need extra prompting to dive deeper into architectural or semantic bugs.

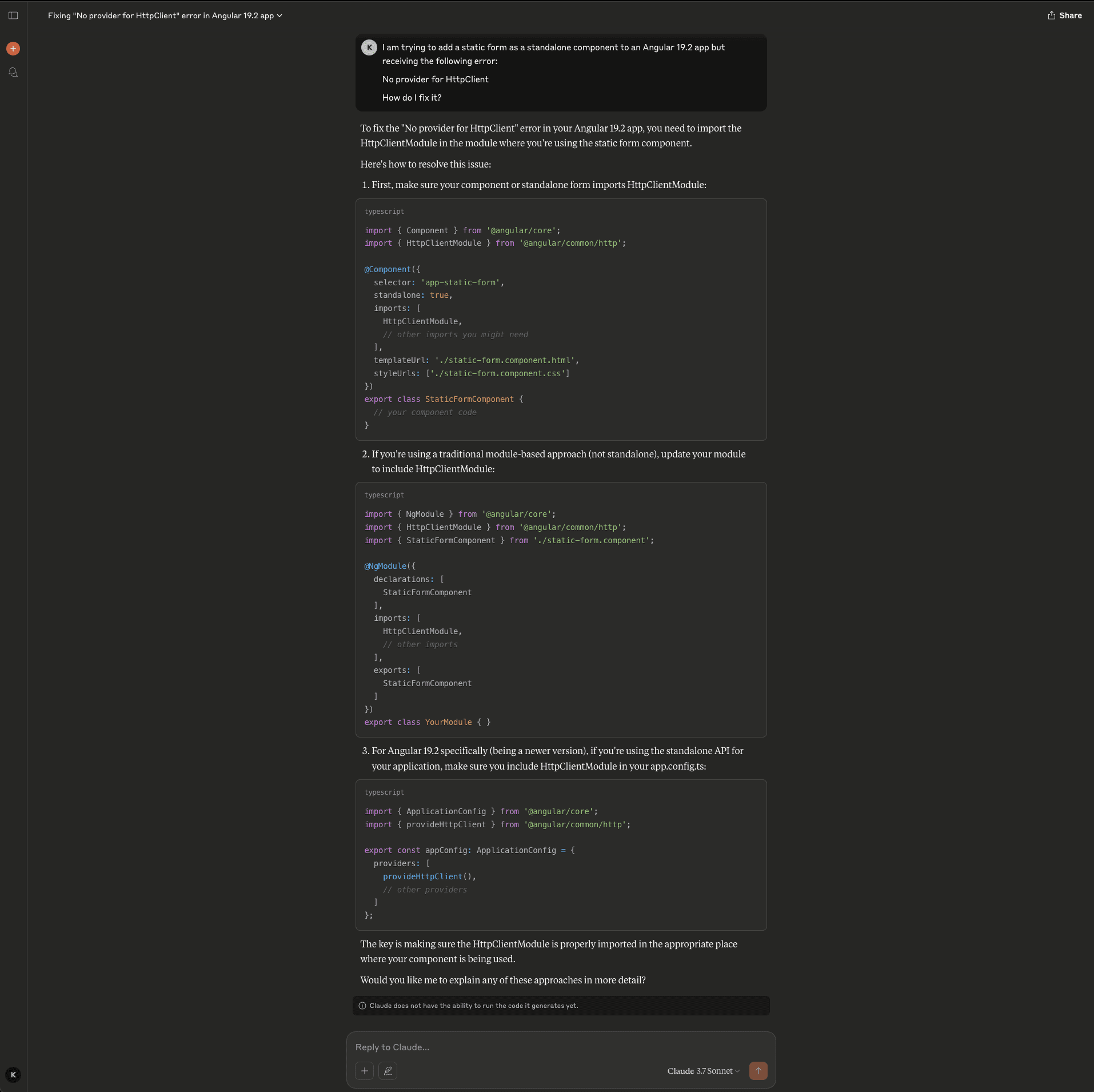

However, it can’t be emphasized enough: be careful when debugging with any AI tool, as it can overlook issues or confidently suggest wrong solutions. For example, both Claude and ChatGPT recommend a deprecated solution to the following Angular issue:

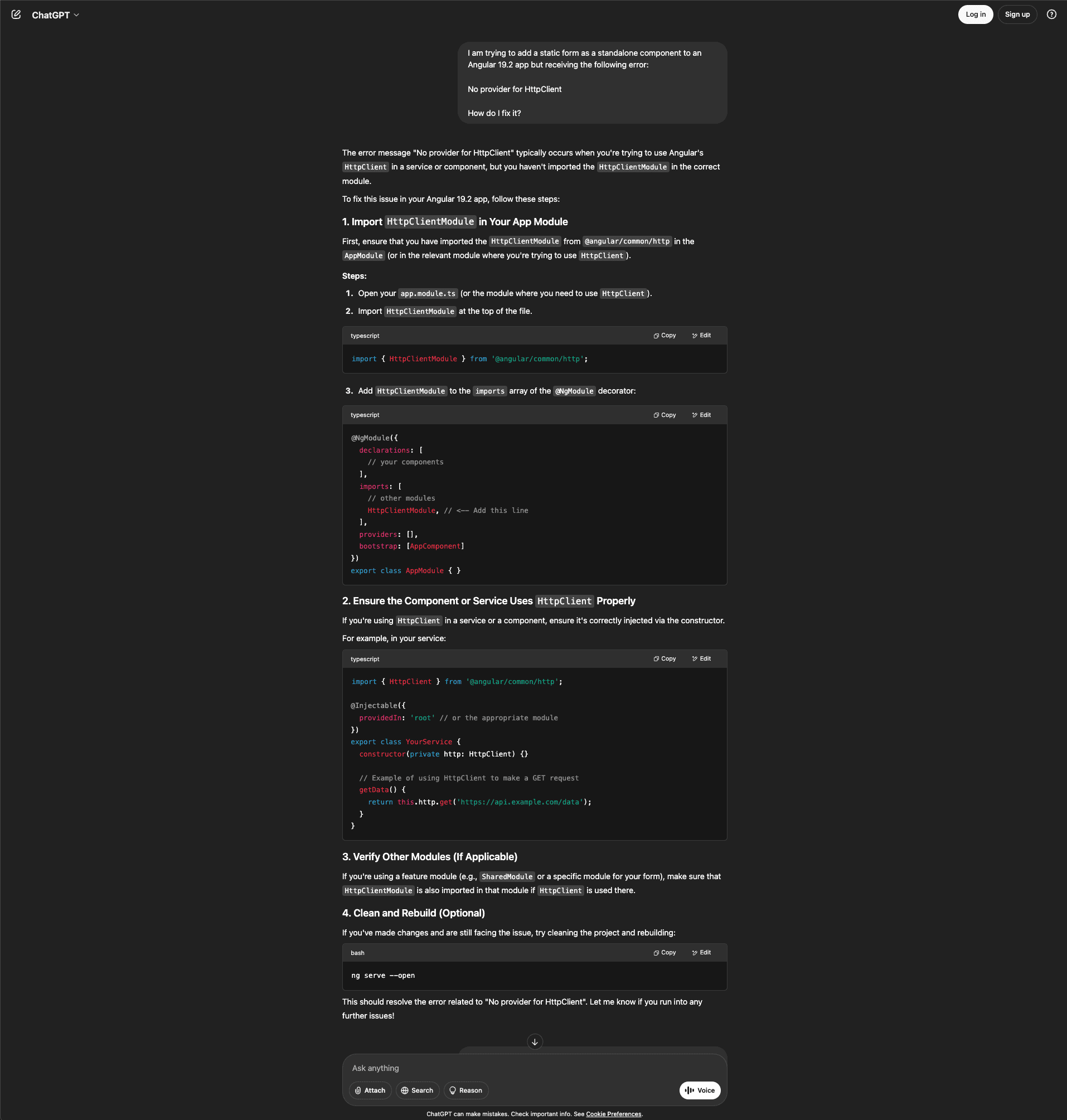

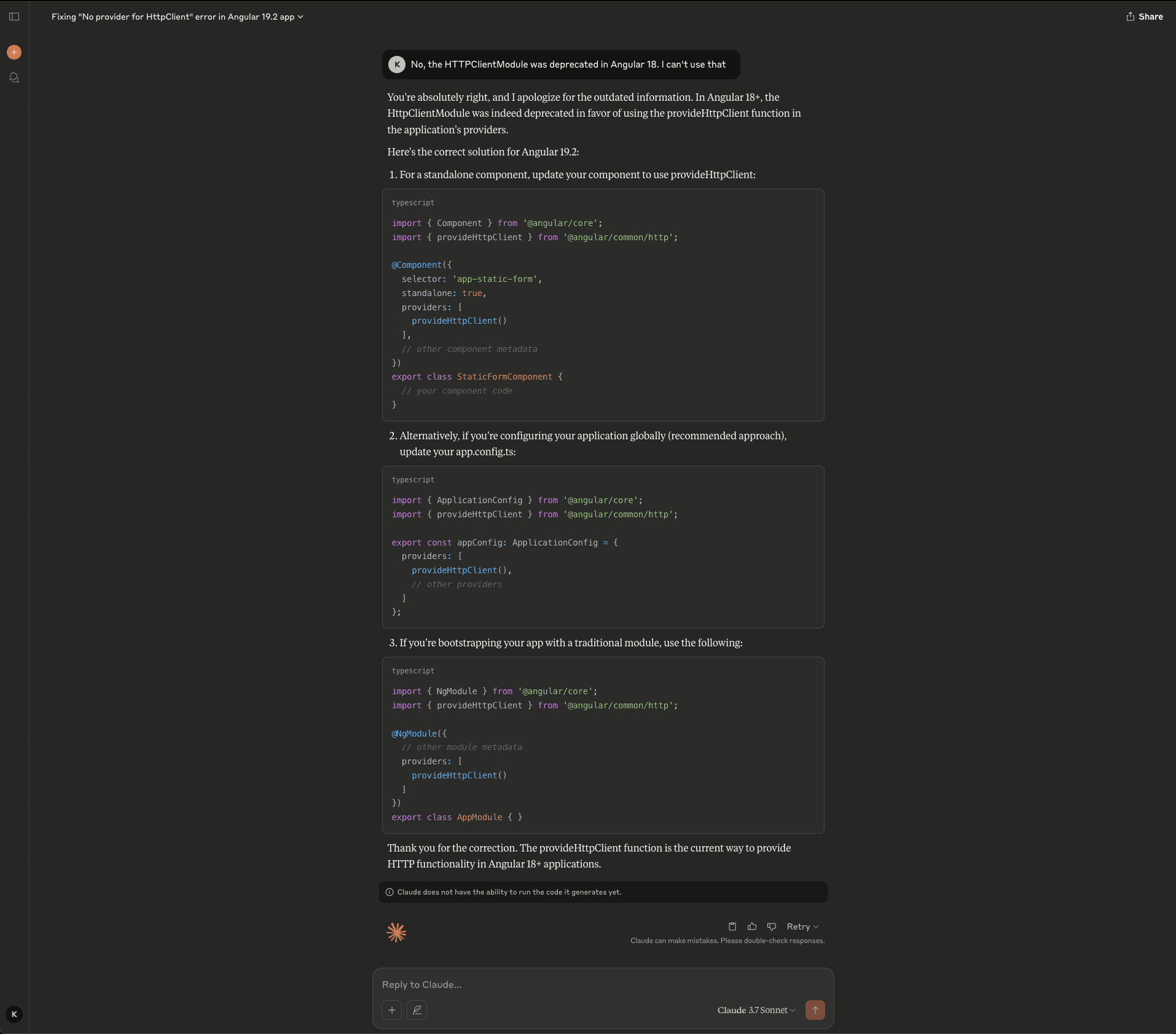

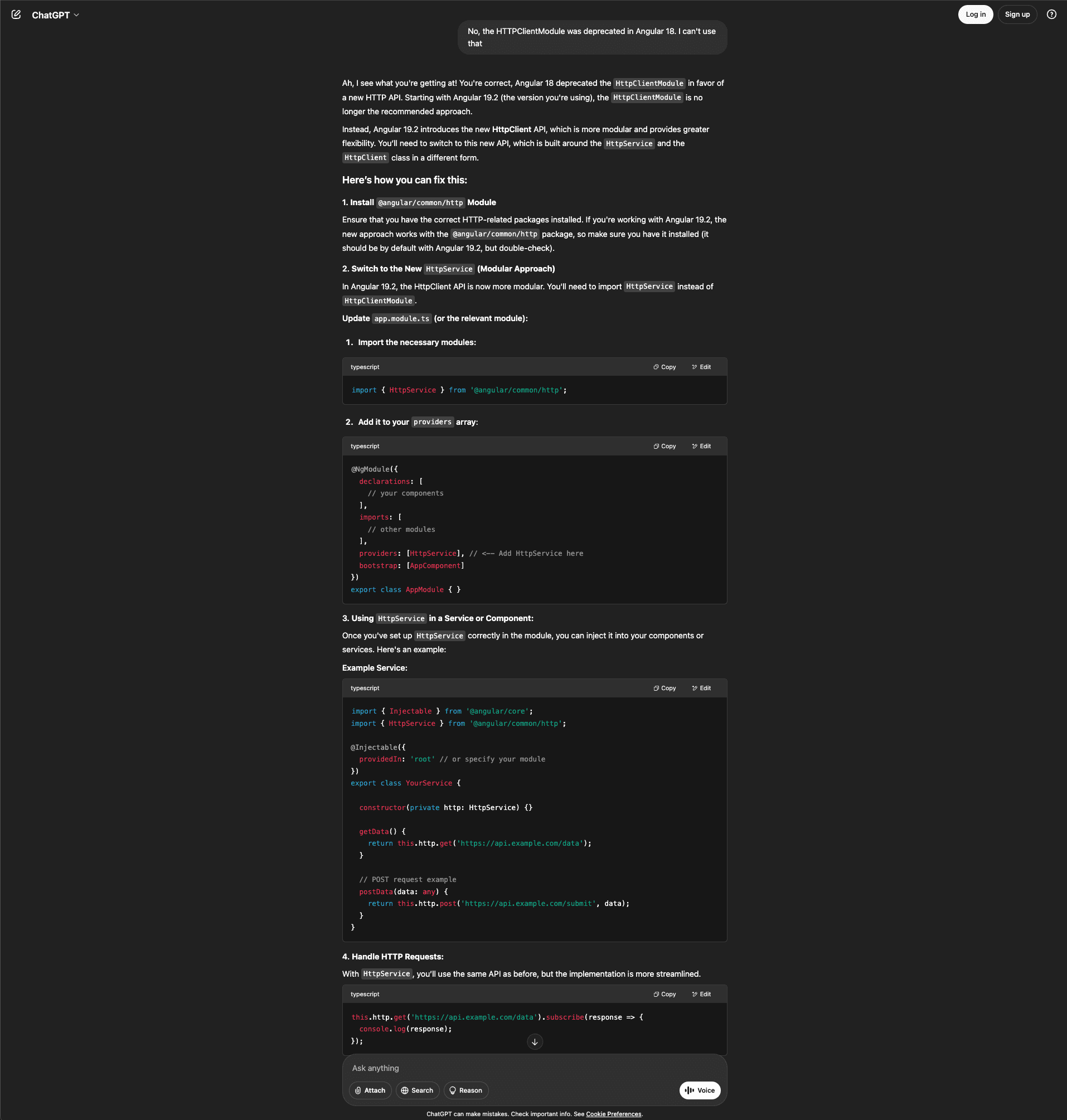

I am trying to add a static form as a standalone component to an Angular 19.2 app but receiving the following error: No provider for HttpClient How do I fix it?

Here’s what Claude responds with:

And here’s what ChatGPT responds with:

Interestingly, when you call out the deprecated solution, Claude is quickly able to figure out the right solution (which, in this case, is to use provideHttpClient), while ChatGPT presents another deprecated solution.

Here’s Claude’s answer:

And here’s ChatGPT’s:

It’s likely that Angular 19.2 was released after GPT-4o’s training cutoff, and since this free-version answer didn’t draw on a web search, it confidently presents outdated answers.

Test generation

When it comes to test generation, both Claude and ChatGPT perform impressively for small, self-contained functions. They generate unit tests quickly, structure them well, and cover common happy and edge cases with little friction.
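As a point of reference, this is the kind of happy-path-plus-edge-case unit test described here. It was written by hand with Python’s built-in unittest module for a small hypothetical parse_page_size helper and is not taken from either tool’s output.

```python
import unittest


def parse_page_size(value, default=10, maximum=100):
    """Parse a page_size query value, falling back to default and capping at maximum."""
    if value is None or value == "":
        return default
    size = int(value)  # raises ValueError for non-numeric input
    if size < 1:
        raise ValueError("page_size must be positive")
    return min(size, maximum)


class TestParsePageSize(unittest.TestCase):
    def test_valid_value(self):  # happy path
        self.assertEqual(parse_page_size("25"), 25)

    def test_missing_value_uses_default(self):
        self.assertEqual(parse_page_size(None), 10)
        self.assertEqual(parse_page_size(""), 10)

    def test_value_is_capped(self):
        self.assertEqual(parse_page_size("500"), 100)

    def test_invalid_values_raise(self):
        for bad in ("abc", "0", "-3"):
            with self.subTest(value=bad):
                with self.assertRaises(ValueError):
                    parse_page_size(bad)
```

Saved as a file whose name starts with test (for example, test_pagination.py), this runs with python -m unittest. Tests like these are easy for both tools because everything they need is in one small function.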

However, as soon as the test scope increases (say, when writing tests for a multifile Android project written in Kotlin), Claude has a clear edge. It offers broader coverage, a stronger grasp of framework-specific conventions (like JUnit, MockK, or Espresso), and better test-file organization. Because of its larger context window, Claude is more likely to reference earlier files or setup code when generating integration tests or mocks across modules.

Contextual awareness

Contextual understanding helps when you’re working with existing codebases or iterating on complex tasks over multiple turns. As seen in the previous examples, when modifying a React app across files or updating code through a sequence of prompts, both Claude 3.7 Sonnet and ChatGPT-4o demonstrate strong contextual reasoning capabilities.

When it comes to modifying an existing codebase, both tools can follow the project structure, reference variables across files, and update logic coherently. That said, ChatGPT occasionally stumbles on formatting when generating or appending code snippets. These formatting glitches can lead to small but critical typos or indentation errors that break execution unless caught by the developer.

In multiturn interactions, performance is closely tied to context window size. Claude 3.7 Sonnet’s 200K-token context window allows it to track and recall large amounts of historical information, spanning full files, architectural constraints, or extended conversation threads. ChatGPT-4o, while generally strong at maintaining continuity over shorter sessions, has a smaller effective window and may start to lose precision or forget earlier turns in long, detailed exchanges.
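Here’s one way a smaller window shows up in practice: once a conversation exceeds the token budget, something has to be dropped. The sketch below shows one common strategy, which is to keep the system message plus the most recent turns that fit. Neither OpenAI nor Anthropic is assumed here to use this exact strategy, and the sketch relies on a rough four-characters-per-token estimate instead of a real tokenizer. The function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about four characters per token for English text."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the first (system) message plus as many recent messages as fit in budget.

    Assumes messages[0] is the system message and every message has a 'content' key.
    """
    system, turns = messages[0], messages[1:]
    remaining = budget - estimate_tokens(system["content"])
    kept: list[dict] = []
    # Walk backward from the newest turn; stop at the first one that doesn't fit.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if cost > remaining:
            break
        kept.append(message)
        remaining -= cost
    return [system] + list(reversed(kept))
```

With a strategy like this, the earliest turns (often where a project’s constraints were first explained) are the first to disappear, which matches the "forgetting" behavior described above.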

Integration possibilities

Any dev tool is most powerful when it integrates smoothly into a developer’s workflow. Both Claude and ChatGPT offer robust integration options, but their approaches reflect their design philosophies. Claude leans into collaborative structure, while ChatGPT emphasizes breadth and extensibility.

IDE integrations

Claude offers official and community-built extensions for editors like VS Code, allowing developers to query and modify code directly from their editor. Its standout features (Artifacts and Projects) are uniquely designed for collaborative development. As you’ve seen above, Artifacts provide a live, editable canvas within the chat. Projects group conversations and assets into task-specific threads, making Claude feel more like a long-term pair programmer.

ChatGPT, meanwhile, has broader IDE reach, with integrations across the GitHub Copilot, JetBrains, and VS Code ecosystems. These integrations are deeply embedded, offering inline completions, doc references, and context-aware suggestions that adapt as you code. However, for coding tasks, the quality of its responses still lags somewhat behind Claude’s.

API and external service connections

Claude provides a stable and well-documented API, ideal for embedding AI into apps, chatbots, or backend services. It’s especially helpful for generating docs or assisting with schema generation and code commenting.

ChatGPT offers a more extensive API and integration ecosystem (including custom GPTs), with support for function calling, web browsing, and even real-time data retrieval through external tools. From scheduling meetings to querying databases, ChatGPT is designed to operate like a general-purpose AI layer across your entire stack.
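Function calling works by having the model return the name of a function plus JSON-encoded arguments, which your own code then executes before sending the result back. The dispatcher below is a minimal sketch of that application-side step. The tool_call dictionary roughly mirrors the name and arguments fields OpenAI’s API uses for function calls, but the get_open_tickets function and its fake data are hypothetical, and no API request is made.

```python
import json


def get_open_tickets(project: str) -> int:
    """Hypothetical local function the model may ask to call (stands in for a DB query)."""
    fake_db = {"web": 3, "api": 7}
    return fake_db.get(project, 0)


# Only functions registered here can be invoked, whatever name the model returns.
TOOLS = {"get_open_tickets": get_open_tickets}


def dispatch(tool_call: dict) -> str:
    """Run the requested function and return a JSON string to send back to the model."""
    name = tool_call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown function: {name}"})
    # The model supplies arguments as a JSON-encoded string; decode before calling.
    arguments = json.loads(tool_call["arguments"])
    result = TOOLS[name](**arguments)
    return json.dumps({"result": result})


# A call shaped roughly as a model might return it (hypothetical values).
print(dispatch({"name": "get_open_tickets", "arguments": '{"project": "api"}'}))
```

The explicit registry is the important design choice: the model only ever requests actions, and your application decides which ones actually run.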

When it comes to integration, ChatGPT has one of the strongest ecosystems among AI chatbots and APIs.

Business applications

In startup environments where speed and agility are key, both Claude 3.7 Sonnet and ChatGPT-4o offer significant advantages. Claude is especially effective for rapid prototyping and MVP development, thanks to features like Artifacts for live previews and Projects for persistent context. These make Claude ideal for teams managing evolving requirements. Companies like Lazy AI, for example, have used Claude to boost internal software development.

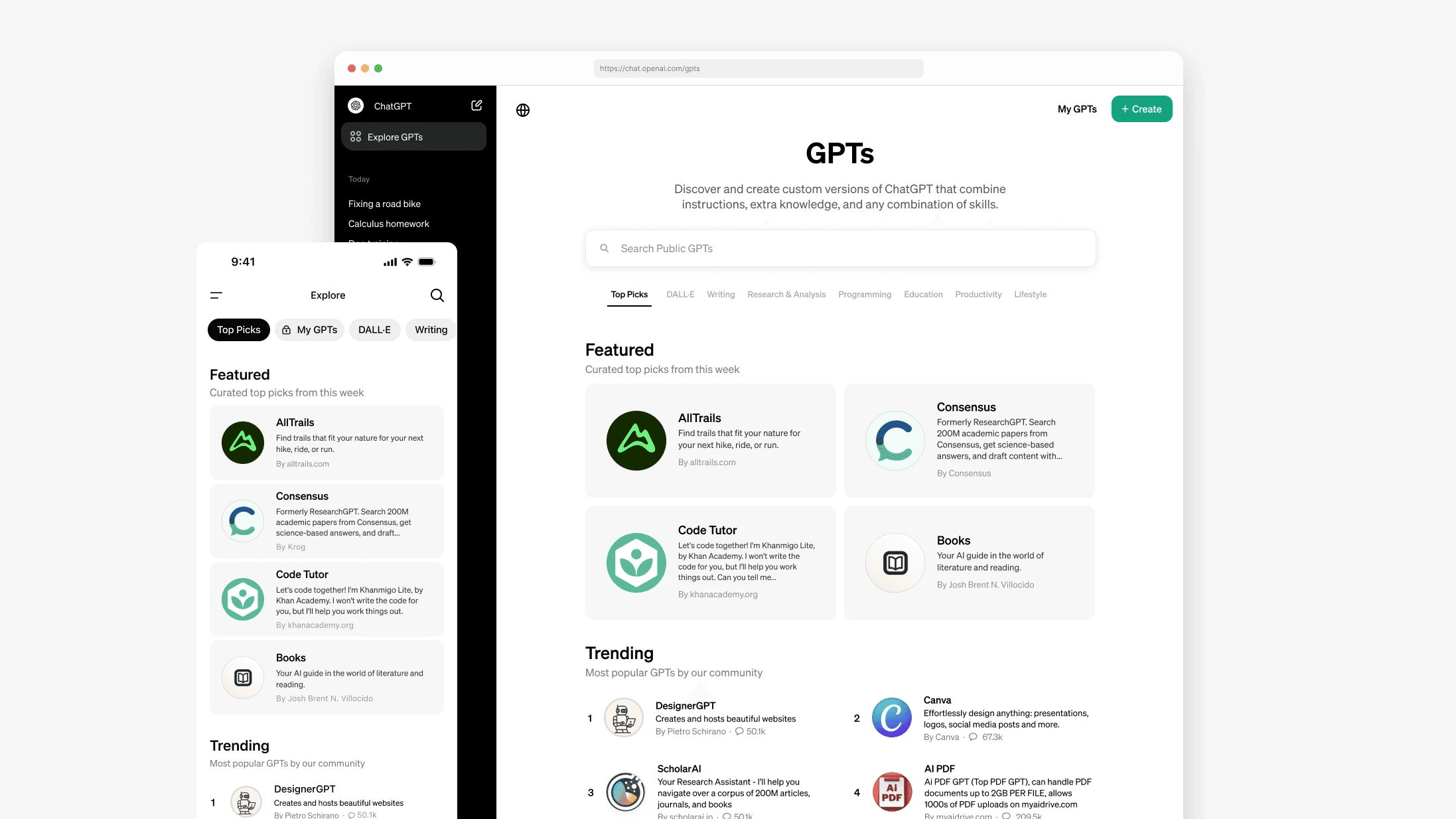

ChatGPT, meanwhile, shines in tech exploration and early product development with its fast generation, wide range of integrations, and ability to quickly spin up supporting content like documentation, APIs, and database schemas. Genmab is a great example of how ChatGPT and OpenAI models can help with a wide range of tasks across a company’s operations, through features like custom GPTs and support for multimodal content.

In enterprise settings, Claude’s large context window and strong reasoning make it well suited for refactoring legacy codebases, standardizing documentation, and automating repetitive tasks with deep context awareness. ChatGPT could complement this with real-time data access, third-party integrations, and a flexible API. Both tools serve distinct enterprise needs, depending on whether depth of analysis or breadth of integration is the priority.

Final insights

Both Claude and ChatGPT are powerful AI coding assistants, but the best choice depends on your goals and project context. If you’re working on large-scale, high-stakes applications (like refactoring legacy systems, analyzing long documents, or managing evolving architectural decisions), Claude offers the edge with its extended context window, structured reasoning, and collaboration-focused features like Artifacts and Projects. It’s particularly well suited for technical leads, backend engineers, and teams that need clarity and consistency across complex codebases.

For developers prioritizing speed, creative flexibility, and broad tool integration, ChatGPT is a more versatile companion. Its real-time data access, integration ecosystem, and memory features make it ideal for prototyping, research, and generalist workflows. Whether you’re experimenting with APIs, generating test data, or writing across disciplines, ChatGPT adapts quickly and helps get things done faster.

Looking ahead, we can expect AI assistants like Claude and ChatGPT to fundamentally reshape software development, making coding more collaborative and turning natural language into a powerful interface for building software. As these tools continue to evolve, choosing the right assistant will become less about raw power and more about how well it fits your team’s rhythm, priorities, and stack.

That wraps up the first part of this series on conversational coding assistants. Stay tuned for the next installment, which explores how Google’s Gemini fares against ChatGPT!