This tutorial was written by Kumar Harsh, a software developer and devrel enthusiast who loves writing about the latest web technology. Visit Kumar's website to see more of his work!

Artificial intelligence (AI) is no longer just a buzzword floating around tech conferences or your Twitter feed. It's sitting right next to you, reviewing your code, naming your functions, and occasionally hallucinating—with surprising confidence. For modern full-stack developers, AI assistants have become essential tools, speeding up boilerplate tasks, helping debug errors, and even suggesting entire architectures when you're just trying to rename a variable.

This three-part series takes a closer look at today's most talked-about AI copilots: ChatGPT, Claude, Gemini, and GitHub Copilot. Part one pitted Claude against ChatGPT. This second installment compares Gemini vs. ChatGPT, focusing on how these two chatbots perform in real-world coding workflows. You'll learn where each shines, how each fits into your existing toolchain, and whether Google's Gemini is really the serious contender it claims to be.

This article covers:

Gemini vs. ChatGPT for code generation quality

Gemini vs. ChatGPT in troubleshooting capabilities

Gemini vs. ChatGPT in integration possibilities

Gemini vs. ChatGPT in business applications

Gemini vs. ChatGPT at a glance

Before we get into a hands-on comparison of coding tasks between Gemini and ChatGPT, it’s helpful to establish some baseline comparisons. Here’s what sets these two programs apart at a high level:

| Category | Gemini | ChatGPT |
|---|---|---|
| Reasoning, problem-solving, and analytical skills | Gemini 2.5 Pro excels at structured reasoning and at breaking complex problems down into manageable steps, which makes it particularly effective for in-depth analysis and codebase refactoring. | ChatGPT (GPT-4.1) improves significantly on previous OpenAI models in coding and instruction following, which makes it highly efficient for software development and debugging. |
| Document analysis and summarization | Gemini may have a slight edge in processing scale. | ChatGPT excels in summarization quality. |
| Emotional intelligence and conversation style | Gemini tends to maintain a professional, factual tone, suited to tasks that require precision and clarity. | ChatGPT offers a more conversational, adaptive style that adjusts to the user's tone, making it well suited to collaborative and creative tasks. |
| Real-time data and web access | Gemini integrates with Google Search to enhance its responses with up-to-date information. | ChatGPT can browse the web in real time to access and cite current information, improving the relevance and accuracy of its responses. |
| Cost, access, and plans | Gemini 2.5 Pro is available through the Gemini Advanced plan for $19.99/month, which also includes Google One storage perks. A heavily restricted preview of the model is available on Gemini's free plan. | ChatGPT's free plan offers the smaller GPT-4.1-mini model with generous usage limits. The full GPT-4.1 model requires the Plus plan ($20/month) or higher, which also unlocks advanced tools like memory, vision, and file handling. |

On the whole, some of the most impactful differences between the models lie in how they reason: Gemini is ideal for comprehensive, structured problem-solving, while ChatGPT is better suited to rapid, code-centric tasks. The two AI coding tools’ biggest point of convergence is document analysis and summarization. The best models available in both chatbots support context windows of up to 1 million tokens, so both can handle large documents, although ChatGPT's app caps usable context far lower than that, as discussed below.

Now, let’s take a deeper dive into what exactly Gemini and ChatGPT are and how each AI coding tool performs on its own.

Understanding Gemini’s utility for coding

Gemini is Google's next-generation family of large language models (LLMs), developed by Google DeepMind. Introduced in December 2023, Gemini is a multimodal AI system capable of understanding and generating text, images, audio, and video. The Gemini models are optimized for various use cases and come in different sizes: Pro, Flash, and Flash-Lite.

The following are its key features and capabilities:

Multimodal processing – Gemini 2.5 Pro natively supports text, code, images, audio, and video inputs, enabling seamless integration across various data types.

Extended context window – It offers a context window of up to 1 million tokens, allowing it to process extensive documents and codebases. This is especially useful for tasks that span many, possibly very large, files. More on this below.

Advanced reasoning – Like Claude 3.7 Sonnet, Gemini 2.5 Pro uses a "thinking" approach to break complex problems down into manageable steps, which improves its problem-solving.

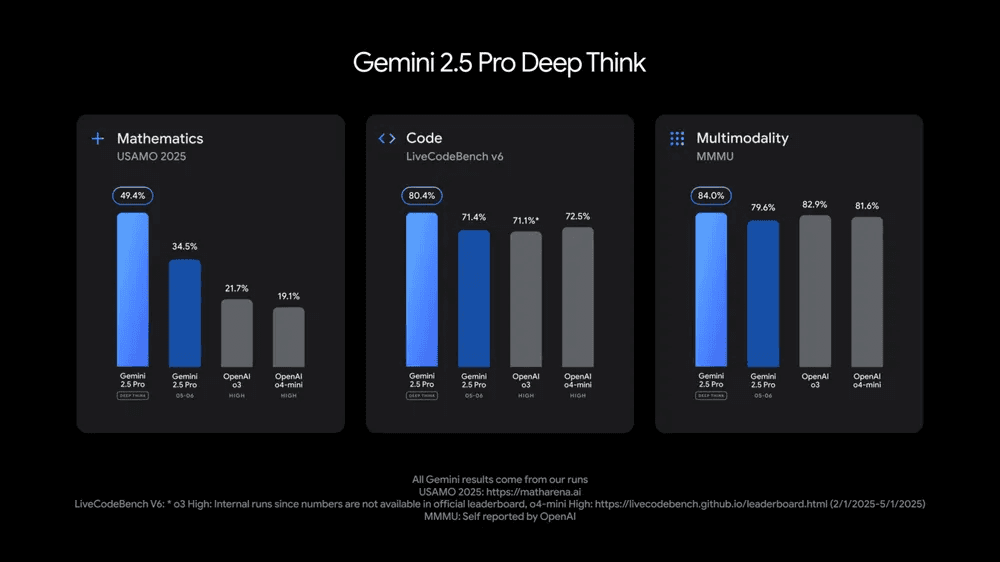

Enhanced coding performance – Gemini 2.5 Pro excels in code generation and transformation, scoring 63.8 percent on the SWE-Bench Verified benchmark, indicating its proficiency in software engineering tasks.

Integration with the Google ecosystem – It integrates with Google Workspace and Google Cloud services, which eases adoption, especially in enterprise setups.

Gemini 2.5 Pro utilizes a transformer-based architecture optimized for multimodal inputs. The model's extended context window supports in-depth analysis of large data sets, making it suitable for tasks like summarizing lengthy documents or analyzing extensive code repositories.

Most commercially available models (i.e., Claude's and OpenAI's non-4.1 models) reportedly show significant drops in response quality beyond about 32,000 tokens, which is at least 16 percent of their total context windows (Claude offers 200,000 tokens and OpenAI 128,000). Applying that same 16 percent ratio to a 1-million-token context window still leaves roughly 160,000 tokens for a single high-quality conversation, far more than the competitors offer. However, note that this extended context is only available on the Gemini Advanced plan, not the regular free Gemini plan.
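The arithmetic behind that estimate, using the more conservative Claude ratio as the baseline, works out as follows:

$$
\frac{32{,}000}{200{,}000} = 16\%, \qquad \frac{32{,}000}{128{,}000} = 25\%, \qquad 0.16 \times 1{,}000{,}000 = 160{,}000 \text{ tokens}
$$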

Understanding ChatGPT’s utility for coding

ChatGPT is OpenAI's flagship conversational AI, widely used across software development, academic research, creative writing, and data processing. Since its debut in November 2022, it has become nearly synonymous with consumer-facing AI tools. ChatGPT supports a range of models from OpenAI's model family, with capabilities spanning natural language, image, and audio inputs.

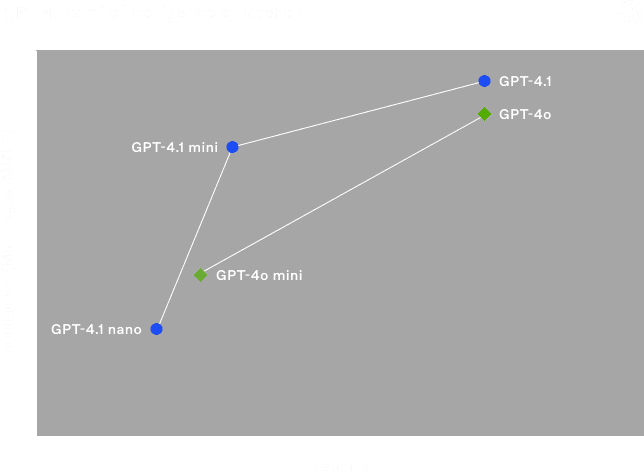

As of May 2025, the default model in ChatGPT is GPT-4o (short for "omni"), a multimodal model designed for fast, context-rich, and interactive use across text, vision, and speech. Since May 14, 2025, ChatGPT has also offered the newer GPT-4.1 and GPT-4.1-mini models.

GPT-4.1 brings significant enhancements over its predecessors. It's designed to excel at coding tasks, instruction following, and long-context comprehension, and it supports a context window of up to 1 million tokens for processing extensive documents and codebases. In ChatGPT, it is available to subscribers on the Plus, Pro, and Team plans, while GPT-4.1-mini is available to free users.

Its key features are as follows:

Enhanced coding capabilities – GPT-4.1 exhibits superior performance in coding tasks, surpassing previous models like GPT-4o and GPT-4.5 in benchmarks such as SWE-Bench Verified.

Improved instruction following – The model exhibits better adherence to complex instructions, making it more reliable for tasks requiring multistep reasoning.

Expanded context window – With support for up to 1 million tokens, GPT-4.1 can handle large-scale documents and data sets without the need for chunking or summarization.

Multimodal capabilities – GPT-4.1 accepts both text and image inputs (and responds in text), which makes it versatile across a wide range of applications.

The newly launched GPT-4.1 supports a context window of up to 1 million tokens through the OpenAI API, putting it on par with Gemini. However, the current ChatGPT pricing page indicates that even ChatGPT Plus users are limited to a context window of 32,000 tokens for any OpenAI model, which is quite restrictive, to say the least.
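When a chat is capped at 32,000 tokens, a common workaround is to split a large file into pieces and send them one at a time. The TypeScript sketch below does that on line boundaries. It assumes a rough heuristic of about four characters per token for English text, which is only an approximation (it is not OpenAI's tokenizer), so leave some headroom below the real limit. The chunkByTokenBudget and estimateTokens helpers are hypothetical names.

```typescript
// Rough heuristic: English text averages about four characters per token.
// This is an approximation, not a real tokenizer.
const CHARS_PER_TOKEN = 4;

// Estimates how many tokens a string will consume under the heuristic.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Splits text on line boundaries into chunks whose estimated size stays
// within maxTokens. A single line longer than the budget is hard-split.
function chunkByTokenBudget(text: string, maxTokens: number): string[] {
  const maxChars = Math.floor(maxTokens) * CHARS_PER_TOKEN;
  if (maxChars < 1) throw new RangeError("maxTokens must be at least 1");

  const chunks: string[] = [];
  let current: string[] = [];
  let currentLen = 0; // always equals current.join("\n").length

  const flush = (): void => {
    if (current.length > 0) chunks.push(current.join("\n"));
    current = [];
    currentLen = 0;
  };

  for (const line of text.split("\n")) {
    // Runs at least once per line so empty lines are preserved.
    for (let start = 0; start === 0 || start < line.length; start += maxChars) {
      const piece = line.slice(start, start + maxChars);
      const separator = current.length > 0 ? 1 : 0; // the "\n" join() adds
      if (currentLen + separator + piece.length > maxChars) flush();
      currentLen += (current.length > 0 ? 1 : 0) + piece.length;
      current.push(piece);
    }
  }
  flush();
  return chunks;
}
```

For example, a budget of around 24,000 tokens per chunk would, under this heuristic, leave room within a 32,000-token cap for your instructions and the model's reply.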

ChatGPT runs on OpenAI's GPT family of transformer-based models, which are pretrained on large text corpora and then fine-tuned with human-in-the-loop supervision and reinforcement learning from human feedback (RLHF). This setup strengthens their pattern recognition and creative generation abilities. However, prompt quality still plays a major role in getting effective results.

Gemini vs. ChatGPT: Code generation quality

As in part one's comparison of Claude and ChatGPT, we’ll use two coding prompts, one for a frontend component and one for a backend script, to evaluate the two models' coding abilities. The prompts contain clear requirements but are purposefully vague in some respects, to see how (or whether) the models fill in the gaps on their own.

In the frontend tests, both tools performed well. ChatGPT gives concise, up-to-date code snippets, while Gemini is extremely detailed in its code explanations.

The backend tests revealed bigger differences. ChatGPT is great for quick backend tasks like adding an endpoint to an internal app, but its snippet implemented few security measures beyond input validation and a handful of security headers. Gemini, by contrast, went all out to satisfy every requirement on the list. That makes it great for vibe coding, but its output is less accessible for developers who just want a quick starting point for their app.

Frontend code generation with both AI coding tools

For the first test pitting Gemini against ChatGPT, let's start with the fundamental frontend coding task of creating a React component. Here's the prompt we’ll use for this test:

Create a Next.js component that displays a list of products fetched from an API endpoint. Each product should show its name, price, and availability status. Format the price as currency (e.g., $12.99), and display a loading indicator while the data is being retrieved. Ensure the component handles errors gracefully by showing an error message if the API request fails.

Importantly, this prompt:

Specifies a framework (Next.js) as opposed to a plain React component;

Requires API data fetching and asynchronous handling;

Instructs on data formatting (currency);

Includes UI states (loading and error handling); and

Asks for multiple data fields per item (name, price, and availability).

These factors will inform what we look for as we evaluate each tool’s outputs.

ChatGPT’s response to our frontend coding test

Let's take a look at how ChatGPT's free version responds to this prompt:

ChatGPT first summarizes how it will address the prompt and then generates a code snippet for the Next.js component. Then, it includes a sample backend API response format to make it easy for you to understand the backend data schema that it uses. Finally, it provides a short snippet demonstrating how to use the Next.js component on a page.

Let's analyze the code returned by the prompt.

The first thing that stands out is that you cannot plug and play this component in your Next.js app, because it uses a hypothetical backend URL (/api/fetch). If you already have a backend ready at this point, you can replace the value of APP_URI with your backend's URL and test the code. Otherwise, you would need to rewrite the fetchProducts function to return a set of dummy products while you develop the component. Because of this, it wouldn't be fair to call it an "easy-to-use" placeholder for the external data source.
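For example, a stand-in fetchProducts along the following lines lets you build the component before any backend exists. This is a minimal sketch: the Product fields, the sample data, and the shouldFail switch for exercising the error state are all assumptions, not part of either chatbot's output.

```typescript
// Hypothetical product shape; the field names are assumptions.
interface Product {
  id: number;
  name: string;
  price: number; // price in US dollars
  inStock: boolean; // availability status
}

const DUMMY_PRODUCTS: Product[] = [
  { id: 1, name: "Mechanical keyboard", price: 89.99, inStock: true },
  { id: 2, name: "USB-C hub", price: 34.5, inStock: false },
  { id: 3, name: "Laptop stand", price: 12.99, inStock: true },
];

// Stand-in for a fetchProducts() that would normally call a backend.
// Pass shouldFail = true to exercise the component's error state.
async function fetchProducts(shouldFail = false): Promise<Product[]> {
  if (shouldFail) {
    throw new Error("Simulated network failure");
  }
  // Return copies so callers cannot mutate the shared fixture.
  return DUMMY_PRODUCTS.map((p) => ({ ...p }));
}
```

Once a real endpoint exists, you can replace the body of fetchProducts with a fetch() call without touching the component itself.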

Next, all the requested fields are included, and the price is formatted as currency, as the prompt requires, using JavaScript's built-in Intl API, which is a good practice.
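For reference, formatting a price as currency with Intl can be as small as the sketch below. The en-US locale and USD currency are assumptions; pass a different locale or currency code to match your audience.

```typescript
// Create the formatter once and reuse it instead of building a new
// Intl.NumberFormat every time a price is rendered.
const usdFormatter = new Intl.NumberFormat("en-US", {
  style: "currency",
  currency: "USD",
});

// Formats a numeric price as US dollars.
function formatPrice(amount: number): string {
  return usdFormatter.format(amount);
}

console.log(formatPrice(12.99)); // "$12.99"
console.log(formatPrice(1234.5)); // "$1,234.50"
```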

Finally, the loading state has been handled appropriately as well.
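One way to model the loading, error, and success states the prompt asks for is a discriminated union, so the component can only ever be in one of them. The framework-agnostic sketch below assumes you would pass a React state setter (for example, from useState) as onChange; the loadProducts name and its injected fetcher parameter are hypothetical.

```typescript
interface Product {
  id: number;
  name: string;
  price: number;
  inStock: boolean;
}

// Exactly one state is active at a time, so the UI can never show a
// loading indicator and an error message simultaneously.
type ProductsState =
  | { status: "loading" }
  | { status: "error"; message: string }
  | { status: "success"; products: Product[] };

// Runs the injected fetcher and reports each state transition through
// onChange, which in a React component would typically be a state setter.
async function loadProducts(
  fetcher: () => Promise<Product[]>,
  onChange: (state: ProductsState) => void,
): Promise<ProductsState> {
  onChange({ status: "loading" });
  let next: ProductsState;
  try {
    next = { status: "success", products: await fetcher() };
  } catch (err) {
    const reason = err instanceof Error ? err.message : "unknown error";
    next = { status: "error", message: `Could not load products: ${reason}` };
  }
  onChange(next);
  return next;
}
```

Injecting the fetcher keeps the state logic testable: the same function works with a real API call or a dummy stub.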

You'll also find an example API object that the component expects, along with an example of how to import and use the component. These are nice to have, and you can easily construct an API in Next.js using the example object to try out the component.

Gemini’s response to our frontend coding test

When tasked with the same prompt, here's what Gemini responds with:

Gemini leads with the code for the Next.js component, followed by a detailed explanation of how it works. Towards the end, it provides the code for an API endpoint you can temporarily create if you want to test out your component with an actual backend API.

As with ChatGPT's response, you cannot plug and play this component in your Next.js app, since it also uses the hypothetical backend URL (/api/fetch). However, Gemini recognizes that Next.js makes it easy to create API routes and provides example API code. But if you look at the file location it suggests for the API route, as well as the function declaration style (export default function handler(req, res)), you'll see that these follow the Pages Router convention that predates the App Router introduced in Next.js 13. This hints that Gemini might be working with outdated Next.js training data.

The JSX structure of the component looks much the same as ChatGPT's version. However, Gemini missed another important detail: the "use client" directive at the top of the file. The component uses hooks, and in the App Router (Next.js 13 and above), components that use hooks such as useState must be Client Components marked with that directive. Gemini most likely omitted it because it was targeting a Next.js version older than 13. The code explanation it provides is quite detailed, though.

The use of Tailwind classes is a major difference from the ChatGPT response. It's a sensible choice, since create-next-app currently offers to set up Tailwind when you create a new project. That makes the code handy for a large number of Next.js developers, whether they are experienced devs or "vibe coders."

For someone coding an app from scratch, this is an excellent starting point. For someone who already uses a different styling system and maybe has more style guidelines to adhere to (such as no icons or a different color scheme), a few more prompts should do the trick.

Backend code generation with both AI coding tools

Here's the prompt for the backend task:

Write an Express.js API endpoint in Node.js that accepts a POST request with a JSON payload containing a product's name, price, and category. The endpoint should validate the input and store the product details in a product database. Also, it should handle errors reasonably. Make sure your implementation considers common security best practices.

As we evaluate each AI coding tool’s response, we’ll be looking for how well it:

Handles the POST request

Implements validations

Stores the details in a dummy database

Responds with descriptive error details

Uses Helmet to add secure HTTP headers to the response

Let’s see how each model performs.

ChatGPT’s response to our backend coding test

Here's what ChatGPT's free version responds with:

ChatGPT starts with a quick summary of how it will address the prompt and then provides the code for the endpoint along with an npm command to install the required dependencies. Towards the end, it summarizes the security considerations it took into account when generating the code.

The code works, and the response is concise, with the snippet meeting all of the requirements laid out in the prompt. As an added benefit, it uses Joi for validation, which is a better choice than writing the checks by hand in cases like these.
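To make concrete what Joi saves you here, the following is a rough, dependency-free equivalent of such checks written by hand. It is not code from either response; the rules (a non-empty name of at most 100 characters, a positive price, and a category from a fixed list) and the validateProduct name are assumptions for illustration.

```typescript
// Hypothetical list of allowed categories; adjust to your catalog.
const ALLOWED_CATEGORIES = ["electronics", "books", "home"] as const;
type Category = (typeof ALLOWED_CATEGORIES)[number];

interface NewProduct {
  name: string;
  price: number;
  category: Category;
}

type ValidationResult =
  | { ok: true; value: NewProduct }
  | { ok: false; errors: string[] };

// Checks an untrusted JSON body and collects every problem at once, so the
// client gets a single descriptive 400 response instead of one error at a time.
function validateProduct(body: unknown): ValidationResult {
  if (typeof body !== "object" || body === null || Array.isArray(body)) {
    return { ok: false, errors: ["Request body must be a JSON object"] };
  }
  const { name, price, category } = body as Record<string, unknown>;
  const errors: string[] = [];

  if (typeof name !== "string" || name.trim().length === 0) {
    errors.push("name must be a non-empty string");
  } else if (name.length > 100) {
    errors.push("name must be at most 100 characters");
  }
  if (typeof price !== "number" || !Number.isFinite(price) || price <= 0) {
    errors.push("price must be a positive number");
  }
  if (
    typeof category !== "string" ||
    (ALLOWED_CATEGORIES as readonly string[]).indexOf(category) === -1
  ) {
    errors.push(`category must be one of: ${ALLOWED_CATEGORIES.join(", ")}`);
  }

  if (errors.length > 0) {
    return { ok: false, errors };
  }
  // Copy only the known fields, so unexpected keys in the payload are dropped.
  return {
    ok: true,
    value: {
      name: (name as string).trim(),
      price: price as number,
      category: category as Category,
    },
  };
}
```

In an Express route, you would call validateProduct(req.body) and respond with res.status(400).json({ errors }) when ok is false. A schema library like Joi expresses the same rules declaratively and covers far more edge cases.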

Gemini’s response to our backend coding test

Here's what Gemini's free version responds with:

Gemini lists out the components it chooses to build the solution with, then spends some time explaining how to create a new Node.js project and set it up. It also includes a database setup file and detailed instructions on creating validation middleware and the API endpoint. Towards the end, you will also see plenty of instructions on how to test out the API once you have set it up.

As you can see, it's an extremely lengthy response compared to ChatGPT's. It uses Helmet and Joi as well, but goes a step further by giving you the project's file structure along with a detailed dummy database. The validation setup is thorough, with a generous set of error messages that you can easily trim if you don't want them all in your code.
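A dummy database like this can be as simple as an in-memory map. The sketch below is not code from either response: ProductStore, DuplicateProductError, and createProduct are hypothetical names, and the duplicate-name rule is an assumed example of a descriptive error. It returns a plain status/body pair that an Express handler could pass to res.status(...).json(...).

```typescript
// Thrown when a product with the same name already exists.
class DuplicateProductError extends Error {
  constructor(productName: string) {
    super(`A product named "${productName}" already exists`);
    this.name = "DuplicateProductError";
  }
}

interface ProductInput {
  name: string;
  price: number;
  category: string;
}

interface StoredProduct extends ProductInput {
  id: number;
}

// In-memory stand-in for a product database. Data is lost when the
// process exits, which is fine for prototyping but not for production.
class ProductStore {
  private byId = new Map<number, StoredProduct>();
  private idByName = new Map<string, number>(); // lowercase name -> id
  private nextId = 1;

  insert(input: ProductInput): StoredProduct {
    const key = input.name.toLowerCase();
    if (this.idByName.has(key)) {
      throw new DuplicateProductError(input.name);
    }
    const record: StoredProduct = { id: this.nextId++, ...input };
    this.byId.set(record.id, record);
    this.idByName.set(key, record.id);
    return record;
  }

  findById(id: number): StoredProduct | undefined {
    return this.byId.get(id);
  }
}

// Translates store outcomes into an HTTP status code and JSON body.
function createProduct(
  store: ProductStore,
  input: ProductInput,
): { status: number; body: unknown } {
  try {
    return { status: 201, body: store.insert(input) };
  } catch (err) {
    if (err instanceof DuplicateProductError) {
      return { status: 409, body: { error: err.message } };
    }
    // Hide internal details (stack traces, driver errors) from clients.
    return { status: 500, body: { error: "Internal server error" } };
  }
}
```

Keeping the storage behind a small class like this makes it easy to swap in a real database later without changing the route handler.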

You'll also find detailed instructions on how to set up the code and run the app, along with plenty of explanations on how the code works.

Gemini vs. ChatGPT: Troubleshooting capabilities

Debugging and writing test cases are inherently context-heavy tasks, which makes them difficult to evaluate without the specific structure and quirks of a real-world project. This section is therefore based on some hands-on experience as well as widespread community feedback; many developers on platforms like Reddit have shared similar impressions when comparing Gemini and ChatGPT for everyday software maintenance tasks.

On the whole, debugging works differently with each AI coding tool. Gemini is methodical and excellent at sticking to best practices, whereas ChatGPT is more flexible and adaptable. ChatGPT also has an edge in generating tests for complex, dynamic environments.

Both models are generally on par in terms of contextual awareness. Gemini offers more stable formatting and longer-term memory in extended sessions, while ChatGPT offers more fluid, natural interaction, sometimes at the cost of precision in code formatting.

Debugging with both AI coding tools

Gemini performs best when debugging is framed as a step-by-step reasoning task. It excels at identifying logical flaws, uninitialized variables, and inconsistent states when given well-structured, isolated code. It also adheres closely to best practices, which can help you avoid some debugging detours altogether. However, it tends to struggle with ambiguous or incomplete context, especially when understanding a broader system or the relationships between multiple files is critical. Its debugging output is methodical but can lack flexibility when the problem isn't clearly defined.

ChatGPT handles debugging in a more conversational and exploratory style. It can ask clarifying questions, simulate test scenarios, and suggest minimal code diffs. In real-world use, it tends to be more effective at identifying root causes across loosely scoped snippets and explaining the implications clearly. Its strength lies in adaptability: even when prompts are vague, ChatGPT often gets closer to what the developer intended than Gemini does. As a result, ChatGPT is generally preferred for live debugging thanks to its responsiveness, conversational depth, and ability to reason across incomplete or messy inputs.

Comparing Gemini and ChatGPT responses

The tendencies described above illustrate what developers can expect when debugging with Gemini or ChatGPT, respectively. However, these tendencies do not always hold true.

For example, let's take the same problem from the first part of this series, where we saw ChatGPT struggling to provide the right, updated solution:

“I am trying to add a static form as a standalone component to an Angular 19.2 app, but receiving the following error: No provider for HttpClient How do I fix it?”

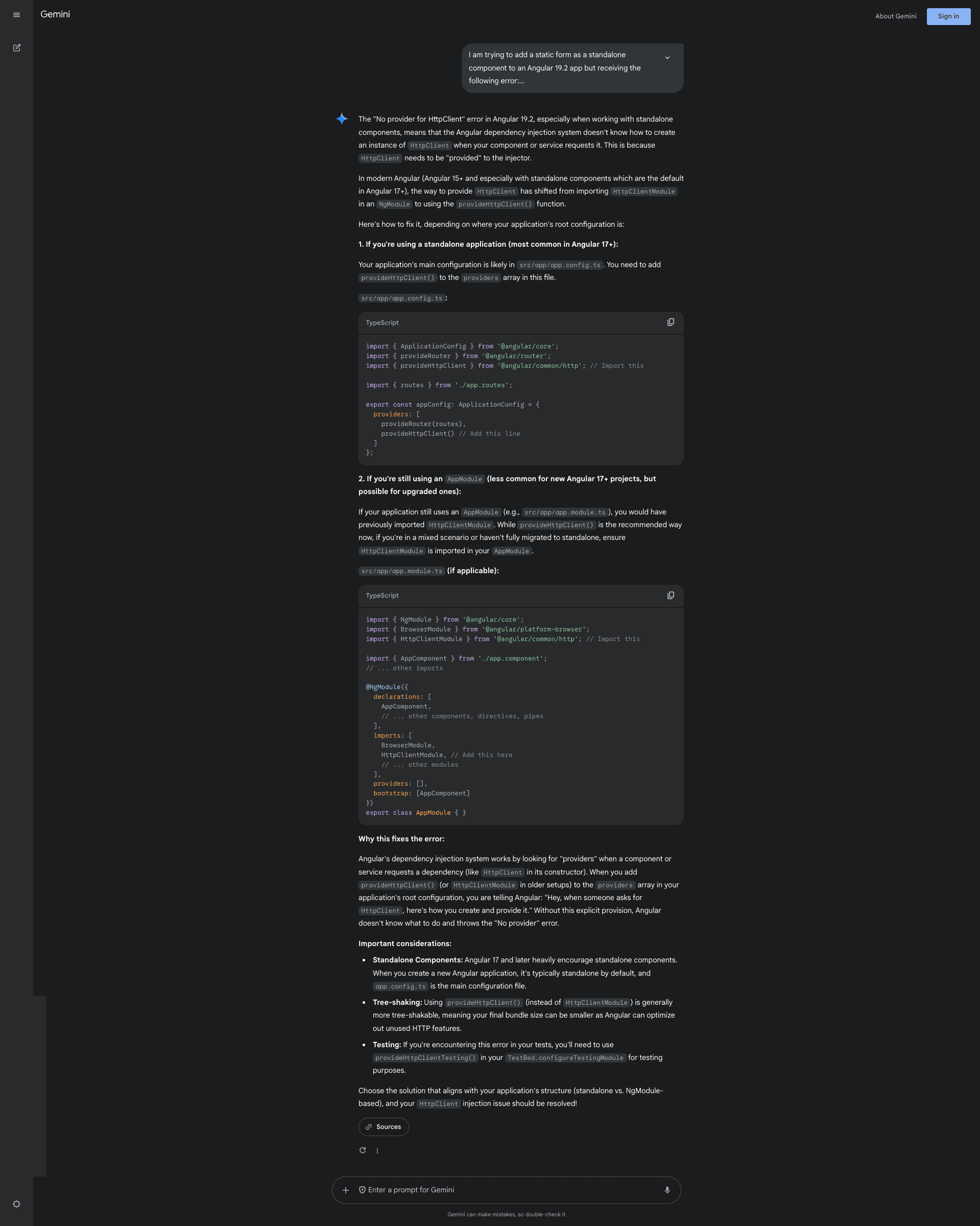

Here's what Gemini responds with:

Gemini describes the potential cause of the problem and then recommends solutions depending on the Angular version your project uses. To help users understand the issue better, it also explains why these solutions fix the problem.

Unlike ChatGPT (and Claude in part one of the series), Gemini pinpoints the issue and offers the correct, up-to-date solution. Surprisingly, if you ask Gemini for its knowledge cutoff date, it reports 2023, when Angular 19.2 was nowhere near release. It can still assemble the right answer because the recommended approach, provideHttpClient(), has been available since Angular 15 and became the standard for standalone setups once Angular 17 made standalone components the default, while the older HttpClientModule approach was deprecated only in Angular 18, in 2024.

This suggests that Gemini has a better grasp of best practices, favoring the up-to-date, recommended solution over the one it has likely seen more often in its training data.

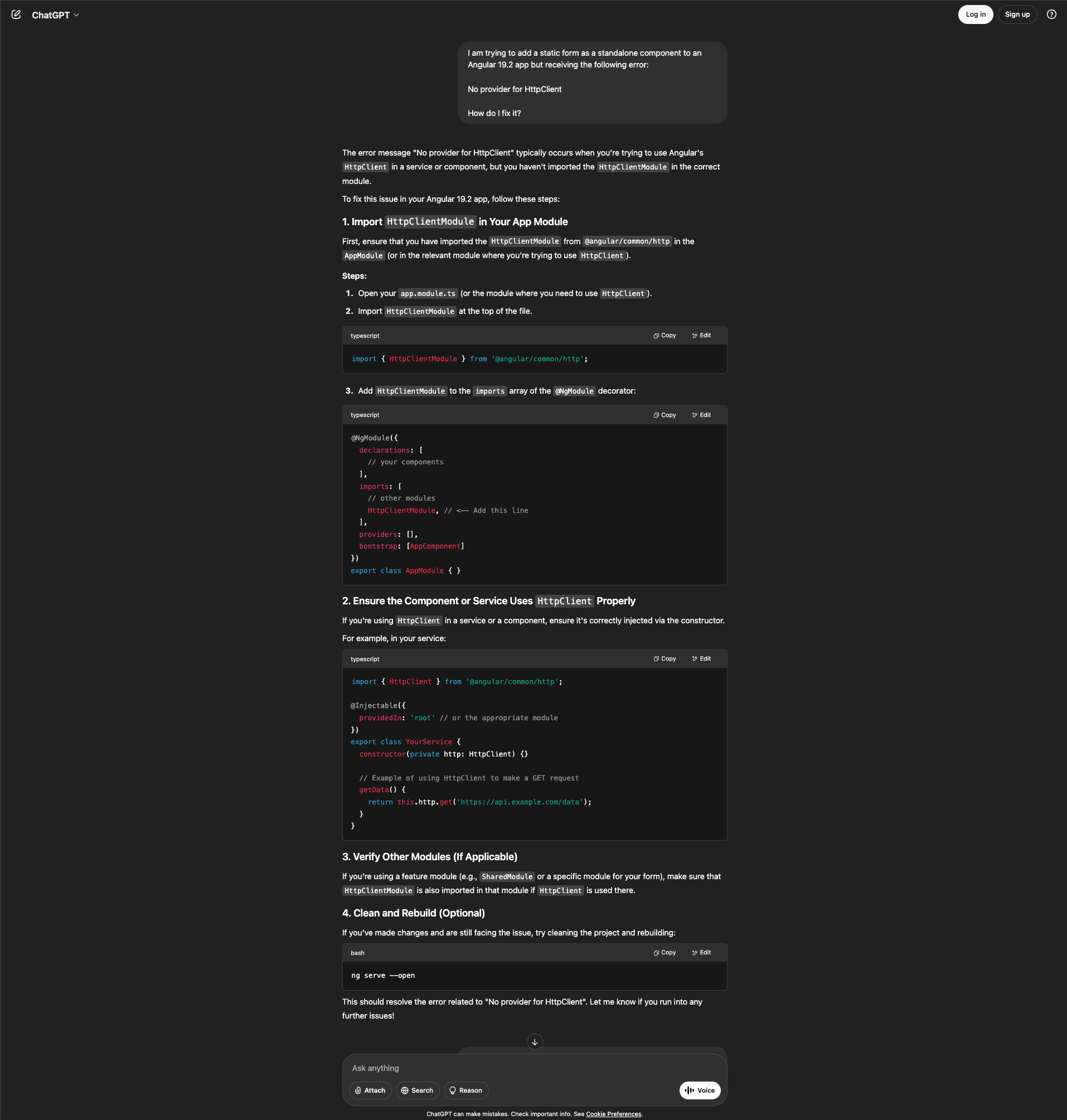

For reference, here's what ChatGPT responded with:

ChatGPT recommended the deprecated solution of using HttpClientModule to solve the problem.

Test generation across both AI coding tools

Gemini 2.5 Pro is fully capable of generating unit tests for straightforward components. ChatGPT with GPT-4.1, on the other hand, delivers strong results in small test generation tasks and scales better in more complex use cases. When working with projects that span multiple files or languages, such as a multimodule Android app in Kotlin, ChatGPT can infer dependencies, generate mocks, and select appropriate test frameworks more reliably.

While both tools handle simple unit test generation well, ChatGPT pulls ahead in complex environments where framework compatibility and test coverage matter most.

Contextual awareness of both AI coding tools

Both Gemini 2.5 Pro and ChatGPT (GPT-4.1) demonstrate strong contextual awareness when it comes to understanding and editing existing codebases. As seen in previous examples throughout this article, both tools can infer variable relationships, follow control flow, and suggest changes that align with the logic and style of the original code.

That said, ChatGPT occasionally stumbles with formatting, especially when generating longer code snippets, sometimes introducing typos or indentation issues that wouldn't compile without tweaking. Gemini, by contrast, tends to produce more cleanly formatted suggestions out of the box, though it may require slightly more guided prompting in loosely scoped edits.

In back-and-forth conversations, both tools are capable of maintaining context and building upon prior messages. Of course, it is imperative that the prompts are clear and scoped to a consistent thread. However, ChatGPT (GPT-4.1) can occasionally "forget" or slightly drift from the previous context in complex multiturn exchanges, especially if they involve formatting-sensitive outputs like HTML, YAML, or code blocks.

Gemini vs. ChatGPT: Integration possibilities

The best AI tool is the one that can most easily integrate into your existing workflows. Here's a comparison of Gemini and ChatGPT (and their models) in terms of their integration possibilities.

With respect to integrated development environment (IDE) integrations, both AI coding tools support key editors like Visual Studio Code. Both integrations feel clean and polished, with the caveat that response quality can vary with prompt complexity.

In terms of API and external connections, each has clear utility. If you're building inside the Google Cloud ecosystem or integrating with Google Workspace tools, Gemini 2.5 Pro is the natural fit. However, for broader adoption, GPT-4.1 provides a more flexible, developer-first API experience with faster onboarding, easier experimentation, and support for a wider range of workflows out of the box.

IDE integrations in both AI coding tools

Gemini offers official support for IDEs, like Visual Studio Code and JetBrains IDEs (including IntelliJ), through its Gemini Code Assist extension. These plugins bring AI-powered autocomplete, code explanations, and debugging tips directly into the development environment. The experience is tightly integrated and designed to reduce context switching, letting developers chat with Gemini, request code completions, or ask for improvements without leaving their editor. However, while Gemini Code Assist is generally reliable, some early reviews have pointed out inconsistencies in code suggestion quality, especially for less common frameworks or languages.

ChatGPT also supports Visual Studio Code through community-developed extensions. These provide live access to GPT-4o for code generation, explanation, and problem-solving tasks. The experience is polished, with ChatGPT typically accessible via a sidebar or command palette. Reliability is strong overall, with consistent results and minimal lag, though (as with Gemini) the quality of responses can vary based on the clarity of the prompt and the complexity of the task.

External service connections in both AI coding tools

Gemini 2.5 Pro is accessible via the Gemini API, hosted on Google Cloud, and supports a wide set of features for developers integrating AI into production environments. Authentication is handled through standard Google Cloud IAM and API key mechanisms, with granular project-level access controls. Gemini's function calling support allows developers to define callable backend functions for setting up AI-powered workflows across applications. Its documentation is polished and enterprise-friendly, though some users may find the setup process more Google Cloud–native than developer-friendly, especially those not already embedded in the ecosystem.

ChatGPT with GPT-4.1, on the other hand, is available via the OpenAI API and is widely praised for its ease of integration. Authentication uses simple API keys, and the platform supports function calling, tool use, and Retrieval-Augmented Generation (RAG) out of the box. GPT-4.1 brings improvements in multistep API workflows and is compatible with custom GPTs, allowing developers to connect external APIs and tools to personalized assistants. OpenAI's developer documentation is extensive, example-rich, and easy to onboard with, even for teams without deep infrastructure experience.
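With function calling on either platform, the model replies with the name of a function you declared plus JSON-encoded arguments, and your application runs the function and sends the result back. The sketch below shows only that application-side dispatch step. The ToolCall shape is a simplified, hypothetical stand-in rather than the exact payload format of the Gemini or OpenAI API, and getProductPrice is an invented example tool.

```typescript
// Simplified, hypothetical shape of a function call requested by a model.
// Real Gemini and OpenAI responses wrap this in provider-specific structures.
interface ToolCall {
  name: string;
  arguments: string; // JSON-encoded arguments generated by the model
}

type ToolHandler = (args: Record<string, unknown>) => unknown;

// Allowlist of functions the model may trigger. Anything not listed here
// is refused, whatever the model asks for.
const tools: Record<string, ToolHandler> = {
  // Hypothetical example tool backed by a hard-coded price table.
  getProductPrice: (args) => {
    const prices: Record<string, number> = { lamp: 19.99, "usb-c hub": 34.5 };
    const name = String(args["name"] ?? "").toLowerCase();
    return Object.prototype.hasOwnProperty.call(prices, name)
      ? { name, price: prices[name] }
      : { error: `Unknown product: ${name}` };
  },
};

// Runs the requested tool and returns a JSON string to send back to the
// model as the function's result.
function dispatchToolCall(call: ToolCall): string {
  if (!Object.prototype.hasOwnProperty.call(tools, call.name)) {
    return JSON.stringify({ error: `Unknown tool: ${call.name}` });
  }
  let parsed: unknown;
  try {
    parsed = JSON.parse(call.arguments);
  } catch {
    return JSON.stringify({ error: "Arguments were not valid JSON" });
  }
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    return JSON.stringify({ error: "Arguments must be a JSON object" });
  }
  return JSON.stringify(tools[call.name](parsed as Record<string, unknown>));
}
```

Treating model-generated arguments as untrusted input, and only executing allowlisted functions, applies regardless of which provider you integrate with.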

Gemini vs. ChatGPT: Business applications

Gemini, integrated within Google Workspace and Google Cloud, is a useful and easily accessible tool for enterprises looking to boost efficiency and collaboration. For instance, FinQuery, a fintech company, uses Gemini to expedite brainstorming sessions, draft emails more swiftly, manage intricate project plans, and assist engineering teams in debugging code and evaluating new monitoring tools.

Another example is Lawme, a legal tech platform that used Gemini's long-context capabilities to simplify contract drafting and client onboarding, reporting improved efficiency and better context retention across legal workflows.

ChatGPT is widely adopted across various sectors for its adaptability and powerful language processing capabilities. At BBVA, over 3,300 employees across departments—like legal, risk, marketing, and HR—adopted ChatGPT Enterprise. Eighty percent of users said the tool saved them at least two hours per week, significantly improving overall productivity. In healthcare, Radfield Home Care used ChatGPT to support tasks such as staff training, compliance documentation, and client communication, improving service quality while reducing operational load.

Gemini vs. ChatGPT for coding: Final insights

Both Gemini (with Gemini 2.5 Pro) and ChatGPT (powered by GPT-4.1) are formidable AI assistants, but they serve slightly different priorities and ecosystems.

Gemini 2.5 Pro is exceptionally strong in structured reasoning, long-context tasks, and Google Cloud–native applications. Its ability to handle massive context windows and process highly detailed inputs makes it a compelling choice for legal tech, document-heavy workflows, and enterprise systems where integration with Gmail, Docs, or Vertex AI is key. If your team works with complex documentation or large-scale data, or already relies on Google infrastructure, Gemini is a natural fit.

ChatGPT with GPT-4.1, in contrast, is designed for speed, flexibility, and general-purpose productivity. It excels in fast prototyping, dynamic API integration, and emotionally intelligent interactions, making it especially valuable in cross-functional teams, client-facing workflows, and creative development. Its custom GPT ecosystem and broad range of integrations offer a significant advantage for teams that need quick results across many tools.

In short, Gemini 2.5 Pro wins on coding depth and scale, while GPT-4.1 wins on versatility and accessibility. However, choosing between Gemini and ChatGPT isn't about which model is better but about which one complements your development style and team culture. As AI continues to evolve, the most successful developers will be the ones who know when to pick the right tool and how to wield it well.

That wraps up the second part of our series comparing leading AI coding assistants. In the final installment, we'll take a look at GitHub Copilot and explore how it stacks up against the others in terms of real-world development experience.

Get the most out of Gemini and/or ChatGPT

In the Gemini vs ChatGPT for coding debate, both models prove themselves to be excellent AI coding tools that dev teams are already leveraging to great effect. The one you choose will likely come down to ecosystem fit and use case, but it’s helpful to understand how they stack up if you have access to both. Our tests found that Gemini tends to excel in high-structure contexts, while ChatGPT tends to be a bit more dynamic and flexible. Looking forward, knowing how to use both will be key to dev success.

For more developer-focused breakdowns and tool comparisons, subscribe to our blog or follow us on LinkedIn, X, and Bluesky.