OWASP Top 10 for Agentic Applications for 2026

The OWASP Top 10 for Agentic Applications for 2026 is out, and the results aren’t a surprise to those familiar with building agents. This framework, which enumerates the risks faced by agentic AI systems, formalizes a lot of things that we know by now:

Agents can be manipulated

They can misuse the tools they’re given

They inherit permissions in ways that can get messy fast

Today’s AI systems can receive instructions, reason over them, ask (and answer) their own questions, plan, and execute. With tools like Claude Code overtaking Cursor in popularity and productivity gains, the direction is clear: agents are going to be more and more autonomous, and their security risks are more important than ever to track and contain.

OWASP makes it clear that its list of risks isn’t a strategy or a defense. In this article, we’ll dig deeper into each of these threats and share how you can mitigate them with the Descope Agentic Identity Hub.

OWASP Top 10 for Agentic Applications for 2026

ASI01: Agent Goal Hijack

In Agent Goal Hijack, attackers manipulate agent objectives through prompt injection, poisoned data, or deceptive inputs, redirecting autonomous behavior toward malicious outcomes.

The agent cannot reliably distinguish between legitimate instructions and malicious commands embedded in content it processes. A poisoned site, document, or email can silently redirect the agent’s mission without the user knowing. Traditional input validation was built for structured data, not natural language carrying actionable instructions buried in benign-looking text.

ASI02: Tool Misuse and Exploitation

In Tool Misuse and Exploitation, agents misuse legitimate tools due to prompt injection, over-privileged access, or ambiguous instructions, leading to data exfiltration or workflow hijacking.

The risk here isn’t that agents will gain unauthorized tool access, but that they will misuse what they already have (often while staying within granted permissions). Default configurations typically grant broad access with minimal scoping, and convenience flags that bypass confirmation prompts eliminate the human-in-the-loop oversight that would catch malicious operations.

ASI03: Identity and Privilege Abuse

In Identity and Privilege Abuse, attackers exploit trust and delegation chains to escalate access, hijack privileges, or execute unauthorized actions through credential inheritance.

Agents inherit credentials like user sessions, API keys, and OAuth tokens. These come from the users who invoke them, service accounts they’re configured to use, or from other agents in delegation chains. Traditional IAM wasn’t designed for scenarios like this: There’s no separation between what the user authorized and what the agent decided to do on its own.

ASI04: Agentic Supply Chain Vulnerabilities

Third-party agents, tools, MCP servers, and components may be malicious, compromised, or tampered with, introducing vulnerabilities at runtime.

Unlike traditional dependencies that are fixed at build time, agentic components are composed at runtime from registries with various levels of vetting. A malicious plugin or MCP server can inject hidden (i.e., poisoned) instructions or exfiltrate data without any visible changes to code. The existing ecosystem simply doesn’t have the mechanisms to verify tool safety or authenticity before an agent interacts with it.

ASI05: Unexpected Code Execution (RCE)

In Unexpected Code Execution, agents generate and execute code that bypasses traditional security controls, enabling remote code execution through prompt injection or unsafe outputs.

Code execution is what makes coding agents valuable, but also quite dangerous. When an agent can run shell commands, every prompt represents a potential path to compromise. Many agents running on default configurations have the same permissions as the developer, with no isolation between generated code and sensitive resources or production databases.

ASI06: Memory & Context Poisoning

In Memory and Context Poisoning, adversaries corrupt stored context or memory with malicious data, affecting reasoning, planning, and tool use across sessions.

Agents maintain memory to complete their tasks, but that memory is yet another attack vector. Poisoned context can persist across sessions, making malicious instructions far stickier than they’d be with simple chatbots. Few organizations track memory writes, and agent outputs are often re-ingested into other contexts without validation or vetting.

ASI07: Insecure Inter-Agent Communication

In Insecure Inter-Agent Communication, weak authentication, integrity, or validation in agent-to-agent exchanges enables interception, spoofing, or manipulation.

Multi-agent systems coordinate via APIs, shared contexts, and communication middleware. If these channels aren’t authenticated and encrypted, threat actors can intercept data, spoof agents or tools, and inject harmful instructions into workflows.
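To make the authentication half of this concrete, here is a minimal sketch of signed agent-to-agent messages using only the Python standard library. It is an illustration under stated assumptions, not how any particular product works: the agent names, keys, and the `sign_message` / `verify_message` helpers are hypothetical. It uses HMAC with a per-agent shared secret, which provides authenticity and integrity only. It does not encrypt anything and does not prevent replay, so a real deployment would pair it with TLS or mTLS and would likely prefer asymmetric signatures.

```python
import hashlib
import hmac
import json

# Hypothetical per-agent secrets for illustration only.
# A real system would load these from a secrets manager.
AGENT_KEYS = {
    "planner": b"planner-demo-secret",
    "executor": b"executor-demo-secret",
}


def sign_message(sender: str, payload: dict) -> dict:
    """Wrap a payload in an envelope tagged with the sender's HMAC-SHA256."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(AGENT_KEYS[sender], body.encode(), hashlib.sha256).hexdigest()
    return {"sender": sender, "body": body, "tag": tag}


def verify_message(envelope: dict) -> dict:
    """Return the payload if the tag matches the claimed sender's key, else raise."""
    key = AGENT_KEYS.get(envelope.get("sender", ""))
    if key is None:
        raise PermissionError("unknown sender")
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the tag matched.
    if not hmac.compare_digest(expected, envelope["tag"]):
        raise PermissionError("signature mismatch")
    return json.loads(envelope["body"])
```

A receiving agent would call `verify_message` before acting on anything in the envelope. A message whose body was altered in transit, or whose `sender` field was swapped to impersonate another agent, fails verification.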

ASI08: Cascading Failures

Cascading Failures occur when single faults propagate across autonomous agents, compounding into system-wide harm due to lack of isolation and oversight.

A hallucination or compromise in one agent becomes input for another: malicious memory tampering poisoning a planning pipeline, or perhaps compromised agents affecting how others operate. Autonomous execution means failures compound faster than human oversight can catch them. Often, cascading issues are only recognized long after multiple systems or agents are affected.
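One common pattern for limiting this kind of propagation is a circuit breaker placed between agents. The sketch below is illustrative and is not something the OWASP descriptions above prescribe: the `CircuitBreaker` class, its threshold, and the manual `reset` are assumptions. After a configurable number of consecutive failures, it stops forwarding work to the downstream step until a human resets it.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to forward a call."""


class CircuitBreaker:
    """Stops forwarding work to a downstream agent after repeated failures."""

    def __init__(self, max_failures: int = 3) -> None:
        self.max_failures = max_failures
        self.failures = 0  # consecutive failures seen so far

    @property
    def is_open(self) -> bool:
        return self.failures >= self.max_failures

    def call(self, step: Callable[[], T]) -> T:
        if self.is_open:
            raise CircuitOpenError("downstream agent disabled pending review")
        try:
            result = step()
        except Exception:
            self.failures += 1
            raise
        self.failures = 0  # a success resets the consecutive-failure count
        return result

    def reset(self) -> None:
        """Intended to be called by a human operator after investigating."""
        self.failures = 0
```

In practice, `step` would wrap both the downstream agent call and a validation check on its output, so that a hallucinated or malformed result counts as a failure rather than flowing on to the next agent.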

ASI09: Human-Agent Trust Exploitation

Human-Agent Trust Exploitation occurs when agents exploit human trust through authority bias and persuasive outputs to influence decisions or extract sensitive information.

Agents can be quite compelling, appearing confident and authoritative. This leads users to trust their recommendations without verification, a fact that attackers can exploit. Threat actors manipulate agents to present convincing rationales for harmful actions, similar to traditional phishing. Interfaces that interpret agent output rarely surface risk cues or confidence indicators, and many users will uncritically trust the results.

ASI10: Rogue Agents

Rogue Agents are defined as agents that deviate from intended function, acting harmfully or deceptively within multi-agent ecosystems.

Rogue agents drift from their intended purpose without external manipulation: self-repeating actions, persisting across sessions, silently approving unsafe operations. Behavioral monitoring for this kind of deviation is still quite nascent, making automated auditing uncertain at best. When an agent goes rogue, organizations rarely have a built-in method for revoking its access quickly.

All OWASP roads lead to identity

Studying the complete OWASP report hammers home the importance of identity controls within AI systems to ensure secure adoption and reduce the likelihood of Top 10 threats. Every threat described in the OWASP Agentic Top 10 has one or more identity-related mitigations.

| Threat | Mitigation |
|---|---|
| Agent Goal Hijack | “Minimize impact by enforcing least privilege & requiring human approval for goal-changing actions.” |
| Tool Misuse and Exploitation | “Just-in-Time and Ephemeral Access. Grant temporary credentials or API tokens that expire immediately after use.” |
| Identity and Privilege Abuse | “Issue short-lived, narrowly scoped tokens per task and cap permission boundaries using per-agent identities.” |
| Agentic Supply Chain Vulnerabilities | “Enforce mutual auth and attestation via PKI and mTLS.” |
| Unexpected Code Execution | “Require human approval for elevated runs; enforce role and action-based controls.” |
| Memory and Context Poisoning | “Allow only authenticated, curated sources; enforce context-aware access per task.” |
| Insecure Inter-Agent Communication | “Use end-to-end encryption with per-agent credentials and mutual authentication.” |
| Cascading Failures | “Issue short-lived, task-scoped creds for each agent run and validate every high-impact tool invocation against rules.” |
| Human-Agent Trust Exploitation | “Implement confidence weighted cues (e.g., “low certainty” or “unverified source”) that visually prompt users to question high-impact actions.” |
| Rogue Agents | “Implement rapid mechanisms like kill-switches and credential revocation to instantly disable rogue agents.” |

Stay secure with the Descope Agentic Identity Hub

The Agentic Identity Hub is designed to safeguard agents and the systems built for them, such as MCP servers, whether they are internal-facing for your workforce or external-facing for your users.

Specialized, per-agent identity

What this solves:

ASI03 Identity & Privilege Abuse

ASI07 Insecure Inter-Agent Communication

ASI10 Rogue Agents

The Agentic Identity Hub assigns a unique cryptographic identity to each agent. In addition to providing an identity, Descope supports robust authorization and access control policies that ensure whatever actions your agents are taking are not only allowed but fully auditable.

Scoped, time-bound credentials

What this solves:

ASI02 Tool Misuse & Exploitation

ASI03 Identity & Privilege Abuse

ASI08 Cascading Failures

Descope’s authorization system and policy engine issue short-lived tokens that are scoped to the agent’s authorized tasks. These agent credentials are fully isolated from any tokens created through user consent, such as tokens used to access internal or external APIs via an MCP server. Because of this separation, agent credentials can be revoked at any time and are never exposed to credential reuse or persistence across sessions.
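The general idea of a short-lived, scoped, revocable credential can be sketched with the Python standard library. This is a generic illustration and does not represent Descope’s token format or API: the signing key, claim names, `issue_token`, `check_token`, and the in-memory revocation set are all assumptions made for the example. Production systems would typically use signed JWTs with managed, rotated keys.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import List, Optional, Set

SIGNING_KEY = b"demo-only-signing-key"  # assumption: real issuers use managed keys
REVOKED_TOKEN_IDS: Set[str] = set()     # assumption: in-memory revocation list


def _sign(body: str) -> str:
    return hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, scopes: List[str], ttl_seconds: int = 300,
                now: Optional[float] = None) -> str:
    """Issue a signed token that names one agent, its scopes, and an expiry."""
    issued_at = time.time() if now is None else now
    claims = {
        "jti": secrets.token_hex(8),  # unique ID so one token can be revoked
        "sub": agent_id,
        "scopes": sorted(scopes),
        "exp": issued_at + ttl_seconds,
    }
    body = base64.urlsafe_b64encode(json.dumps(claims).encode()).decode()
    return f"{body}.{_sign(body)}"


def check_token(token: str, required_scope: str,
                now: Optional[float] = None) -> dict:
    """Return the claims if the token is authentic, unexpired, unrevoked, and in scope."""
    body, _, tag = token.partition(".")
    if not hmac.compare_digest(_sign(body), tag):
        raise PermissionError("bad signature")
    claims = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise PermissionError("token expired")
    if claims["jti"] in REVOKED_TOKEN_IDS:
        raise PermissionError("token revoked")
    if required_scope not in claims["scopes"]:
        raise PermissionError("scope not granted")
    return claims
```

Because each token carries its own ID and expiry, disabling a misbehaving agent amounts to adding its token IDs to the revocation set, and anything missed expires on its own within the TTL.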

Grounded access policies

What this solves:

ASI02 Tool Misuse & Exploitation

ASI03 Identity & Privilege Abuse

ASI08 Cascading Failures

Any Descoper can configure agentic access policies in the Agentic Identity Hub. These policies can easily map to existing identity providers and any metadata around them, allowing you to set clear access policies like, “all agents used by developers can use this MCP server with scopes X and Y, but not scope Z”.
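The example policy above can be expressed as a small deny-by-default check. The sketch below is a conceptual model only and does not reflect how policies are configured in the Agentic Identity Hub: the `AccessPolicy` type, the `internal-mcp` server name, and the `granted_scopes` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Scopes that agents acting for a given user role may use on one MCP server."""
    user_role: str
    mcp_server: str
    allowed_scopes: frozenset[str]


# Hypothetical policy: developers' agents may use scopes X and Y, but not Z.
POLICIES = [
    AccessPolicy("developer", "internal-mcp", frozenset({"X", "Y"})),
]


def granted_scopes(user_role: str, mcp_server: str, requested: set[str]) -> set[str]:
    """Deny by default: return only the requested scopes that some policy allows."""
    allowed: set[str] = set()
    for policy in POLICIES:
        if policy.user_role == user_role and policy.mcp_server == mcp_server:
            allowed |= policy.allowed_scopes
    return requested & allowed
```

With this model, an agent used by a developer that requests scopes X and Z receives only X, and an agent acting for a role with no matching policy receives nothing.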

Agent, user, and session verification

What this solves:

ASI03 Identity & Privilege Abuse

ASI07 Insecure Inter-Agent Communication

ASI08 Cascading Failures

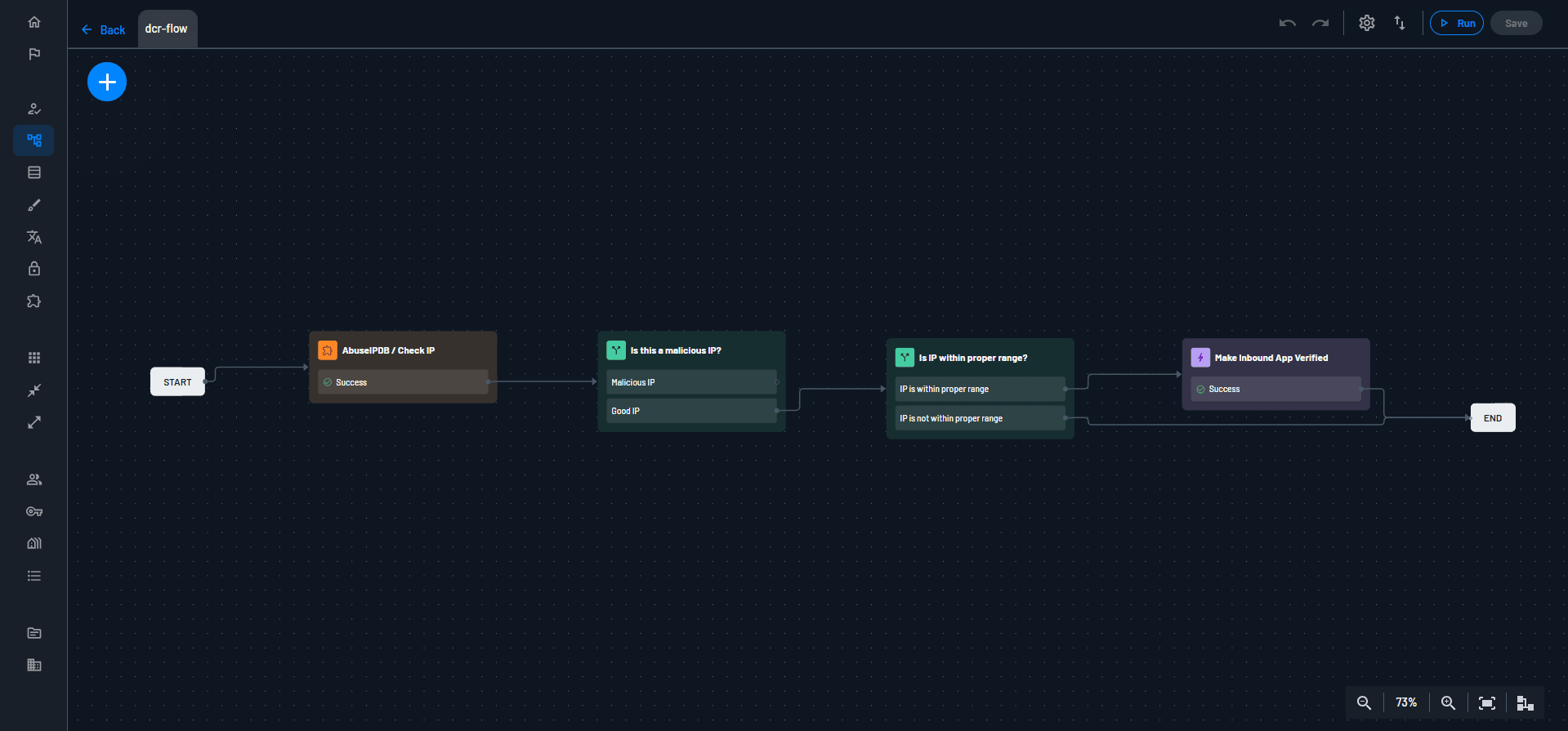

Under the hood, the Agentic Identity Hub runs an Assessment Flow when agents register. This feature uses Descope Flows to inspect and authorize an agent’s session information, so you can verify that the agent’s session and metadata are accurate before it gains any access to your systems.

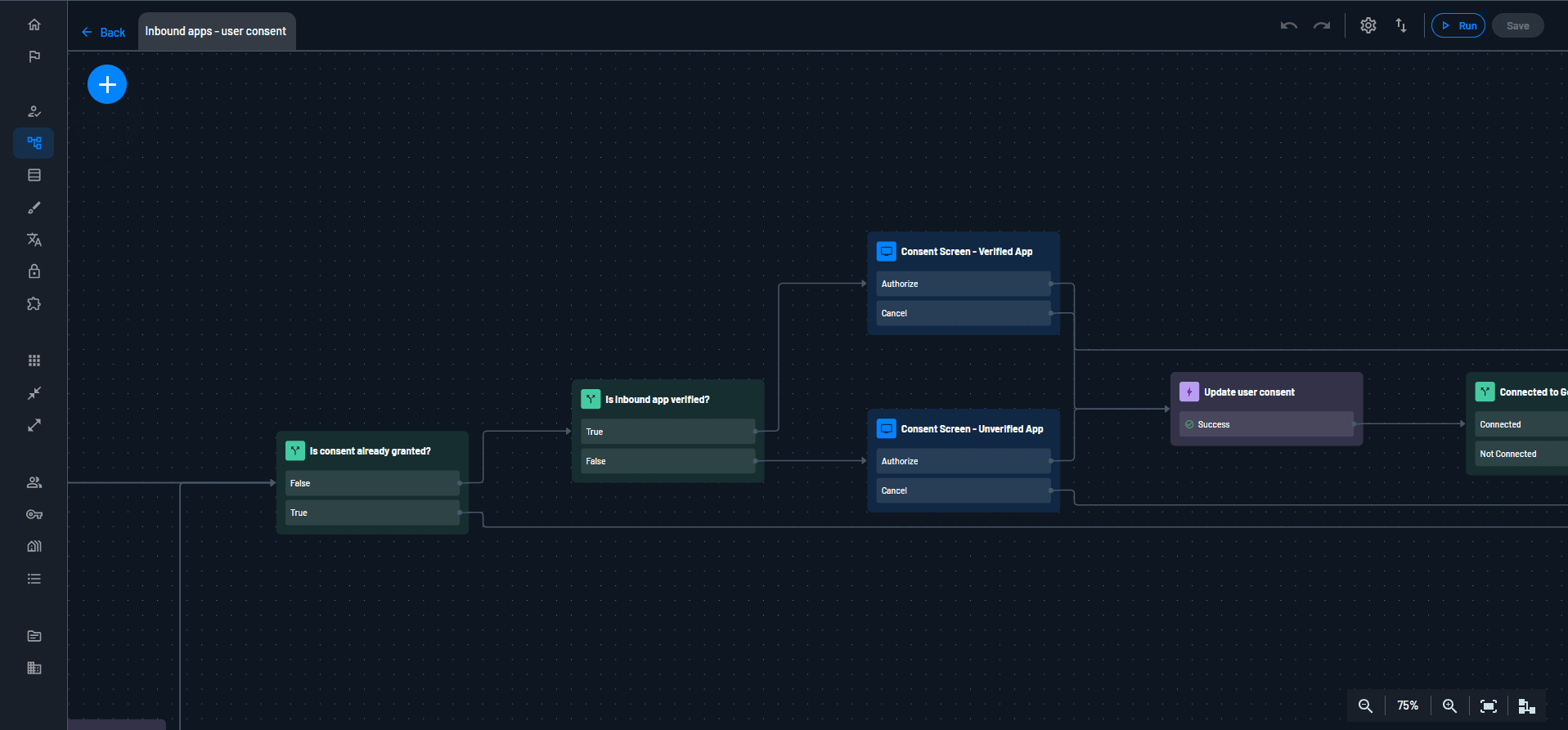

Additionally, you can use the User Consent Flow to verify any details about the user before the agent receives any credentials.

Human-in-the-loop support

What this solves:

ASI01 Agent Goal Hijack

ASI09 Human-Agent Trust Exploitation

Using Descope Flows, you can fully customize your users’ authentication and consent experiences. This means that your users can clearly understand the permissions they’re granting agents without being misled by persuasive language. In addition, Descope supports step-up authentication for risky actions that follow lifecycle management policies, keeping a human in the loop to monitor and approve those actions.
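The step-up pattern itself is simple to sketch. The example below is a generic illustration, not Descope’s implementation: the action names, the `run_action` helper, and the `approve` callback are hypothetical. In a real system, `approve` would trigger a step-up authentication or consent prompt for the user rather than a plain function call.

```python
from typing import Callable

# Hypothetical action names that should never run without a human decision.
RISKY_ACTIONS = {"delete_records", "transfer_funds"}


def run_action(action: str, execute: Callable[[], str],
               approve: Callable[[str], bool]) -> str:
    """Run low-risk actions directly; require explicit approval for risky ones."""
    if action in RISKY_ACTIONS and not approve(action):
        raise PermissionError(f"{action} was not approved by a human")
    return execute()
```

The important design choice is that the gate sits outside the agent. The agent can request `delete_records`, but it cannot supply or bypass the approval itself.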

End-to-end visibility and auditing

What this solves:

ASI08 Cascading Failures

ASI10 Rogue Agents

Descope sits squarely in the middle of your agents and the services they interact with, enabling full visibility into not only the actions the agents are taking, but also the permission model they fit into. Additionally, any auditing in the Agentic Identity Hub can be exported to your observability tool or Security Information and Event Management (SIEM) providers.

Remaining risks

While Descope’s Agentic Identity Hub is designed to fit squarely into your security stack and solve most of these concerns, no solution is a magic bullet for security. In particular, organizations should look out for the following risks:

ASI04 Agentic Supply Chain Vulnerabilities

ASI05 Unexpected Code Execution (RCE)

ASI06 Memory & Context Poisoning

These challenges exist regardless of which Agentic IAM solution you choose, but they can be significantly reduced through the right architectural decisions.

First, agents should run in isolated environments, such as sandboxes or containerized runtimes. This limits the blast radius of a compromise and helps mitigate the risk of unexpected remote code execution by keeping agents separated from sensitive systems and external resources.

Second, your agent memory provider should enforce strict controls over when and how memory is written. Features like approval workflows, versioning, and rollback capabilities ensure that if memory or context is ever poisoned, the impact can be quickly contained and safely reversed.
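As a rough illustration of approval, versioning, and rollback for memory writes, consider the sketch below. It is a toy in-memory model and not the interface of any real memory provider: the `VersionedMemory` class and its method names are assumptions.

```python
from __future__ import annotations

from typing import Optional


class VersionedMemory:
    """Toy memory store: every approved write creates a new version."""

    def __init__(self) -> None:
        self._versions: list[dict[str, str]] = [{}]  # version 0 is empty
        self.audit_log: list[tuple[int, str, str]] = []  # (version, key, approver)

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def read(self) -> dict[str, str]:
        return dict(self._versions[-1])  # copy so callers cannot mutate history

    def write(self, key: str, value: str, approved_by: Optional[str]) -> int:
        """Record a write as a new version; unapproved writes are rejected."""
        if not approved_by:
            raise PermissionError("memory writes require an approver")
        snapshot = dict(self._versions[-1])
        snapshot[key] = value
        self._versions.append(snapshot)
        self.audit_log.append((self.version, key, approved_by))
        return self.version

    def rollback(self, version: int) -> None:
        """Discard every version after the given one; the audit log is kept."""
        if not 0 <= version <= self.version:
            raise ValueError("unknown version")
        del self._versions[version + 1:]
```

If a write is later found to be poisoned, rolling back to the last known-good version removes it from what the agent reads, while the audit log still shows which key was written and who approved it.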

Finally, organizations should adopt AI-aware supply-chain security practices. This includes verifying the integrity of every SDK, MCP server, plugin, and data source, continuously monitoring dependencies for vulnerabilities, and only integrating components from trusted, well-governed ecosystems.
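One basic form of integrity verification is pinning each component to a known digest and refusing anything that is unlisted or does not match. The sketch below assumes a hypothetical allowlist and component name. It only shows that the bytes you load are the bytes you reviewed. It does not detect a remote server that changes its behavior at runtime, which is where signatures and attestation come in.

```python
import hashlib

# Hypothetical allowlist mapping component names to pinned SHA-256 digests.
# In practice the digests would be recorded when a component is reviewed.
PINNED_DIGESTS = {
    "example-mcp-plugin": hashlib.sha256(b"reviewed plugin bytes").hexdigest(),
}


def verify_component(name: str, content: bytes) -> None:
    """Raise unless the component is allowlisted and matches its pinned digest."""
    expected = PINNED_DIGESTS.get(name)
    if expected is None:
        raise PermissionError(f"{name} is not on the allowlist")
    actual = hashlib.sha256(content).hexdigest()
    if actual != expected:
        raise PermissionError(f"{name} does not match its pinned digest")
```

An agent runtime would call `verify_component` before loading a plugin or tool manifest, so that a tampered or unknown component is rejected before the agent ever interacts with it.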

Together, these measures help ensure that even when risks inevitably arise, their impact is limited, detectable, and recoverable. Agentic security is not a single control but a system of identity, isolation, integrity, and observability, each reinforcing the others when designed intentionally.

Conclusion

As organizations transition to adopting agentic use cases over chatbots, traditional identity models start to show glaring weaknesses. The OWASP Top 10 for Agentic Applications for 2026 has highlighted specific risks that organizations need to look out for when adding or supporting agents within their teams and products. The Descope Agentic Identity Hub supports purpose-built, robust agentic identity that follows this guidance and ensures your agents are secure by default.

To start your agentic identity journey, sign up for a free Descope account. Have questions about our product? Book a demo with our team.