
As organizations increasingly integrate AI agents, tools, and autonomous workflows into their operations and products, you need to secure your APIs for the users of tomorrow—both human and machine. That means minimizing the scope of access for every action taken.

Giving a user more API permissions than needed is like handing someone a chainsaw when a pocket knife will do. Sure, it gets the job done—messily and dangerously. Whether triggered by a human user or an AI agent acting on their behalf, each API request should carry only the permissions needed for the task at hand.

This principle, known as progressive scoping, focuses on intent—what the tool is trying to do—and whether it has permission to do so.

This guide walks through three critical aspects of progressive scoping:

How ambiguous scope requirements create security vulnerabilities when tokens are compromised

Why defining scopes in a machine-readable format creates a more secure, frictionless flow

How token management systems can cache and serve the right permissions at the right time

Understanding scopes with Descope Inbound Apps

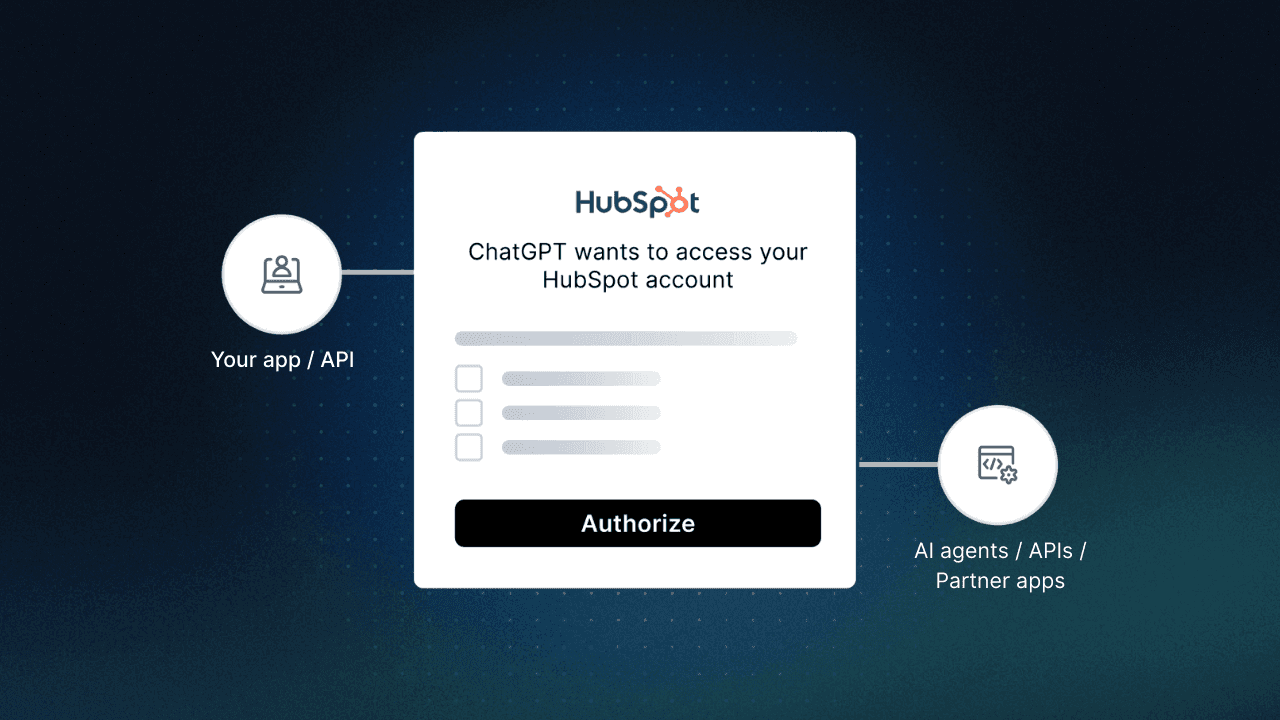

Before diving deeper into progressive scoping, it’s important to first understand what scopes are and how they’re issued—particularly when working with OAuth-based authorization through platforms like Descope.

In OAuth, a scope represents a specific permission that a token grants. These scopes define what an app or agent can do on behalf of a user, such as reading contacts, writing to a CRM, or exporting analytics. The goal is to ensure that a token can do only what it has been explicitly permitted to do.

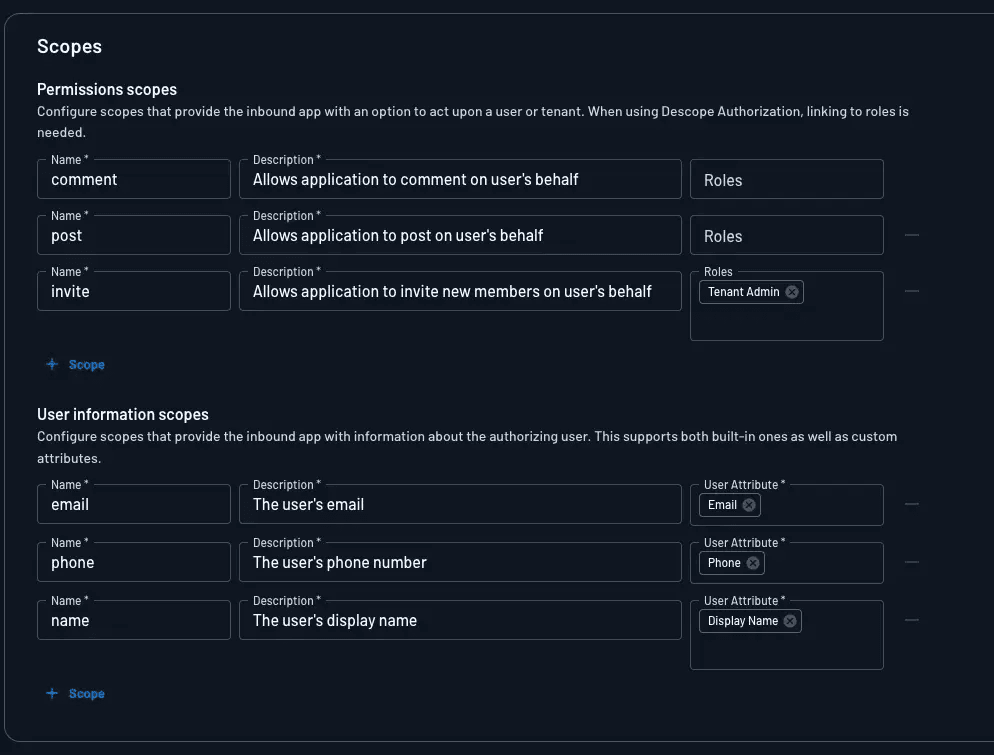

Descope supports this model through Inbound Apps, which allow external tools, AI agents, or partner applications to connect via OAuth and request access tokens for specific scopes. These tokens can be tightly scoped, dynamically issued, and refreshed based on both application context and user-specific permissions.

Roles and scope mapping

Descope also supports a flexible mapping between roles and scopes, making it easier to translate a user’s identity into meaningful API access. For example:

A user with the admin role may receive broad scopes like contacts:read, contacts:write, and crm:*

A Support Agent role might be limited to tickets:read and customers:view

A Viewer role may only receive analytics:read

This mapping can be configured directly in Descope, either statically through role-to-scope templates or dynamically through Flows.
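To make the static variant concrete, here is a minimal Python sketch that resolves a user's roles into a scope set using the example roles above. The `ROLE_SCOPES` table, the `support_agent` role key, and both helper functions are hypothetical; in practice the mapping would live in Descope configuration rather than application code, and treating `crm:*` as a wildcard pattern is an assumption about how your API interprets it.

```python
from fnmatch import fnmatchcase

# Hypothetical role-to-scope table mirroring the examples above.
ROLE_SCOPES: dict[str, set[str]] = {
    "admin": {"contacts:read", "contacts:write", "crm:*"},
    "support_agent": {"tickets:read", "customers:view"},
    "viewer": {"analytics:read"},
}


def scopes_for_roles(roles: list[str]) -> set[str]:
    """Union the scopes of every role the user holds; unknown roles add nothing."""
    granted: set[str] = set()
    for role in roles:
        granted |= ROLE_SCOPES.get(role, set())
    return granted


def is_allowed(granted: set[str], required: str) -> bool:
    """True if any granted scope matches `required`.

    Assumption: granted scopes are treated as shell-style patterns, so
    "crm:*" covers "crm:deals:write". Exact scopes match only themselves.
    """
    return any(fnmatchcase(required, pattern) for pattern in granted)


if __name__ == "__main__":
    support = scopes_for_roles(["support_agent"])
    print(sorted(support))
    print(is_allowed(support, "tickets:read"))
    print(is_allowed(scopes_for_roles(["admin"]), "crm:deals:write"))
```

Unioning across roles means a user with several roles receives the sum of their permissions, which is the usual role-based behavior and one more reason to keep each role's scope list tight.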

Attribute-based scoping

Beyond roles, user attributes can also be used to generate context-aware scopes. For instance:

A user in the sales department may be granted pipeline.update

A user with subscription=pro might receive access to analytics.export

A user tied to the tenant=acme-inc organization might have all scopes qualified with the tenant or filtered accordingly (e.g., crm.write:acme)

This approach allows you to tailor the exact permissions a token should carry based on both who the user is and what they’re allowed to do—without requiring every tool or agent to hardcode logic about user roles or departments.
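The attribute rules above can be sketched the same way. This is a minimal illustration, assuming a hypothetical `UserAttributes` record and a made-up tenant rule that appends the first segment of the tenant name to every scope (so `crm.write` becomes `crm.write:acme` for `acme-inc`); real attribute names and tenant formats depend on your own user model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class UserAttributes:
    """Hypothetical user profile; field names mirror the examples above."""
    department: Optional[str] = None
    subscription: Optional[str] = None
    tenant: Optional[str] = None
    base_scopes: set[str] = field(default_factory=set)


def attribute_scopes(user: UserAttributes) -> set[str]:
    """Add attribute-driven scopes, then apply an illustrative tenant suffix."""
    scopes = set(user.base_scopes)
    if user.department == "sales":
        scopes.add("pipeline.update")
    if user.subscription == "pro":
        scopes.add("analytics.export")
    if user.tenant:
        # Made-up rule: "acme-inc" -> "acme", then crm.write -> crm.write:acme.
        tenant_key = user.tenant.split("-")[0]
        scopes = {f"{scope}:{tenant_key}" for scope in scopes}
    return scopes


if __name__ == "__main__":
    user = UserAttributes(department="sales", subscription="pro",
                          tenant="acme-inc", base_scopes={"crm.write"})
    print(sorted(attribute_scopes(user)))
```

Because the rules run when the token is issued, the agent never needs to know which department or plan the user is on; it only sees the resulting scopes.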

However, simply defining the right scopes is only half the battle. The real challenge lies in ensuring that each token is issued with only the minimum necessary scopes for the specific task at hand. If a token carries more access than needed—even if the scopes themselves are well-defined—it increases the risk surface and violates the principle of least privilege.

That’s where progressive scoping comes in.

Vague scope requirements and Agent Experience (AX)

Most APIs today define their required scopes in ways that frustrate humans and confuse machines—especially AI agents handling tasks on behalf of users. When an agent hits a scoping obstacle, it negatively impacts Agent Experience, or AX. Optimizing for AX is crucial because it creates a shorter path from user prompt to task completion.

Even when an API’s scopes are defined in human-readable documentation (often they aren’t), several challenges remain:

Developers and tools can’t programmatically discover which scopes they need for a specific endpoint.

Access errors are ambiguous; a 403 Forbidden response doesn’t always include missing scope details.

Developers over-scope by default, requesting every permission to avoid errors—which is a security risk if tokens are compromised.

OAuth 2.0 does define an insufficient_scope error and an optional scope attribute for reporting missing scopes (RFC 6750), but implementations vary, and AX-friendly scope definitions are still rare.

How OAuth flow failures impact AX

Imagine a user tasks their agent with a workflow that invokes your API. The agent requests a token with default scopes, makes an API call, and gets a 403 response because it lacks access to the /contacts/export endpoint.

The agent must:

Struggle to interpret a vague error message

Prompt the user to reconnect with broader permissions

Retry the request, with friction and delay already added to the workflow

This reactive pattern is inefficient and creates a poor agent and user experience—not a reputation you want your API to earn in an increasingly AX-friendly marketplace.

The progressive scoping advantage

When scope requirements are discoverable up front based on intent, agents request only the necessary permissions per tool or feature.

User experience improves because people aren’t bombarded with consent prompts for irrelevant scopes. Security tightens because agents aren’t granted excessive permissions, which would make a stolen token far more damaging. Meanwhile, AX improves because the agent can carry out its workflow without stopping to ask the user about unclear scoping issues.

Minimized permission grants: Only strictly necessary access is requested (or granted)

Fewer consent prompts: AI agents don’t ask users to approve scopes the task doesn’t need

Improved agent compatibility: AI tools and orchestrators can understand and request access intelligently

Clear error recovery: If access fails, the missing scopes are explicit

Improving OAuth error handling

The OAuth 2.1 draft specification states that when a token lacks the necessary scopes, the resource server (your API) should respond with a 403 Forbidden status. In addition, recent guidance in the MCP specification (SEP-835) clarifies that servers should include details about the missing scopes in a WWW-Authenticate header.

This allows clients and agents to react dynamically instead of failing blindly. The header should include:

error="insufficient_scope": signals the authorization failure type

scope="...": lists the scopes required for the current operation (recommended to include both the already-granted scopes and the newly required scopes so clients don’t lose permissions they already had)

resource_metadata: URI pointing to the Protected Resource Metadata document

error_description (optional): a human-readable explanation

```http
HTTP/1.1 403 Forbidden
WWW-Authenticate: Bearer error="insufficient_scope",
    scope="files:read files:write user:profile",
    resource_metadata="https://api.example.com/.well-known/oauth-protected-resource",
    error_description="Additional file write permission required"
```

OAuth-based error handling flow

```text
[Agent / Tool] → Request token → [Token Manager, e.g. Descope]
[Token Manager] → Return token → [Agent]
[Agent] → API call → [API Server]
[API Server] → 403 Forbidden + (Optional) WWW-Authenticate header with required scopes
[Agent] → Prompt user to reconnect with new scopes
[Token Manager] → Issue new token → [Agent]
[Agent] → Retry call → [API Server]
```

However, many implementations fail today because:

Many APIs still omit the scope parameter or provide vague error messages.

Inconsistent formats make it hard for tools to parse scope requirements.

Without structured responses, developers over-request broad permissions, which weakens security.
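For a sense of what clients have to do with a well-formed header, the following Python sketch parses a single `Bearer` challenge and pulls out the required scopes. It is deliberately simplified: it assumes one challenge per header and handles only `key="quoted"` and `key=token` parameters, so treat it as an illustration of the format rather than a complete RFC-compliant parser. The function names are hypothetical.

```python
import re

# Matches key="quoted value" or key=token pairs inside a challenge.
_PARAM = re.compile(r'([A-Za-z_][A-Za-z0-9_-]*)=(?:"((?:[^"\\]|\\.)*)"|([^\s,"]+))')


def parse_bearer_challenge(header: str) -> dict[str, str]:
    """Parse one Bearer challenge from a WWW-Authenticate header value.

    Simplified: assumes a single challenge and ignores any other schemes.
    """
    scheme, _, rest = header.strip().partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError(f"unsupported auth scheme: {scheme!r}")
    params: dict[str, str] = {}
    for match in _PARAM.finditer(rest):
        key, quoted, token = match.groups()
        value = quoted if quoted is not None else token
        # Undo backslash escapes used inside quoted strings.
        params[key] = re.sub(r"\\(.)", r"\1", value)
    return params


def required_scopes(header: str) -> set[str]:
    """Return the space-delimited scope list of an insufficient_scope error, else an empty set."""
    params = parse_bearer_challenge(header)
    if params.get("error") != "insufficient_scope":
        return set()
    return set(params.get("scope", "").split())


if __name__ == "__main__":
    header = ('Bearer error="insufficient_scope", '
              'scope="files:read files:write user:profile", '
              'resource_metadata="https://api.example.com/.well-known/oauth-protected-resource", '
              'error_description="Additional file write permission required"')
    print(sorted(required_scopes(header)))
```

Every API that deviates from this shape (scopes only in a JSON body, comma-separated instead of space-separated, or missing entirely) forces clients to special-case it, which is exactly the inconsistency described above.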

If you’re building APIs with OAuth, you should always return the missing scopes in a consistent, machine-readable format. This makes it possible for agents and token managers to automatically request the correct permissions, avoiding guesswork or over-scoping.

While progressive scoping defined in OpenAPI specs or tool metadata remains the most scalable way to declare scope requirements in advance, proper 403 + scope responses at runtime are critical for step-up authorization and better agent experience (AX).
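Step-up authorization on the client then reduces to a small loop. In this sketch the `call_api` and `request_token` callables are hypothetical stand-ins: the first performs the API request and reports the scopes named in any 403 challenge, the second represents re-consent and token issuance through a token manager such as Descope. The loop caps the number of step-ups so a misbehaving server cannot trap the agent in a retry cycle.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Token:
    value: str
    scopes: frozenset[str]


@dataclass(frozen=True)
class ApiResult:
    status: int
    # Scopes named in the WWW-Authenticate `scope` parameter of a 403, if any.
    challenge_scopes: Optional[frozenset[str]] = None


def call_with_step_up(
    token: Token,
    call_api: Callable[[Token], ApiResult],
    request_token: Callable[[frozenset[str]], Token],
    max_step_ups: int = 1,
) -> tuple[ApiResult, Token]:
    """Call the API; on a 403 that names scopes, request a broader token and retry."""
    result = call_api(token)
    step_ups = 0
    while result.status == 403 and result.challenge_scopes and step_ups < max_step_ups:
        # Keep existing grants and add whatever the server asked for.
        wanted = token.scopes | result.challenge_scopes
        if wanted == token.scopes:
            break  # nothing new to ask for; a retry would fail the same way
        token = request_token(wanted)  # stand-in for consent + issuance
        result = call_api(token)
        step_ups += 1
    return result, token
```

When the server omits the `scope` parameter, the loop gives up immediately rather than guessing, which is the failure mode that pushes developers toward over-scoping.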

Defining scopes in OpenAPI (recommended best practice)

While structured error responses make scope recovery possible at runtime, the best experience for both developers and AI agents is to avoid trial-and-error entirely. The recommended way to achieve this is by embedding scope requirements directly in your OpenAPI specification.

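```yaml
# "OAuth2" must also be declared under components.securitySchemes (omitted here).
paths:
  /users:
    get:
      summary: Get all users
      security:
        - OAuth2:
            - read:users
  /users/{userId}:
    get:
      summary: Get a specific user
      security:
        - OAuth2:
            - read:users
            # Illustrative only: OpenAPI treats scope names as literal strings
            # and does not substitute path parameters such as {userId}.
            - read:user:{userId}
      parameters:
        - name: userId
          in: path
          required: true
          schema:
            type: string
```

By defining scopes in your OpenAPI spec: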

Agents, SDKs, and token managers can discover required scopes up front, without waiting for a failure.

AI agents can request just the necessary scopes per tool or endpoint.

User consent flows become clearer, since people are only asked for permissions relevant to their intended task.
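As an illustration of up-front discovery, this Python sketch walks an OpenAPI document (already parsed into a dict, for example with a YAML library) and maps each operation to the scopes its `OAuth2` requirement lists. It assumes the scheme name `OAuth2` from the example above and, for brevity, takes the first security requirement that mentions it, even though OpenAPI treats multiple entries as alternatives. `scopes_by_operation` is a hypothetical helper name.

```python
from typing import Any

# Operation keys allowed in an OpenAPI Path Item; other keys (e.g. `parameters`) are skipped.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def scopes_by_operation(spec: dict[str, Any], scheme: str = "OAuth2") -> dict[tuple[str, str], set[str]]:
    """Map (METHOD, path) to the scopes that `scheme` requires for that operation."""
    default_security = spec.get("security", [])
    result: dict[tuple[str, str], set[str]] = {}
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue
            # Operation-level `security` overrides the document-level default.
            for requirement in operation.get("security", default_security):
                if scheme in requirement:
                    result[(method.upper(), path)] = set(requirement[scheme])
                    break  # simplification: first matching alternative wins
    return result


# The YAML example above, as it would look after parsing.
SPEC = {
    "paths": {
        "/users": {
            "get": {"summary": "Get all users", "security": [{"OAuth2": ["read:users"]}]},
        },
        "/users/{userId}": {
            "get": {
                "summary": "Get a specific user",
                "security": [{"OAuth2": ["read:users", "read:user:{userId}"]}],
                "parameters": [{"name": "userId", "in": "path", "required": True,
                                "schema": {"type": "string"}}],
            },
        },
    },
}

if __name__ == "__main__":
    for (method, path), scopes in scopes_by_operation(SPEC).items():
        print(method, path, sorted(scopes))
```

An agent or token manager can run this once per spec and look up the scopes for a planned call before requesting a token, instead of discovering them through a 403.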

Tool-based architectures (e.g. MCP)

For applications using Model Context Protocol (MCP) or similar agent-to-tool orchestration models, this concept extends even further.

Each tool should define its own set of scopes—just like each endpoint.

For example:

A CRM sync tool might need: contacts.read, pipeline.write

A document summarizer might need: docs.read, docs.write

A calendar manager might need: calendar.read, calendar.create

This ensures tokens are scoped not just to endpoints, but to tool-level behavior.

The MCP authorization specification recommends that tools advertise their capabilities, including their required scopes, so that agents can automatically coordinate consent and token acquisition. This allows AI agents to operate safely and predictably on behalf of users.

Example: Multi-tool progressive scoping with caching

Let’s say you’re building an orchestrator that uses OpenAI’s function calling or Anthropic’s tool use to route commands like:

“Send a follow-up email and update the CRM.”

The orchestrator maps this to tools:

```json
{
  "tools": {
    "EmailSender": {
      "endpoint": "/email/send",
      "scopes": ["email.send"]
    },
    "CRMUpdater": {
      "endpoint": "/crm/update",
      "scopes": ["crm.write"]
    }
  }
}
```

These scope mappings can be fetched once from the OpenAPI specs of each tool (MCP server or otherwise) and cached to improve performance and reduce latency.
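A minimal sketch of that cache, assuming a hypothetical `fetch` callable that returns the tool-to-scopes map (for instance by reading each tool's OpenAPI spec). Entries are refreshed after a fixed TTL so that scope changes on the tool side eventually propagate; the one-hour default is an arbitrary choice, and `ScopeCache` is an illustrative name.

```python
import time
from typing import Callable, Optional

ToolScopes = dict[str, list[str]]


class ScopeCache:
    """Cache the tool -> scopes map, refetching only after `ttl_seconds`."""

    def __init__(self, fetch: Callable[[], ToolScopes], ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._data: Optional[ToolScopes] = None
        self._fetched_at = 0.0

    def scopes_for(self, tool: str) -> list[str]:
        now = self._clock()
        if self._data is None or now - self._fetched_at >= self._ttl:
            self._data = self._fetch()  # e.g. parse each tool's OpenAPI spec
            self._fetched_at = now
        try:
            return self._data[tool]
        except KeyError:
            raise KeyError(f"no scope definition cached for tool {tool!r}") from None
```

Injecting the clock keeps the expiry logic testable without sleeping.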

Progressive scoping flow with caching:

```text
[Agent / Orchestrator] → Fetch scope definitions → [Cached OpenAPI Spec]
[Agent] → Request token with correct scopes → [Descope]
[Descope] → Return token → [Agent]
[Agent] → Call tool with token → [Tool / API]
```

This avoids repeated scope discovery and reduces the need for trial-and-error authorization.
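The "request token with correct scopes" step can be sketched as follows for the email-and-CRM example. It derives an OAuth `scope` value either per tool (narrowest tokens) or as one union for the whole plan (fewer consent prompts, broader token), then shows how that value would appear in an authorization request query string. The `client_id` is a placeholder and the helper names are hypothetical; the exact token request depends on how your token manager is configured.

```python
from typing import Iterable
from urllib.parse import urlencode

# Tool map mirroring the JSON above.
TOOLS = {
    "EmailSender": {"endpoint": "/email/send", "scopes": ["email.send"]},
    "CRMUpdater": {"endpoint": "/crm/update", "scopes": ["crm.write"]},
}


def scope_param(scopes: Iterable[str]) -> str:
    """OAuth 2.0 `scope` values are space-delimited; sorting gives stable output."""
    return " ".join(sorted(set(scopes)))


def plan_token_requests(plan: list[str], tools: dict, per_tool: bool = True) -> list[tuple[str, str]]:
    """Return (label, scope) pairs: one per tool, or a single union for the whole plan."""
    missing = [tool for tool in plan if tool not in tools]
    if missing:
        raise KeyError(f"no scope definition for tools: {missing}")
    if per_tool:
        return [(tool, scope_param(tools[tool]["scopes"])) for tool in plan]
    union = [scope for tool in plan for scope in tools[tool]["scopes"]]
    return [("+".join(plan), scope_param(union))]


if __name__ == "__main__":
    # "Send a follow-up email and update the CRM."
    plan = ["EmailSender", "CRMUpdater"]
    for label, scope in plan_token_requests(plan, TOOLS):
        # Placeholder client_id; the authorization endpoint depends on your setup.
        query = urlencode({"response_type": "code", "client_id": "example-orchestrator", "scope": scope})
        print(label, query)
```

Per-tool tokens follow least privilege most strictly; the union trades a somewhat broader token for a single consent step, which may be acceptable when the plan is short and the user approves it as a whole.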

Token storage & management with Descope

Once you know the required scopes, you need a secure way to manage the tokens. With Descope Outbound Apps, you can:

Store multiple tokens per user or tenant, each with different scopes

Retrieve the appropriate token based on tool or endpoint

Automatically refresh tokens as needed

Query tokens from your backend using the Management SDK

You can even store tokens at a tenant level, allowing shared access for a group of users without requiring individual consent for each one.
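The lookup side of this can be illustrated with a small in-memory model. This is not the Descope Management SDK; `TokenStore` and `StoredToken` are hypothetical types that show one reasonable selection rule: prefer the user's own tokens, fall back to a tenant-level token, and among tokens that cover the required scopes pick the one with the fewest scopes. Expiry and refresh, which the text says Descope handles, are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StoredToken:
    owner: str              # hypothetical key format: "user:<id>" or "tenant:<id>"
    scopes: frozenset[str]
    access_token: str


class TokenStore:
    """In-memory stand-in for a token manager's per-user and per-tenant storage."""

    def __init__(self) -> None:
        self._tokens: dict[str, list[StoredToken]] = {}

    def put(self, token: StoredToken) -> None:
        self._tokens.setdefault(token.owner, []).append(token)

    def find(self, user_id: str, tenant_id: Optional[str], required: set[str]) -> Optional[StoredToken]:
        """Return the narrowest token covering `required`, preferring the user's own tokens."""
        owners = [f"user:{user_id}"] + ([f"tenant:{tenant_id}"] if tenant_id else [])
        for owner in owners:
            candidates = [t for t in self._tokens.get(owner, []) if required <= t.scopes]
            if candidates:
                # Fewest scopes = least privilege among tokens that still work.
                return min(candidates, key=lambda t: len(t.scopes))
        return None
```

Choosing the narrowest covering token means a broad token stored for one workflow is not silently reused for a task that needs far less.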

This approach works seamlessly with AI tools, orchestrators, and MCP clients. You never need to manage token persistence or refresh logic yourself—Descope handles it all.

Preparing your APIs for the users of tomorrow

Protecting your APIs has never been more critical. With the advent of autonomous agents, now is the time to think critically and surgically about how your APIs interact with future users, both human and machine. Creating a secure foundation for your APIs is essential for security, but it’s also a key ingredient in supporting the AI agents of tomorrow.

By adopting progressive scoping and embedding scope definitions into your OpenAPI specs, you enable:

Safer permission boundaries

Better consent experiences

Smarter and more efficient AI agent behavior

Combine this with Descope as your token manager, and you’ll have a scalable architecture that works across tools, APIs, and users. Descope's external IAM supports a wide range of agentic scenarios, offering a complete authentication and authorization solution for AI systems, without your developers needing to become OAuth experts.

Preparing your API for the AI landscape of tomorrow? Learn more about Descope’s agentic auth solutions on our microsite, catch up on AI Launch Week, or connect with our friendly dev community on Slack, AuthTown.