This tutorial was written by Manish Hatwalne, a developer with a knack for demystifying complex concepts and translating "geek speak" into everyday language. Visit Manish's website to see more of his work!

If you're a tech professional, you've likely used LLM apps—like ChatGPT, Claude, or Gemini—to write code, generate documentation, or summarize technical content. AI agents, however, have only recently started appearing on our tech radar.

AI agents represent a shift from reactive chat tools to proactive, goal-oriented systems that handle real-world tasks end to end. Agents are not just a UI layer; they are a new architecture. Building them means rethinking how software can plan, adapt, and operate semi-independently. As the AI agent ecosystem has grown over the past year, multiple frameworks and platforms have emerged. Some take a code-first approach for developers, while others offer visual, no-code, or low-code builders.

This article explores two categories of AI agent builders: code-first frameworks like LangChain, AutoGen (which also has a limited visual interface), and CrewAI, and no-code/low-code platforms including Botpress, Gumloop, and FlowiseAI. Vertex AI Agent Builder offers a no-code interface alongside its code-first Agent Development Kit (ADK).

These seven agent builders are evaluated based on how they handle the following task:

Build an AI-powered task manager that accepts natural language commands (eg "Remind me to buy milk tomorrow"), creates actionable tasks, stores them, and allows retrieval.

In the context of this task, the following agent builders are evaluated based on their ease of use, flexibility and robustness, performance, integrations and ecosystem, community and documentation, and pricing:

LangChain

AutoGen

CrewAI

Botpress

Gumloop

FlowiseAI

Vertex AI Agent Builder

1. LangChain AI agent builder

LangChain doesn't offer a standalone agent builder tool, but it's a flexible, powerful framework for building agents in code. It's a Python-based library that provides building blocks like chains, agents, tools, memory, and retrieval. Agents can be built programmatically using factory functions such as create_tool_calling_agent() and create_react_agent().

LangChain approach and developer experience

LangChain uses a code-first approach for building agents, which typically involves using the trio of LLM model, tools, and prompt.

Here's a Python code snippet that creates a simple AI task manager using LangChain:

```python
import os
from datetime import datetime

from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.tools import tool
from langchain_google_genai import ChatGoogleGenerativeAI

# Assumption: the API key is read from the GOOGLE_API_KEY environment variable
api_key = os.environ["GOOGLE_API_KEY"]

# In-memory task store shared by the tools below
tasks = []

# Initialize LLM model
llm = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash",
    temperature=0.25,
    google_api_key=api_key
)

# Define tools
@tool
def add_task(task_description: str) -> str:
    """Add a task given a natural language instruction."""
    task = {
        'id': len(tasks) + 1,
        'description': task_description,
        'created_at': datetime.now().date().isoformat()
    }
    tasks.append(task)
    return f"Task added successfully. Task ID: {task['id']}."

@tool
def list_tasks() -> str:
    """List all stored tasks."""
    if not tasks:
        return "No tasks found."
    task_list = "\n".join([
        f"ID: {task['id']}, Description: {task['description']}, Created: {task['created_at']}."
        for task in tasks
    ])
    return task_list

# List of available tools
tools = [add_task, list_tasks]

# Create prompt for task-management agent
prompt = ChatPromptTemplate.from_messages([
    ("system", """You are a task management assistant with access to these tools:
1. add_task: Creates a new task
2. list_tasks: Displays all existing tasks

Instructions:
- When a user wants to add a task (containing action items, deadlines, priorities, etc.), use the add_task tool
- When a user wants to view their tasks, use the list_tasks tool
- For non-task inputs, respond with: "Well, I'm just a simple task agent—I can't handle that one."
- Always confirm successful actions with clear, friendly feedback

Remember to interpret the user's intent and respond accordingly, whether they use direct commands or natural language requests."""),
    ("user", "{input}"),
    MessagesPlaceholder("agent_scratchpad"),
])

# Create agent with LLM model, available tools and custom prompt
agent = create_tool_calling_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# CLI demo
if __name__ == "__main__":
    print("LangChain Task Manager (type 'exit' to quit)")
    while True:
        user_input = input("-->> ").strip()
        if user_input.lower() == "exit":
            print("Bye...")
            break
        result = agent_executor.invoke({"input": user_input})
        print(result["output"])
```

This task manager agent is created using an LLM model (Gemini), the tools (add_task() and list_tasks()), and a customized prompt.
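To make the model, tools, and prompt trio more concrete, here is a minimal, library-free sketch of the kind of loop an agent executor runs: ask the model, run whichever tool it requests, feed the result back, and stop once the model answers in plain text. This is not LangChain's implementation. `fake_llm`, the message dictionaries, and `run_agent` are hypothetical stand-ins so the example runs without an API key.

```python
from typing import Callable

# In-memory task store and two plain-function tools (simplified from the article's tools)
tasks: list[dict] = []

def add_task(task_description: str) -> str:
    tasks.append({"id": len(tasks) + 1, "description": task_description})
    return f"Task added successfully. Task ID: {len(tasks)}."

def list_tasks() -> str:
    if not tasks:
        return "No tasks found."
    return "\n".join(f"ID: {t['id']}, Description: {t['description']}" for t in tasks)

TOOLS: dict[str, Callable[..., str]] = {"add_task": add_task, "list_tasks": list_tasks}

def fake_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for a real model: requests a tool, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result is available, so produce the final answer
        return {"type": "final", "content": f"Done. {last['content']}"}
    text = last["content"].lower()
    if "show" in text or "list" in text:
        return {"type": "tool_call", "name": "list_tasks", "args": {}}
    return {"type": "tool_call", "name": "add_task",
            "args": {"task_description": last["content"]}}

def run_agent(user_input: str, llm=fake_llm, max_steps: int = 5) -> str:
    """Loop: call the model, execute any requested tool, feed the result back."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = llm(messages)
        if reply["type"] == "final":
            return reply["content"]
        result = TOOLS[reply["name"]](**reply["args"])
        messages.append({"role": "tool", "content": result})
    return "Stopped: step limit reached."
```

Calling `run_agent("Call mom tomorrow")` and then `run_agent("Show my tasks")` walks through both tools. The `max_steps` cap is a design choice in this sketch to keep a misbehaving model from looping forever.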

The following is a sample conversation with this agent:

```
(venv) $ python langchain_agent.py
LangChain Task Manager (type 'exit' to quit)
-->> There is no milk at home, I must get it in the evening.
OK, I've added it to your task list. You need to get milk in the evening.
-->> Remind me to call mom tomorrow.
OK, I've added "Call mom tomorrow" to your task list.
-->> I need to confirm my dentist appointment this Friday.
OK, I've added "Confirm dentist appointment this Friday." to your task list!
-->> Which is the best AI agent builder currently?
Well, I'm just a simple task agent—I can't handle that one.
-->> What do I have on my plate this week?
OK. This week, you have to: Get milk in the evening, Call mom tomorrow, and Confirm dentist appointment this Friday.
-->> Show my tasks, please
OK. Here are your tasks: ID: 1, Description: Get milk in the evening, Created: 2025-05-13.
ID: 2, Description: Call mom tomorrow, Created: 2025-05-13.
ID: 3, Description: Confirm dentist appointment this Friday., Created: 2025-05-13.
-->> exit
Bye...
```

This output shows that the agent can comfortably add relevant tasks to your task list based on simple, conversational English. It also responds with an "I can't handle that" message when the input is not related to tasks. It's important that you add guardrails for your AI agent to prevent its misuse.
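The prompt-level refusal used by this agent is one guardrail layer. A cheap check that runs before the model is called can be another. The following is a minimal sketch of such a check, not a LangChain feature. `check_input`, `MAX_INPUT_CHARS`, and `BLOCKED_PATTERNS` are hypothetical names, and the limit and patterns are illustrative assumptions rather than a vetted security policy.

```python
import re

MAX_INPUT_CHARS = 500  # hypothetical limit; tune for your use case

# Hypothetical patterns that often signal prompt-injection attempts
BLOCKED_PATTERNS = [
    re.compile(r"ignore (all|any|previous|the above) .*instructions", re.IGNORECASE),
    re.compile(r"system prompt", re.IGNORECASE),
]

def check_input(user_input: str) -> tuple[bool, str]:
    """Return (allowed, reason). Intended to run before input reaches the agent."""
    text = user_input.strip()
    if not text:
        return False, "empty input"
    if len(text) > MAX_INPUT_CHARS:
        return False, "input too long"
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            return False, "blocked pattern"
    return True, "ok"
```

In a CLI loop like the one above, you could call `check_input(user_input)` first and only pass the input on to the agent when the first element of the result is `True`.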

The LangChain framework is comprehensive and has a significant learning curve, but it's ideal for experienced developers because it offers the highest level of flexibility and control for building AI agents.

Note: Currently, LangGraph is the recommended approach to building AI agents programmatically.

LangChain evaluation on benchmark task

Here is a closer look at LangChain based on the evaluation criteria:

Ease of use: Powerful Python-based framework with a steep learning curve. It uses multiple abstractions with an evolving API. Best for experienced programmers.

Flexibility and robustness: Highly flexible and model agnostic, LangChain supports both simple chains and complex multiagent setups. Developers have fine-grained control over agent behavior.

Performance: Delivers strong performance for both prototypes and production, provided chains and models are well optimized.

Integrations and ecosystem: Offers a rich ecosystem with over 600 integrations for vector databases, APIs, tools, and memory providers; supports major LLMs, such as OpenAI, Anthropic, and Gemini.

Community and documentation: The LangChain community includes over 4,000 contributors. Its main repo, langchain-ai/langchain, has more than 108,000 stars, 17,500 forks, and over 20 million monthly downloads. The official docs cover how-to guides and API references, and free courses are available via LangChain Academy.

Pricing: Open source and free to use. Costs depend on the LLMs and tools you integrate.

Best for: LangChain is best suited for experienced Python developers who want to build powerful, highly customized agents. It's a great choice when you want control and flexibility but not ideal if you're looking for quick UI-driven prototyping.

2. AutoGen AI agent builder

AutoGen by Microsoft is an open source Python framework for building AI agents. It also includes AutoGen Studio, a visual interface for designing agent workflows with minimal code. However, as of version 0.4.2.1, AutoGen Studio has limited features (for example, it lacks in-memory storage), so this article focuses on the Python framework.

AutoGen approach and developer experience

Much like LangChain, AutoGen allows you to build AI agents by specifying an LLM model, available tools, and a system prompt. Here's a code snippet for a task management agent using AutoGen:

```python
from autogen_agentchat.agents import AssistantAgent
from autogen_ext.models.openai import OpenAIChatCompletionClient

# Initialize LLM model (api_key holds your Gemini API key)
model = OpenAIChatCompletionClient(
    model="gemini-2.0-flash",
    temperature=0.25,
    api_key=api_key
)

# Define available tools
# (add_task and list_tasks are assumed to be plain Python functions
# with the same bodies as in the LangChain example)
tools = [add_task, list_tasks]

# Create a system prompt for the agent
system_prompt = """You are a task management assistant with access to these tools:
1. add_task: Creates a new task
2. list_tasks: Displays all existing tasks

Instructions:
- When a user wants to add a task (with action items, deadlines, or priorities), use the add_task tool.
- When a user wants to view their tasks, use the list_tasks tool.
- For unrelated inputs, reply with: "Well, I'm just a simple task agent—I can't handle that one."
- Always confirm successful actions with clear, friendly feedback.

Interpret the user's intent and respond appropriately, whether they use natural language or direct commands."""

# Create the AI agent
agent = AssistantAgent(name="assistant", model_client=model, tools=tools, system_message=system_prompt)
```

The prompt is the same one used for the LangChain agent earlier. However, AutoGen uses its own LLM wrapper, autogen_ext.models.openai.OpenAIChatCompletionClient—which supports OpenAI (default) or any other compatible API model—and autogen_agentchat.agents.AssistantAgent for creating different agents. Additionally, AutoGen uses Python's asyncio for running agents asynchronously.
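Because the agent is awaited rather than called directly, the surrounding CLI loop has to run inside an event loop. The sketch below shows that shape using only the standard library. `StubAgent` and its `run` method are hypothetical placeholders that return a plain string. AutoGen's own method signatures and return types should be checked against its documentation.

```python
import asyncio

class StubAgent:
    """Hypothetical stand-in for an async agent; a real agent would call an LLM."""

    async def run(self, task: str) -> str:
        await asyncio.sleep(0)  # yield control, as a network call would
        return f"Received: {task}"

async def handle_inputs(agent: StubAgent, inputs: list[str]) -> list[str]:
    """Process user inputs one at a time, awaiting each agent reply."""
    replies = []
    for text in inputs:
        if text.lower() == "exit":
            break
        replies.append(await agent.run(task=text))
    return replies

def main() -> list[str]:
    # asyncio.run() creates the event loop and runs the coroutine to completion
    return asyncio.run(handle_inputs(StubAgent(), ["Call mom tomorrow", "exit", "ignored"]))
```

Here the inputs come from a fixed list to keep the example deterministic. An interactive version would read each line with `input()` inside the loop.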

Here's this task manager in action:

```
(venv) $ python autogen_agent.py
AutoGen Task Manager (type 'exit' to quit)
-->> There is no milk at home, I must get it in the evening.
Task added successfully. Task ID: 1.
-->> Remind me to call mom tomorrow.
Task added successfully. Task ID: 2.
-->> What do I have on my plate this week?
ID: 1, Description: Get milk in the evening, Created: 2025-05-14.
ID: 2, Description: Call mom tomorrow, Created: 2025-05-14.
```

While this AutoGen agent works fine, the confirmation messages aren't as friendly as they are with the LangChain agent, even though the same prompt and LLM are used. However, they are more useful for structured output, where you need to specify the exact format for the output.
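One practical benefit of a fixed output format is that downstream code can parse it reliably. As a minimal sketch, the following turns task lines in the `ID: ..., Description: ..., Created: ...` format shown above into dictionaries. `parse_task_lines` and `TASK_LINE` are hypothetical names introduced for this example.

```python
import re
from datetime import date

# Matches lines like: "ID: 1, Description: Get milk, Created: 2025-05-14."
TASK_LINE = re.compile(
    r"^ID: (?P<id>\d+), Description: (?P<description>.+), "
    r"Created: (?P<created>\d{4}-\d{2}-\d{2})\.$"
)

def parse_task_lines(output: str) -> list[dict]:
    """Parse task lines into dicts, skipping anything that is not a task line."""
    parsed = []
    for line in output.splitlines():
        match = TASK_LINE.match(line.strip())
        if match is None:
            continue
        parsed.append({
            "id": int(match["id"]),
            "description": match["description"],
            "created": date.fromisoformat(match["created"]),
        })
    return parsed
```

Free-form confirmations such as "OK, I've added it to your task list" would not match this pattern, which is the trade-off between friendly and structured responses.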

AutoGen evaluation on benchmark task

See how AutoGen actually fares on the evaluation criteria:

Ease of use: Its Python-based framework is capable but comes with its own learning curve. Although it's not as exhaustive and complex as LangChain, it still requires good Python coding skills and a knowledge of AutoGen concepts and abstractions.

Flexibility and robustness: Supports multiagent workflows and offers fine control through code. Primarily supports OpenAI models, with limited support for other API-compatible LLMs.

Performance: Performs well with efficient agents and lightweight models. Real-time or storage-heavy tasks may require optimization.

Integrations and ecosystem: Extensible but lacks the broad integration support across diverse tools found in frameworks like LangChain. External API calls and tool integrations are possible using a code-first approach.

Community and documentation: The AutoGen community is growing and active, with the main microsoft/autogen GitHub repository at over 44,000 stars and 6,800 forks. It has more than 14,000 members on Discord and recorded over 87,000 downloads by May 2025. Documentation is fairly detailed but leans more toward advanced, multiagent use cases.

Pricing: Open source and free to use. Costs depend on the LLMs and external services used.

Best for: AutoGen is best suited for quickly prototyping multiagent workflows where agents collaborate through structured conversations. It simplifies coordinated agent setups with minimal configuration and is especially useful for handling tool use without complex wiring. While less flexible than LangChain, being a Microsoft product, it is likely to offer smoother integrations with its own Azure ecosystem.

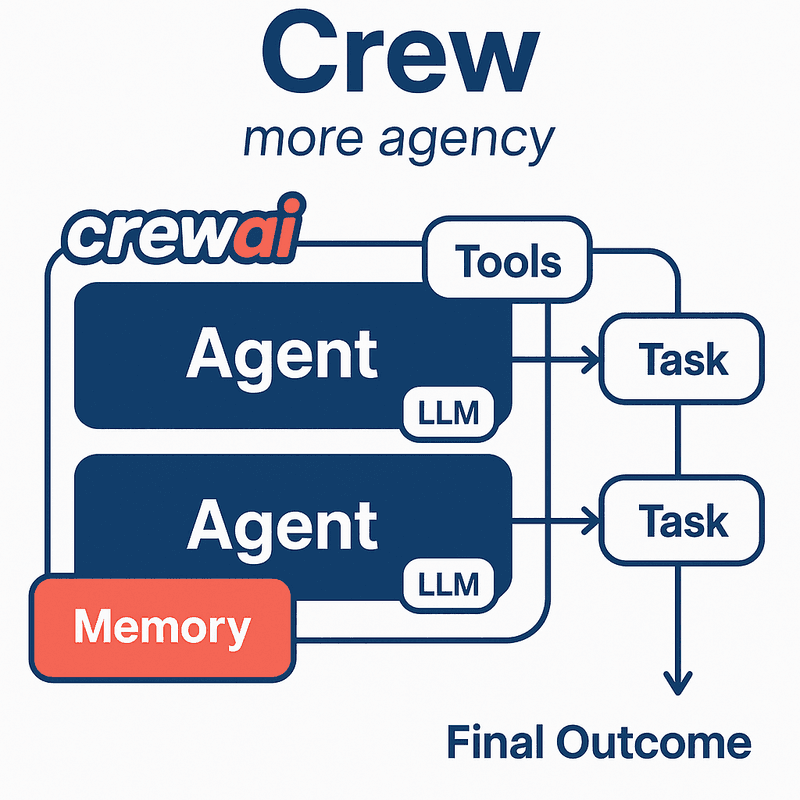

3. CrewAI agent builder

CrewAI is a lightweight Python framework for building multiagent systems. It introduces the concept of a "crew": a team of agents, each with a defined role, goal, and optional tools. Agents collaborate by passing tasks and context to each other, functioning like a coordinated human team. Unlike AutoGen's message-based approach, CrewAI uses structured workflows with clear agent handoffs. This workflow is illustrated in the diagram below:

Note: CrewAI's enterprise version includes the visual UI Studio for building multiagent systems without writing code.

CrewAI approach and developer experience

CrewAI is built for multiagent coordination. "Agents" (LLM-powered AI workers) use "tools" (eg search or custom functions) to complete specific "tasks." These agents operate within a crew that manages their interactions and execution flow using a defined "process," either step by step (where processes orchestrate the execution of tasks by agents with a predefined strategy) or via a manager agent (an agent designated as manager_agent in a crew to manage other agents). Individual prompts guide each agent's reasoning, enabling the team to work toward shared goals.
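The step-by-step process can be pictured as a pipeline in which each task's output becomes context for the next. The following plain-Python sketch illustrates that idea only and is not CrewAI's API. `SimpleAgent`, `SimpleTask`, and `run_sequential` are hypothetical names, and the "agents" here are ordinary functions rather than LLM calls.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class SimpleAgent:
    role: str
    work: Callable[[str, str], str]  # (task description, context) -> output

@dataclass
class SimpleTask:
    description: str
    agent: SimpleAgent

def run_sequential(task_list: list[SimpleTask]) -> str:
    """Run tasks in order, handing each output to the next task as context."""
    context = ""
    for task in task_list:
        context = task.agent.work(task.description, context)
    return context

# Two toy agents: one extracts a task, the next formats a confirmation
extractor = SimpleAgent(
    "Extractor",
    lambda desc, ctx: desc.removeprefix("Remind me to ").rstrip("."),
)
confirmer = SimpleAgent("Confirmer", lambda desc, ctx: f"Added task: {ctx}")

pipeline = [
    SimpleTask("Remind me to call mom tomorrow.", extractor),
    SimpleTask("Confirm the extracted task to the user", confirmer),
]
```

Calling `run_sequential(pipeline)` passes the extractor's result to the confirmer. A manager-style process would instead have one agent decide which task runs next.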

CrewAI supports building agents purely in Python, but it encourages defining agents, tasks, and prompts in separate YAML files for cleaner orchestration. A simple agent definition might look like this:

```yaml
# ./taskmanager_agent.yaml
task_manager:
  role: "Task Management Assistant"
  goal: "Process natural language commands for task management"
  backstory: "Specialized in interpreting user requests to add/list tasks."
  llm: "gemini/gemini-2.0-flash"
  verbose: true
```

To build an AI task manager, you'd define custom tools such as AddTaskTool and ListTasksTool (extended from CrewAI's BaseTool), create an agent from the YAML, and assign it to a task with a user input and expected output. A crew then ties together the agents, tasks, and execution flow in Python code:

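```python
import yaml
from crewai import Agent, Crew, Task

def load_yaml_config(path: str) -> dict:
    """Assumed helper (not shown in the original): parse a YAML file into a dict."""
    with open(path) as f:
        return yaml.safe_load(f)

agents_config = load_yaml_config("./taskmanager_agent.yaml")

# Create agent from configuration, LLM model, and tools
# (tools is the list of custom tool instances described above; definitions not shown)
task_agent = Agent(config=agents_config['task_manager'], tools=tools, use_system_prompt=True)

# Task to handle user input (single-agent crew)
def process_command(user_input: str) -> str:
    task = Task(
        description=user_input,
        agent=task_agent,
        expected_output="Processed task command or error message. For non-task related input, respond with: 'Well, I'm just a simple task agent—I can't handle that one.'"
    )
    crew = Crew(agents=[task_agent], tasks=[task])
    result = crew.kickoff()
    # kickoff() returns a result object; convert it to text to match the return type
    return str(result)
```

This config-driven approach is modular and especially helpful for multiagent systems. But for a simple task manager, it feels like cutting a lemon with a sword.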

CrewAI evaluation on benchmark task

Here's a more critical look at the CrewAI framework based on the evaluation criteria:

Ease of use: It's an open source framework that requires Python knowledge and follows a YAML-based configuration approach, which adds setup complexity but improves organization for multiagent systems.

Flexibility and robustness: Supports multiple LLMs and offers a strong architecture for building structured, multiagent workflows.

Performance: Performs well for structured workflows and multiagent systems.

Integration capabilities and ecosystem: Supports its own tools, LangChain tools, custom integrations, and even Amazon Bedrock agents. The CrewAI framework reportedly powers more than 60 million agents monthly.

Community and documentation: CrewAI's main crewAIInc/crewAI GitHub repository has over 31,000 stars and 4,300 forks, with more than 900,000 downloads by May 2025. It also has good documentation with AI-powered search, a growing community, and a DeepLearning.AI course focused on multiagent systems.

Pricing: The framework is free and open source. Enterprise features are paid, as are LLM or tool usage costs.

Best for: CrewAI is best suited for building role-based multiagent systems where each agent has a defined responsibility and works within a structured workflow. It excels in use cases like research assistants, code reviewers, or job-posting agents. It's a great choice for quickly prototyping modular, extensible multiagent setups in Python.

4. Botpress agent builder

Botpress is a low-code platform designed for building conversational AI agents and chatbots. It combines a visual flow editor with support for natural language understanding (NLU), custom actions, and integrations.

Botpress approach and developer experience

To build an AI task manager in Botpress, you need to use its visual studio to design a workflow. This includes an AI node to understand user intent (like adding or listing tasks) and branching logic to handle each case. The process is intuitive—you primarily connect functional blocks rather than dealing with complex NLP setups or heavy coding.

Botpress simplifies natural language handling and lets you script task actions (like "Add task" or "List task") using JavaScript. For knowledge-based agents, you can plug in tables, websites, Notion, or other data sources and choose your preferred LLM in settings.

While Botpress doesn't require deep coding skills, you'll need to get familiar with its interface and know some JavaScript to create custom actions. The studio is powerful enough to build and deploy complex agents and workflows.

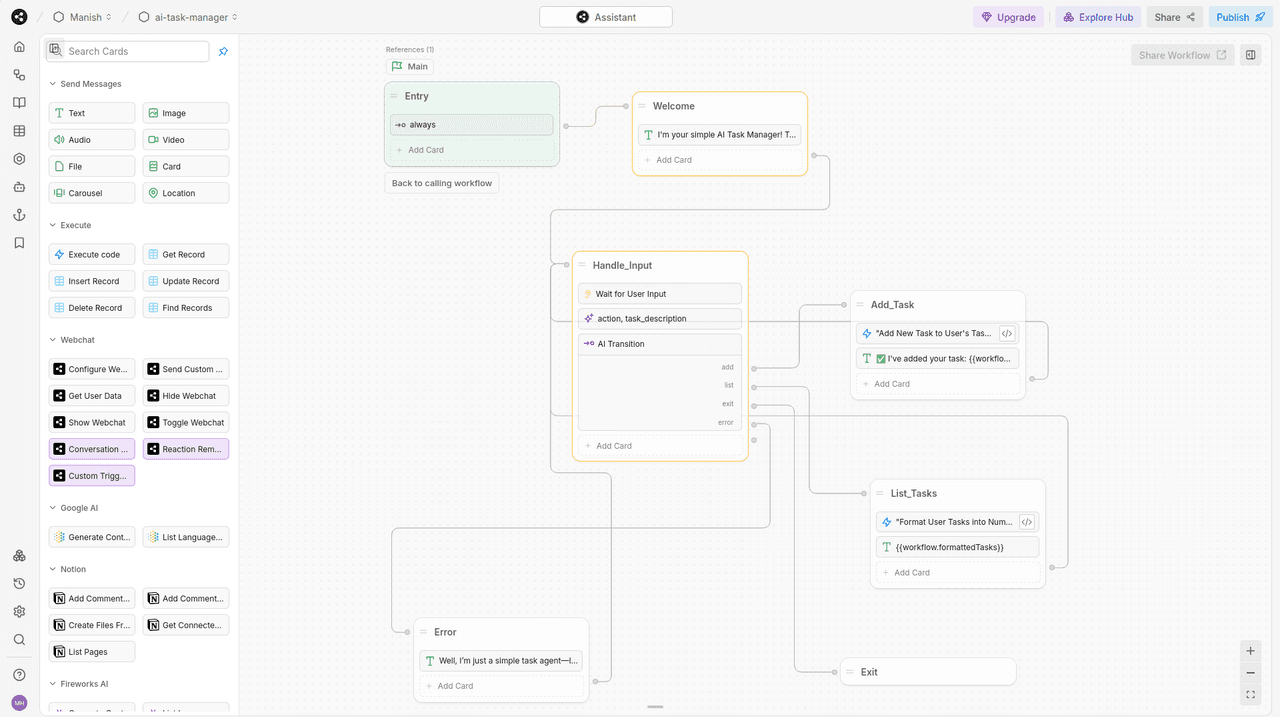

See this Botpress workflow for creating a task manager:

In this workflow, the AI-powered input node Handle_Input receives user input, interprets the intent, and fulfills it using either the Add_Task or List_Tasks action node. It also has an Error node for handling non-task-related inputs.

In action, the agent can extract a relevant task from natural language conversation, confirm it with friendly feedback, list current tasks, or even respond with an "I can't handle that" message for any non-task-related input:

You can learn more about building Botpress agents here.

Botpress evaluation on benchmark task

Let's see how Botpress ranks on the evaluation criteria:

Ease of use: Easy to get started with, especially for common use cases like customer support bots. Its visual builder has a short learning curve and is intuitive even for noncoders. JavaScript actions add flexibility, and built-in tools can be integrated easily.

Flexibility and robustness: Ideal for structured workflows and chatbot-style agents. You can pick different LLMs and knowledge bases, but handling complex logic, vague inputs, or memory-heavy tasks can be challenging.

Performance: Studio UI is powerful but can lag in the browser as workflows grow. Deployed bots perform reliably for chat-style tasks but may face challenges for complex agents.

Integration capabilities and ecosystem: Offers over 150 integrations, including Slack, WhatsApp, and Telegram, though it's not as extensive or agent-focused as LangChain. The platform is evolving, with new integrations being added.

Community and documentation: The Botpress community is growing, with over 25,000 members on Discord and reports of hundreds of thousands of bots in production, serving over half a million users in May 2025. It also has good documentation, tutorials, and a helpful in-studio "AI assistant." Each node comes with video guidance, making the learning process easier.

Pricing: The free tier includes limited LLM credits. Paid plans differ by workspace limits (eg messages your bot can receive and total collaborators) and AI spend (eg LLM usage and text to audio).

Best for: Botpress is suitable for building structured, conversational bots with clear user flows, such as customer support or internal assistants. It's more chatbot-focused than agent-focused, making it a good fit if you want a visual, low-code platform with minimal scripting. It's not suitable for multiagent systems or more complex goals.

5. Gumloop agent builder

Gumloop is another simple, low-code agent builder focused on helping users visually create and deploy AI agents by connecting LLMs, tools, APIs, and logic blocks. It offers a drag-and-drop canvas similar to flowchart builders, allowing users to define how an agent should process inputs, call functions, and respond.

Gumloop approach and developer experience

Building AI agents with Gumloop's visual studio and built-in assistant, Ask Gummie, is very straightforward. It's the simplest visual builder in this roundup. You design workflows by connecting nodes, such as input/output blocks, AI prompts, and JavaScript functions. The AI node handles natural language parsing via custom prompts, while function nodes manage operations like "add task" or "list tasks." Gumloop abstracts away NLP complexity, so there is no need for external AI services, just well-crafted prompts.

Workflow branching uses if-else blocks based on extracted keywords (eg "add task"). The Gumloop visual builder works well for simple prototypes with little or no coding. However, as logic grows (eg task tracking, ambiguous inputs, or multistep LLM tasks), the canvas can get cluttered. Gumloop is best for agents of moderate complexity. Developers with basic scripting skills will find it approachable, though they will need to learn Gumloop's conventions for state and flow control.
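The keyword-based branching described above can be approximated in a few lines. The sketch below is written in Python for consistency with the earlier examples and is not Gumloop code. It assumes, hypothetically, that the AI node emits text in a `keyword: detail` format, and `route` and the branch names are made up for illustration.

```python
def route(ai_output: str) -> tuple[str, str]:
    """Split 'keyword: detail' from an AI node and choose a workflow branch."""
    keyword, _, detail = ai_output.partition(":")
    keyword = keyword.strip().lower()
    detail = detail.strip()
    if keyword == "add task":
        return "add_task_branch", detail
    elif keyword == "list tasks":
        return "list_tasks_branch", ""
    else:
        # Anything unrecognized falls through to a catch-all branch
        return "fallback_branch", ai_output.strip()
```

Every new intent adds another branch to this chain, which mirrors how a visual canvas becomes cluttered as the logic grows.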

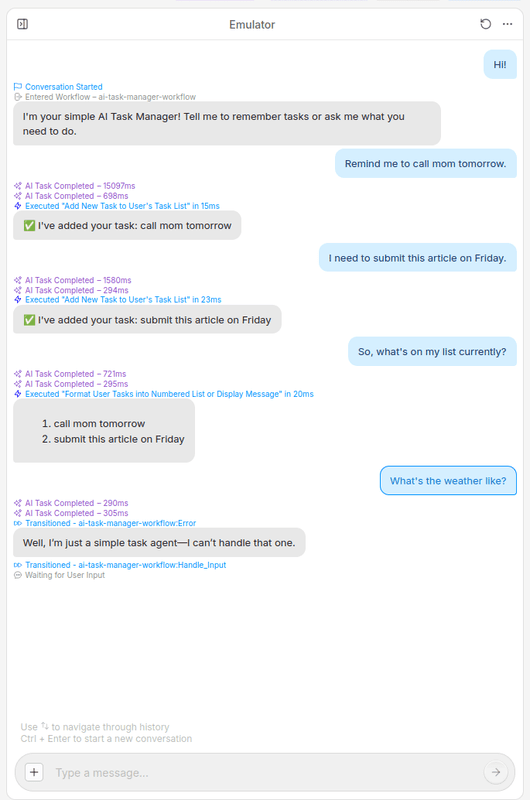

The following is an example "add task" workflow that extracts a task from natural input using an AI node:

For natural language input like There is no milk at home, I must get it in the evening., this workflow correctly extracts Buy milk in the evening.

You can explore more in their introductory guide.

Gumloop evaluation on benchmark task

Here is a careful look at Gumloop based on the evaluation criteria:

Ease of use: Simple and beginner-friendly. Its visual builder and Ask Gummie assistant make it easy to create prototypes with little or no code, even when you're using tools like web search.

Flexibility and robustness: Good for rapid prototyping and moderate workflows. You can customize logic with JavaScript function nodes, but advanced control is limited. Only OpenAI models are supported currently.

Performance: The builder is smooth for small workflows but can become cluttered and slow with complex agents. Agents built with it are fast for basic tasks but may struggle with more complex workloads.

Integration capabilities and ecosystem: Supports popular services like Slack, Airtable, Notion, Supabase, and Google services. Webhooks can be used to extend functionality, but building complex workflows can be challenging.

Community and documentation: The platform is relatively small, but it's growing. Documentation is clean but limited for advanced scenarios. While its user-base number isn't publicly available, it has a community forum for support.

Pricing: Offers a free tier for experimentation and paid plans with more credits, features, and support.

Best for: Gumloop is ideal for noncoders who want to prototype and iterate quickly on agent workflows. It's simpler and more affordable than Botpress, making it a great choice for internal tools, demo agents, or user-facing assistants where speed and ease are more important than deep customization.

6. FlowiseAI agent builder

FlowiseAI is an open source, low-code platform built around LangChain concepts. It provides a visual node-based editor where developers can create AI agents and pipelines by connecting prebuilt components like LLMs, tools, memory, and prompts.

FlowiseAI approach and developer experience

FlowiseAI provides a clean, intuitive visual interface and familiar abstractions (eg ToolAgent) for building different AI agent workflows.

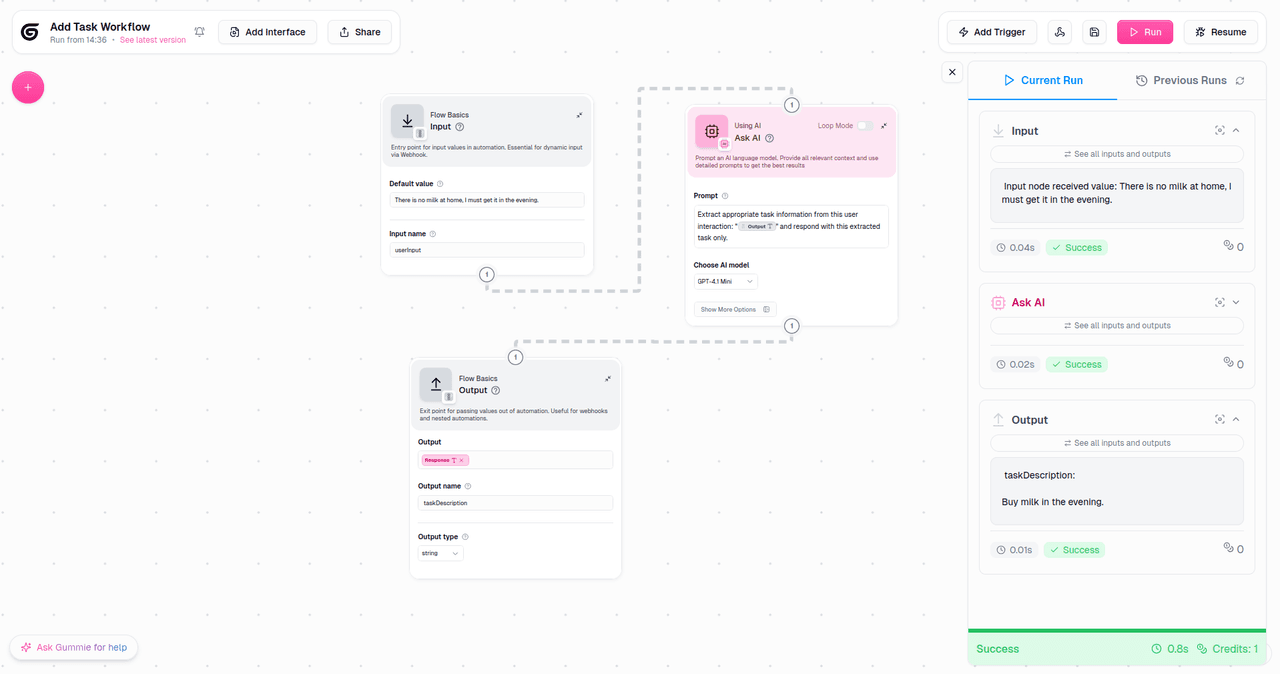

You can simply create a workflow for your agent (called "chatflow") by connecting functional nodes, such as preconfigured agents (eg ToolAgent), LLM models, and tools. The process typically starts with a chatflow input node to handle user input, followed by an LLM node (using an OpenAI, Gemini, or Anthropic model) to interpret natural language commands. You then connect tool nodes that run custom JavaScript functions (eg adding or retrieving user tasks). See the sample workflow below for a visual reference.

FlowiseAI combines the flexibility of LangChain with a low-code visual builder, allowing you to create language-driven agents without managing complex infrastructure. It automatically handles communication between LLMs and tools (prebuilt and custom), so you can focus on business logic, not intricate backend plumbing.

Its visual canvas remains comparatively cleaner due to fewer nodes (better abstractions) and custom tools/functions that offer much better control.

Below is a sample chatflow (workflow of the agent) for building an AI task manager:

The FlowiseAI canvas is much cleaner compared to Botpress for the same use case, and it provides a sample agent interaction on the right-hand side. You can explore more in their official documentation.

FlowiseAI evaluation on benchmark task

Here is how FlowiseAI fares on the evaluation criteria:

Ease of use: Reasonably beginner-friendly, with an intuitive node-based design, prebuilt agents, and tools. For the evaluation task, the benchmark agent took about one-third of the time to build that it took in Botpress. Developers familiar with LangChain will find it especially productive. Local setup is possible via Docker.

Flexibility and robustness: Among visual agent builders, FlowiseAI offers the highest flexibility. You can extend workflows with code nodes, external APIs, or LangChain components.

Performance: For simple agents like task managers, performance is solid. More complex flows with multiple LLM calls can introduce latency. The visual builder remains smooth for small to medium workflows but may slow down with large, complex setups.

Integrations and ecosystem: Supports multiple LLM models, vector databases, and external APIs. You can also create custom tools. FlowiseAI reports that it powers over a hundred LLMs, embeddings, and vector databases.

Community and documentation: The FlowiseAI community is substantial, with its main GitHub repository showing over 38,000 stars and 20,000 forks. The documentation is comprehensive, covering numerous integrations, including popular LLM providers (eg OpenAI, Anthropic, Google, Ollama, and Mistral AI); a wide array of document loaders (eg Airtable, Confluence, and Figma); and various tools (eg Tavily), as well as integrations with monitoring platforms, like Prometheus and Grafana. Community support is available via Discord.

Pricing: Free and open source and supports self-hosting. The cloud version has free and paid tiers. The free tier also requires your own LLM API key.

Best for: FlowiseAI is ideal for developers who want to build LLM workflows visually without sacrificing flexibility. It's great for prototyping, internal tools, or small agent systems that may later be migrated to LangChain code. If you prefer structured logic over starting from scratch in Python, Flowise is a great option.

Unlike Gumloop or Botpress, it also handles multiagent systems more cleanly and efficiently.

7. Vertex AI agent builder

Vertex AI Agent Builder is Google Cloud's low-code platform for building and deploying conversational agents through a web-based interface. It lets you visually design LLM-powered workflows by linking user inputs, function calls ("tools"), and model responses. You can also connect custom APIs and use system instructions for added control.

Vertex AI approach and developer experience

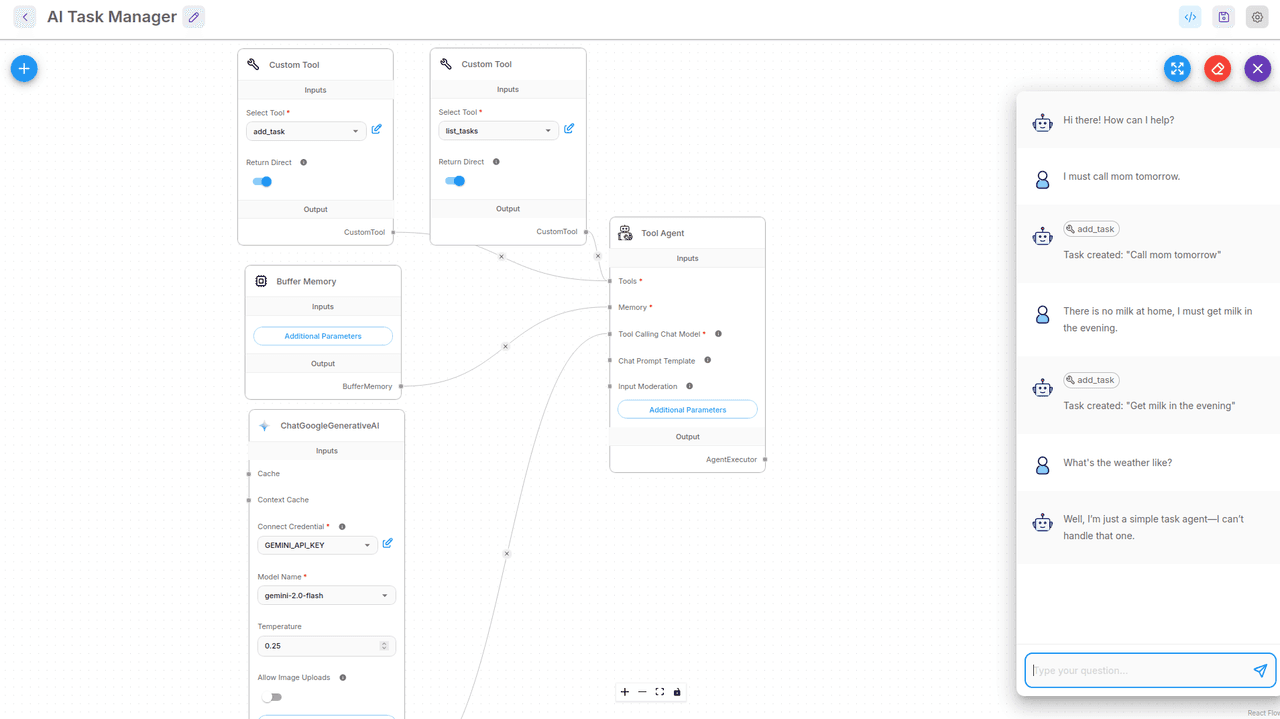

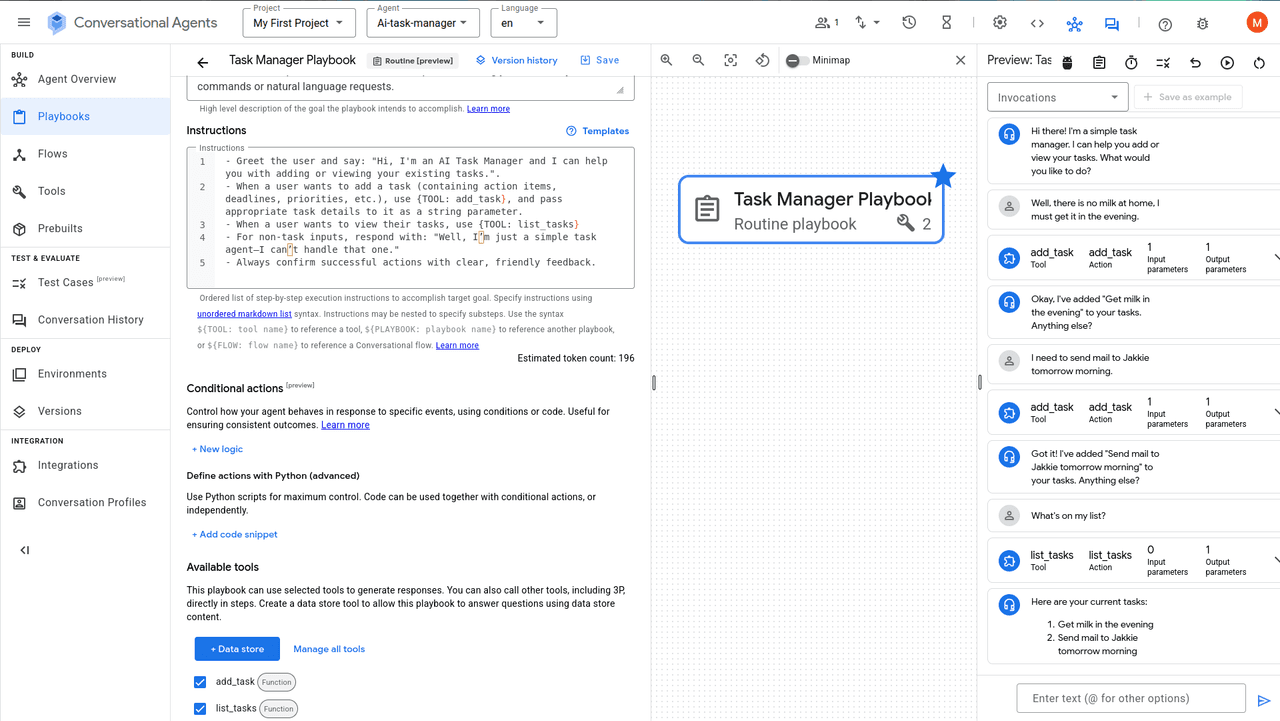

Vertex AI's agent builder is powerful but can leave you feeling like "coder Alice lost in GCP wonderland" due to its complex interface and multiple settings. However, once you're familiar with it, building an AI agent is fairly straightforward using its playbook-based approach. A playbook defines how the agent handles natural language input, processes it step-by-step using tools (like add_task and list_tasks), and returns structured responses. Tools can be custom functions, OpenAPI-compliant APIs, or connected data stores.
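When a tool is exposed as an OpenAPI-compliant API, it is described by a schema document. As a rough sketch, here is what a minimal OpenAPI 3.0 description of the two task tools might look like, built as a Python dictionary and serialized to JSON. The server URL, the `/tasks` path, and the operation IDs are hypothetical, and the exact fields Vertex AI expects should be confirmed in Google's documentation.

```python
import json

def build_task_api_spec(server_url: str) -> dict:
    """Return a minimal OpenAPI 3.0 document describing add/list task endpoints."""
    return {
        "openapi": "3.0.0",
        "info": {"title": "Task Manager API", "version": "1.0.0"},
        "servers": [{"url": server_url}],
        "paths": {
            "/tasks": {
                "post": {
                    "operationId": "add_task",
                    "summary": "Create a new task",
                    "requestBody": {
                        "required": True,
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "properties": {
                                        "task_description": {"type": "string"}
                                    },
                                    "required": ["task_description"],
                                }
                            }
                        },
                    },
                    "responses": {"200": {"description": "Task created"}},
                },
                "get": {
                    "operationId": "list_tasks",
                    "summary": "List all stored tasks",
                    "responses": {"200": {"description": "List of tasks"}},
                },
            }
        },
    }

# Hypothetical URL; replace with wherever your task API is hosted
spec_json = json.dumps(build_task_api_spec("https://example.com/api"), indent=2)
```

The `operationId` and `summary` values matter most in this setting, since they are the human-readable hints an agent can use to decide which endpoint fits a request.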

Vertex keeps the canvas clean (e.g., in the screenshot below, two tools are embedded directly within the agent) and includes a conversation preview panel that shows how the agent responds in different scenarios. It abstracts away NLP complexity while allowing precise control via conditions, flows, and tool configurations. Simple agents require little to no coding.

As you can see in the screenshot, the task manager playbook appears on the left (with selected custom tools at the bottom), the canvas is centered, and the agent interaction preview (including tool calls) is shown on the right.

You can explore Google's official guide to learn more.

Note: For full programmatic control, Google also offers the Vertex Agent Development Kit (ADK) to build agents in Python.

Vertex AI evaluation on benchmark task

Here is how Vertex AI fares on the evaluation criteria:

Ease of use: Overwhelming interface, but building AI agents is relatively straightforward. The agent's preview feature is especially useful for debugging, clearly showing tool calls, parameters, and outputs.

Flexibility and robustness: Handles structured workflows, branching logic, and contextual responses well. However, the visual builder is limited to Google's Gemini models. For more control or external integrations, you'll need the Agent Development Kit (ADK) and external tools. Less flexible than FlowiseAI but has better security features.

Performance: Highly responsive and scalable on Google Cloud. Model/API latency may vary, but overall performance is good. The playbook-based canvas stays clean and manageable, even for complex agents.

Integrations and ecosystem: Strong integration with its own Google Cloud services (eg Gemini, BigQuery, and Cloud Storage). Third-party integrations are possible but require setup via Google Cloud tooling.

Community and documentation: The GoogleCloudPlatform/generative-ai repository, containing notebooks and code samples for generative AI on Google Cloud with Vertex AI, has over 10,600 stars and 3,000 forks. It has extensive official documentation, Google Cloud support, and community forums. However, as with many large Google products, documentation and examples are scattered across official platforms—such as Cloud docs, GitHub, blogs, and forums—making it harder to quickly find what you need.

Pricing: No extra cost for the builder itself; you pay only for the GCP services (eg model usage, cloud functions, or storage). There is a free tier for testing and experimentation, but you'll need a credit card to activate it.

Best for: Vertex AI Agent Builder is suitable for quickly prototyping Gemini-powered agents within the Google Cloud ecosystem, especially if you're already using GCP. It suits use cases with playbook-based (LLM) logic, minimal coding, and reliable infrastructure deployment. For complex workflows, the Vertex ADK offers more flexibility.

Comparing the 7 agent builders at a glance

Here's an overview of all the tools compared in this roundup:

| Agent Builder | Ease of Use | Flexibility & Robustness | Performance | Integrations & Ecosystem | Community & Docs | Pricing | Best For |
|---|---|---|---|---|---|---|---|
| LangChain (Code-First) | Powerful but steep learning curve | Highly flexible, model-agnostic | Fast | Vast integrations (APIs, DBs, tools) | Occasionally outdated docs | Free | Complex, customizable agent workflows |
| AutoGen (Code-First/Studio) | Studio is basic; framework has moderate learning curve | Multiagent, OpenAI-compliant LLM models | Reliable when built with code | Limited ecosystem, integrations via functions | Decent docs, growing community | Free | Multiagent coordination, LLM-to-LLM workflows |
| CrewAI (Code-First/Studio) | YAML-based components, moderate learning curve (Enterprise studio available) | Multiple LLM and multiagent support | Fast and reliable | Own tools and LangChain tools | Good documentation with AI-based search | Free and paid Enterprise plan | Role-based multiagent systems |
| Botpress (Visual Builder) | Intuitive interface, easy to set up and deploy | Multiple LLM and knowledge-base support | Responsive for typical bots | Decent set of integrations via actions and APIs | Good documentation with AI assistant | Free trial and paid tiers | Knowledge-based bots |
| Gumloop (Visual Builder) | Simple and beginner-friendly | Limited flexibility, only supports OpenAI models | Fast for simple workflow agents | Decent set of integrations | Limited documentation | Free and paid tiers | Rapid prototyping for nontechies |
| FlowiseAI (Visual Builder) | Intuitive abstractions, beginner-friendly | Most flexible: multiple LLM models, tools | Fast for demo as well as production | Vector DBs, LangChain tools, APIs | Comprehensive docs, helpful Discord community | Free, self-hosting, and paid cloud tiers | Prototyping small-scale agent systems |
| Vertex AI (Visual Builder/Code-First ADK) | Visual builder: intuitive low-code UI for structured workflows | Only Gemini models in UI mode, offers code-first ADK | Fast, secure infrastructure | Strong GCP ecosystem support | Dense documentation, difficult to locate the correct one at times | Free trial and pay as you go (GCP rates) | Enterprise-grade agents using structured workflows on GCP |

Conclusion

This roundup explored seven agent-building platforms ranging from powerful code-first frameworks to simple visual builders.

LangChain and CrewAI are code-first frameworks that offer full control over prompts, memory, and multiagent workflows, while AutoGen (from Microsoft) adds multiagent orchestration with an optional but limited visual builder.

Among the low-code visual builders, Botpress and Gumloop are well suited for quickly building simple drag-and-drop chatbots and workflows with minimal coding. FlowiseAI offers a visual editor with LangChain-style abstractions, allowing integration of different LLM models and custom tools. Finally, Vertex AI Agent Builder (by Google Cloud) offers a low-code interface (also a code-first ADK), suitable for teams already invested in GCP who need scalable agents with native Google service integrations.

The world of agent builders is changing and growing rapidly. You should start small, test often, and keep an eye on new tools and updates as the space evolves.