
This tutorial was written by Kumar Harsh, a software developer and devrel enthusiast who loves writing about the latest web technology. Visit Kumar's website to see more of his work!

AI has quietly slipped into our developer toolchains, and now it's writing our boilerplate, reviewing our pull requests, and occasionally hallucinating imports from alternate dimensions. What started as autocomplete on steroids has quickly grown into full-blown coding assistants that understand context, generate functions, refactor legacy code, and even explain what you were thinking six months ago.

This three-part series is putting the biggest AI assistants to the test: Microsoft Copilot, ChatGPT, Claude, and Gemini. Part one pitted Claude against ChatGPT and part two compared Gemini against ChatGPT. This third and final part of the series compares ChatGPT and Microsoft Copilot—two of the most widely used assistants in real-world development. You'll see where each shines, where they fumble, and which one might deserve a permanent spot in your IDE.

If you've ever wondered which one actually helps you ship apps faster (and which one just politely pretends to understand your tech stack), this is for you.

Microsoft Copilot

Microsoft Copilot is a suite of AI-powered assistants integrated across Microsoft's ecosystem, including Microsoft 365, Windows, and GitHub. Created with the objective of enhancing productivity, Copilot assists users in tasks ranging from drafting emails and summarizing documents to writing and debugging code. It uses advanced language models to provide context-aware suggestions and automate routine tasks.

Here are some key features of the Microsoft Copilot suite:

Integration with Microsoft 365: Copilot is embedded within applications like Word, Excel, and PowerPoint, enabling users to generate content, analyze data, and create presentations through natural language prompts.

GitHub Copilot: Built for developers, this tool offers code suggestions, autocompletion, and even entire function generation within code editors like Visual Studio Code.

Windows integration: Copilot is accessible directly from the Windows taskbar, providing users with quick assistance across various tasks.

AI agents: At the 2025 Build conference, Microsoft introduced AI agents capable of handling delegated tasks, further enhancing Copilot's capabilities.

Microsoft Copilot operates by combining large language models with Microsoft's proprietary data and services. It utilizes Microsoft Graph to access user data and context, ensuring personalized and relevant responses. For instance, in Microsoft 365 applications, Copilot can analyze a user's documents and emails to provide tailored suggestions. GitHub Copilot leverages OpenAI's Codex model to understand and generate code snippets based on the context within the code editor.

Microsoft Copilot uses a combination of OpenAI's models, including GPT-4 and GPT-4-turbo, depending on the product and subscription tier. There's very limited publicly available information about the underlying models across the range of its Copilot offerings. GitHub Copilot Chat currently runs on GPT-4-turbo (internally referred to as "GPT-4" by Microsoft), with a 64,000-token context window, which is especially useful for understanding large codebases across multiple files.

However, Microsoft does not expose direct control over the model, and performance may vary across tools like Visual Studio, VS Code, and Microsoft 365 apps. Additionally, the actual usable context (in the case of GitHub Copilot) is mediated by GitHub's own AI layer and editor integrations. While enterprise users benefit from deeper Microsoft Graph integration for context-aware suggestions, they don't get transparency into the underlying model version or full token usage.

ChatGPT

ChatGPT is OpenAI's flagship conversational AI, widely adopted for coding, research, content creation, and data analysis. Since its public debut in November 2022, it has become nearly synonymous with consumer AI, supporting use cases across full-stack development, academic research, UX writing, and spreadsheet modeling. ChatGPT supports multiple models from OpenAI's model family, spanning natural language, vision, and audio capabilities.

As of May 2025, the default model in ChatGPT is GPT-4o ("omni"), a fast, multimodal model optimized for chat-based interactions across text, vision, and speech. However, OpenAI recently introduced GPT-4.1 and GPT-4.1-mini models on May 14, 2025. GPT-4.1 is specifically positioned as OpenAI's most capable coding model to date, designed for accuracy, long-context comprehension, and advanced instruction following.

Here are some key features of the ChatGPT ecosystem:

Enhanced coding capabilities: GPT-4.1 delivers state-of-the-art results in developer benchmarks like SWE-bench Verified, outperforming GPT-4o and GPT-4.5 in real-world coding tasks.

Improved instruction following: The model shows stronger performance on multistep tasks, nested logic, and prompt clarity, making it more predictable and reliable for developers.

Massive context window: GPT-4.1 is capable of operating with up to 1 million tokens of context. However, OpenAI's pricing page still lists a 32,000-token maximum for ChatGPT Plus users, limiting practical usage unless accessed via the API or enterprise plans.

Multimodal input support: GPT-4.1 retains image input capabilities, making it useful for code screenshots, diagrams, and UI mockups, although real-time vision support remains stronger in GPT-4o.

Under the hood, the models behind ChatGPT belong to OpenAI's GPT family and are refined through a combination of unsupervised pretraining, supervised fine-tuning, and reinforcement learning from human feedback (RLHF). While GPT-4.1 offers advanced performance, it still depends heavily on prompt clarity and conversational history for consistent results.

GPT-4.1's extended memory and strong instruction following make it ideal for writing, reviewing, and refactoring code across files. However, community reports suggest that the context limit isn't always consistent in practice, especially under heavy load or via third-party integrations.

General overview of the tools

Here's a quick overview of the tools:

Reasoning, problem-solving, and analytical skills: Microsoft Copilot performs well on structured business tasks and integrates reasoning within Microsoft 365 apps. ChatGPT (GPT-4o/4.1) is stronger at open-ended problem-solving and creative code synthesis, with more conversational flexibility and support for multistep reasoning.

Document analysis and summarization: Copilot excels at summarizing and editing Office documents, emails, and meeting notes using Microsoft Graph context. ChatGPT can handle a broader range of document types (PDFs, academic papers, and web pages) with its file-upload and retrieval-augmented capabilities.

Emotional intelligence and conversation style: ChatGPT is more conversational and emotionally nuanced, able to shift tone and style based on context. Copilot is more functional and task focused, though recent updates have added more natural language capabilities.

Real-time data and web access: Both tools support real-time browsing. Copilot uses Microsoft Search and Graph context; ChatGPT (with GPT-4o in Plus) has a built-in browser for live web lookups and plugin/API integrations.

Cost, access, and plans: ChatGPT has a free tier and a $20/month Plus plan with GPT-4o and full tool access. Microsoft Copilot offers enterprise licensing and Copilot Pro for $20/month (with GPT-4 access) but requires Microsoft 365 apps for full functionality.

Code generation quality

Similar to the comparison of Claude and ChatGPT in part one and Gemini and ChatGPT in part two, let's use two coding prompts—one for a frontend component and another for a backend script—to evaluate the coding abilities of the two.

These prompts will define requirements but also be vague intentionally in some aspects to see how (or if) the models address the missing parts on their own.

Frontend code generation

For the first test, let's start with a basic task: creating a frontend component in Astro. Here's the prompt for this test:

Create an Astro component that fetches a list of books from an API endpoint. For each book, display the title, author, and publication year. Format the publication year as a four-digit number (e.g., 2023). Show a loading indicator while fetching data, and display an error message if the API call fails. Ensure the component manages asynchronous data fetching and UI state transitions appropriately.

This prompt:

specifies a framework (Astro) rather than a plain JavaScript or React component (although the ideal solution for this problem should involve using a React-like component, so it would be nice to see if the AI bots can figure that out on their own);

requires fetching data from an API endpoint and handling the asynchronous nature of the request;

instructs on data formatting (publication year as a four-digit number);

includes UI states (loading indicator while fetching data and error handling with an error message); and

asks for multiple data fields per item (title, author, and publication year).

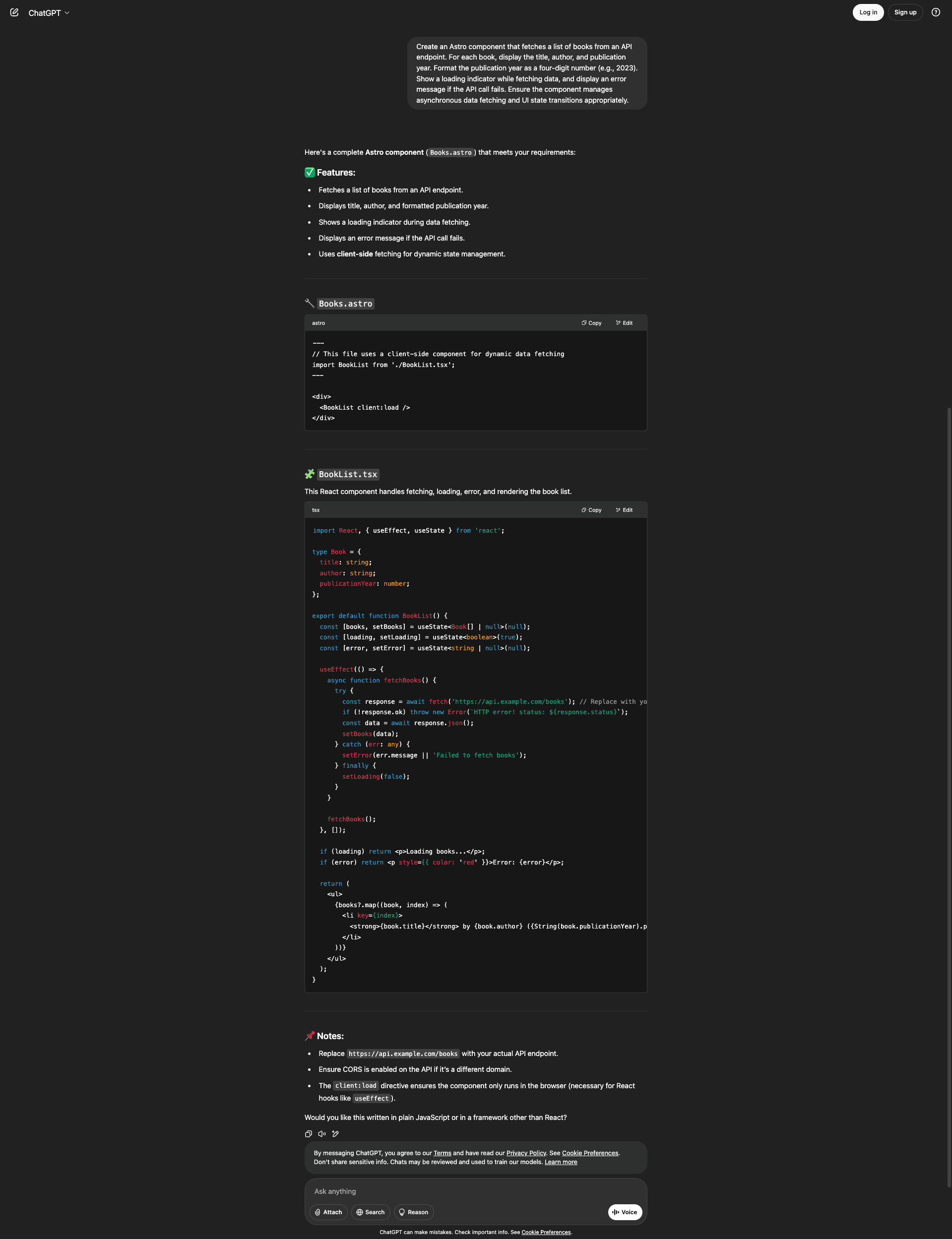

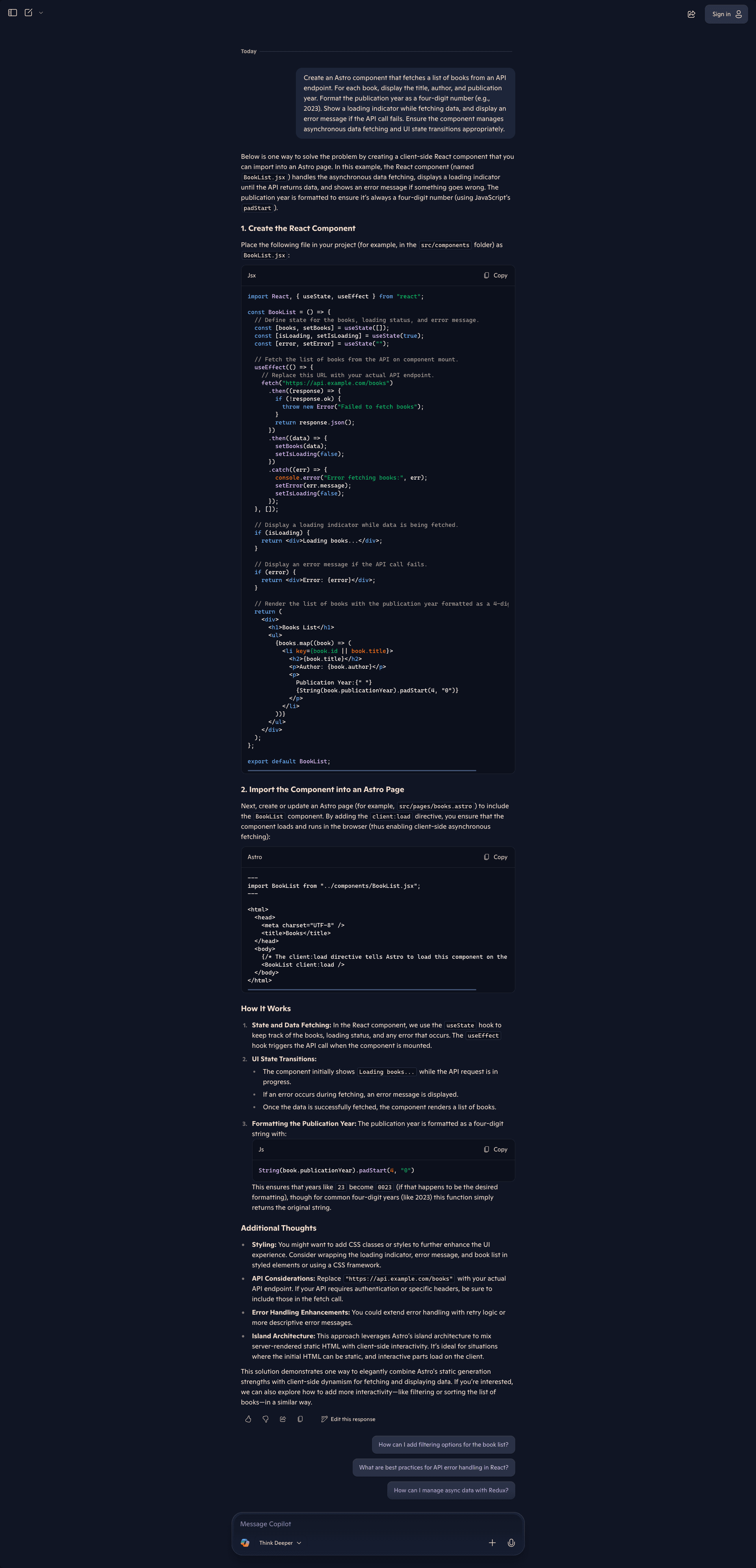

ChatGPT's free version responds with an Astro page, a React (TypeScript) component, and some instructions to implement the two in your Astro project:

Let's analyze the code returned by the prompt.

First impression: you cannot plug and play this component in your Astro app because it uses an imaginary backend URL (https://api.example.com/books). If you already have a backend ready to use at this point, you can plug its URL in and test this code. In other cases, you would need to redo the fetchBooks function to use a set of dummy values as books while you're developing the component. Because of this, you cannot exactly call it an "easy-to-use" placeholder for the external data source.
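One way to avoid rewriting fetchBooks during development is to make the data source swappable. The following is a minimal sketch, not the code ChatGPT returned: the `Book` shape, the `MOCK_BOOKS` values, and the `chooseFetcher` helper are all hypothetical names introduced for illustration.

```typescript
// Hypothetical shape of a book record; the field names are assumptions.
interface Book {
  title: string;
  author: string;
  year: number;
}

// Dummy values standing in for the API response during development.
const MOCK_BOOKS: Book[] = [
  { title: "Dune", author: "Frank Herbert", year: 1965 },
  { title: "Neuromancer", author: "William Gibson", year: 1984 },
];

// A fetcher is any function that resolves to a list of books.
type BookFetcher = () => Promise<Book[]>;

// Real fetcher: calls the endpoint and rejects on a non-2xx status.
function makeApiFetcher(url: string): BookFetcher {
  return async () => {
    const res = await fetch(url);
    if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
    return (await res.json()) as Book[];
  };
}

// Mock fetcher: resolves with the dummy values, no network involved.
const mockFetcher: BookFetcher = async () => MOCK_BOOKS;

// Pick the data source from a flag (for example, an environment variable).
function chooseFetcher(useMock: boolean, url: string): BookFetcher {
  return useMock ? mockFetcher : makeApiFetcher(url);
}
```

With this in place, the component can call whatever `chooseFetcher` returns and stay unchanged when the real backend becomes available.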

Next, all the mentioned properties are included, and the year has been padded using String.padStart(), which is a good practice. Finally, the loading state has been handled appropriately as well.
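As a rough illustration of the padding approach, a year formatter built on `String.prototype.padStart` might look like this. The function name `formatYear` is an assumption and is not taken from the generated response.

```typescript
// Pads a numeric year with leading zeros until it is four characters long.
function formatYear(year: number): string {
  return String(year).padStart(4, "0");
}
```

A year that already has four digits passes through unchanged, while a shorter one such as 987 becomes "0987".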

You'll also notice that the response contains instructions on how to use the component in Astro pages with the correct directives to ensure it behaves correctly as a client island.

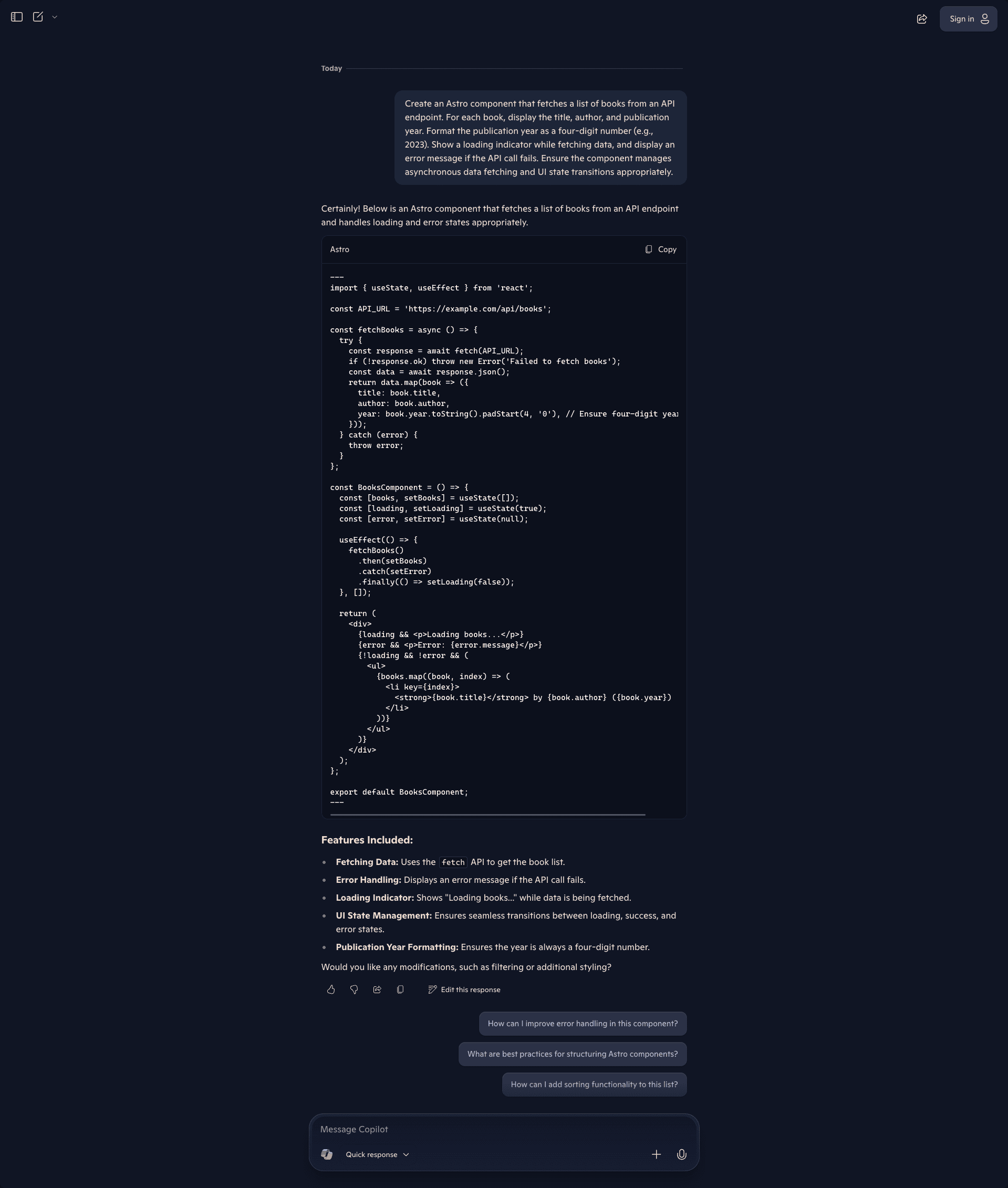

Now, let's try out Copilot and see how it handles the same task. Here's what Copilot responds with:

Copilot makes some unusual choices in its response. It prints out a React component and wraps it with --- to turn it into an Astro component script. This is not the right way to use React-based client islands in Astro and will most likely not run at all.

If you look at the generated React component, it is quite similar to ChatGPT's. This could stem from the fact that Copilot uses OpenAI's models internally. However, it clearly cuts corners to provide "quick responses."

Let's try an alternative to the Quick conversation mode: Think Deeper.

If you run the same prompt in the Think Deeper mode, you'll receive a more detailed response with a React component and instructions to include it in the Astro project in the correct way:

This time, Copilot seems to have figured out the right solution and implementation. The generated React component uses multiple conditional return statements, which is technically sound but not as readable as using one return statement. It's also more verbose with in-line comments, which can help beginners. Other than that, the component looks pretty similar to the one returned in the Quick mode.
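To make the readability trade-off concrete, here is a small sketch of the two styles. It returns strings rather than JSX so that it stays self-contained, and the `ViewState` type and both function names are hypothetical. Which style reads better is a matter of taste.

```typescript
// Hypothetical UI state; a real component would hold this in React state.
interface ViewState {
  loading: boolean;
  error: string | null;
  titles: string[];
}

// Style 1: multiple conditional returns, one early exit per state.
function renderWithEarlyReturns(state: ViewState): string {
  if (state.loading) return "Loading...";
  if (state.error) return `Error: ${state.error}`;
  return state.titles.join(", ");
}

// Style 2: a single return statement with a nested conditional expression.
function renderWithSingleReturn(state: ViewState): string {
  return state.loading
    ? "Loading..."
    : state.error
      ? `Error: ${state.error}`
      : state.titles.join(", ");
}
```

Both functions produce the same output for every state, so the choice between them affects only how the code reads.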

The real difference lies in how the response handles Astro. It contains detailed instructions on how to use this React component correctly in Astro, with an explanation of the why and suggested follow-ups for after this task. However, this seems to be too little too late: the result is not substantially better than ChatGPT's, despite the extra time and care needed to generate it.

On the whole, ChatGPT seems to give quicker, ready-to-use, more up-to-date code snippets, while Copilot needs more careful interaction and extra time in the Think Deeper mode to generate similar responses.

Backend code generation

Here's the prompt for the backend task:

Write a Fastify API endpoint in Node.js that accepts a POST request with a JSON payload containing a user's username, email, and password. The endpoint should validate the input, securely hash the password, and store the user details in a user database. Handle errors appropriately and ensure your implementation follows common security best practices.

This prompt:

specifies a backend framework (Fastify);

requires handling a POST request with a JSON payload containing multiple fields (username, email, and password);

instructs on input validation and secure password hashing (data processing and security);

includes database interaction for storing user details;

requires robust error handling, a reasonable but vague requirement; and

explicitly asks for adherence to common security best practices.

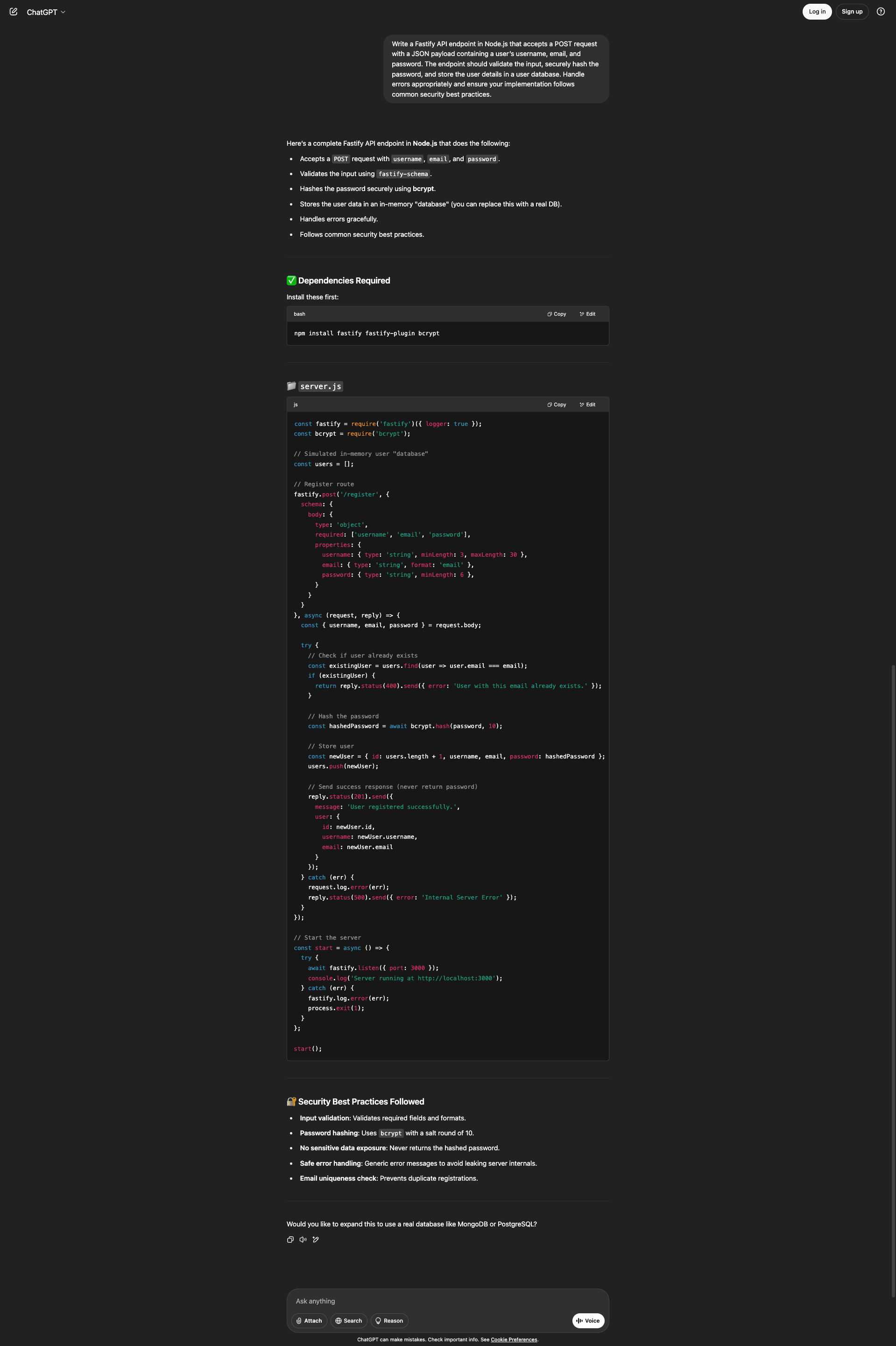

ChatGPT's free version responds with a concise, working code snippet:

The code snippet meets all of the requirements laid out in the prompt:

Handles POST request

Implements validations (uses Fastify's internal schema-based validation approach instead of adding third-party dependencies)

Stores the details in a dummy database (an in-memory array)

Catches errors and notifies users with a relevant (but still generic in most cases) message
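For readers unfamiliar with schema-based validation, the idea is to describe the expected request body as JSON Schema and let Fastify reject non-conforming payloads before the handler runs. The sketch below is an assumption about what such a schema could look like. The length limits, the `/users` path in the comment, and the constant names are illustrative and do not come from the generated code.

```typescript
// JSON Schema for the request body; the limits below are illustrative assumptions.
const createUserBodySchema = {
  type: "object",
  required: ["username", "email", "password"],
  additionalProperties: false,
  properties: {
    username: { type: "string", minLength: 3, maxLength: 30 },
    email: { type: "string", format: "email" },
    password: { type: "string", minLength: 8 },
  },
} as const;

// Route options in the shape Fastify accepts, for example:
// fastify.post("/users", createUserRouteOptions, handler)
const createUserRouteOptions = {
  schema: { body: createUserBodySchema },
};
```

Keeping the schema as plain data means it can be reused for documentation or client-side checks without pulling in a separate validation library.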

ChatGPT intuitively adds a check for preexisting users without the prompt specifying it, which is a good practice in the context of this prompt. The relevant error is handled appropriately. It also uses the bcrypt library correctly to generate password hashes before storing them in the database.
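The duplicate check and hash-before-store pattern can be sketched as follows. This is not the generated snippet: to stay dependency-free it swaps bcrypt for Node's built-in scrypt from `node:crypto`, and the `users` array, `registerUser`, and the 409 status code are assumptions made for illustration.

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

interface StoredUser {
  username: string;
  email: string;
  passwordHash: string; // "salt:hash", both hex encoded
}

// In-memory stand-in for a database table.
const users: StoredUser[] = [];

// Hash with a fresh random salt. scrypt is used here only to avoid a
// third-party dependency; the generated snippets discussed above use bcrypt.
function hashPassword(password: string): string {
  const salt = randomBytes(16);
  const hash = scryptSync(password, salt, 64);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

// Recompute the hash with the stored salt and compare in constant time.
function verifyPassword(password: string, stored: string): boolean {
  const [saltHex, hashHex] = stored.split(":");
  const expected = Buffer.from(hashHex, "hex");
  const actual = scryptSync(password, Buffer.from(saltHex, "hex"), expected.length);
  return timingSafeEqual(actual, expected);
}

type RegisterResult =
  | { ok: true }
  | { ok: false; status: number; message: string };

// Reject duplicates before hashing, then store only the hash.
function registerUser(username: string, email: string, password: string): RegisterResult {
  const taken = users.some((u) => u.username === username || u.email === email);
  if (taken) {
    return { ok: false, status: 409, message: "Username or email already in use" };
  }
  users.push({ username, email, passwordHash: hashPassword(password) });
  return { ok: true };
}
```

The plaintext password never reaches the store, and a second registration with the same username or email is rejected before any hashing work is done.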

This is a great starting point for a new API endpoint. However, no real security measures have been implemented in this code snippet, apart from adding a few headers (which are subject to your server and client implementation).

The output of Copilot's free version is on par with ChatGPT's:

The key difference is the use of an ORM client (Prisma), which may or may not be the one you're already using in your project, but that's nothing a second prompt can't fix. Input validation, the duplicate user check, and password hashing are all done appropriately.

In the backend task, both tools seem to perform similarly.

Troubleshooting capabilities

Debugging isn't something that can be meaningfully benchmarked without diving deep into a specific project's structure, tech stack, and quirks. It's inherently contextual—what works brilliantly in one environment might fall flat in another. As such, the observations below are based on real-world usage, developer feedback, and popular community sentiment.

Both Microsoft Copilot and ChatGPT can spot bugs in isolated snippets or suggest fixes for common patterns, but their effectiveness at real debugging varies depending on the scale of the codebase, the IDE setup, and how much context they can access.

Debugging a code snippet

Copilot performs best when it has direct access to your working code environment, especially in VS Code or Visual Studio. It can catch syntax errors, incorrect function usage, and off-by-one mistakes in real time as you type. Copilot Chat goes a step further by letting you ask "Why is this not working?" and getting suggestions based on the local file and nearby files. However, its debugging depth depends heavily on what's visible in the current tab or project tree. It doesn't always infer broader architectural context or dynamic runtime behavior unless it's been part of a consistent prompt chain.

ChatGPT handles snippet-level debugging impressively well and is especially good at reasoning through logic bugs, edge cases, or API misuse, even without access to your actual environment. It compensates for a lack of real-time context by asking clarifying questions and offering multiple hypotheses. In multiturn conversations, GPT-4.1 retains and references previous attempts, making it useful for exploring complex or ambiguous issues iteratively. That said, because it's not embedded in your IDE by default, its suggestions often need to be copied, pasted, and tested manually, making it slightly less fluid for in-editor debugging than Copilot. Even so, the quality of its debugging responses is generally higher than Copilot's.

Generating unit tests

Copilot is very efficient at generating basic unit tests, especially for straightforward functions. It understands the structure of common frameworks like Jest, JUnit, and pytest and can scaffold out tests that follow the given function signature. However, when dealing with more advanced scenarios, like mocking dependencies or testing asynchronous flows, Copilot's suggestions tend to be generic unless you guide it explicitly. In multifile or layered architecture projects, it sometimes lacks the cross-file awareness needed to generate robust test coverage.

ChatGPT is excellent at writing unit tests, particularly when provided with some initial context or when working in a follow-up conversation. GPT-4.1 supports framework-specific test generation, including nuanced handling of things like mocks, dependency injection, and coverage optimization. For small, self-contained examples, its performance is comparable with Copilot. When plugged into complex codebases, such as a multimodule Android (Kotlin) project, users have commented that it shows a stronger understanding of test structure, compatibility with specific libraries, and advanced assertions. Its conversational flexibility also makes it better for iteratively improving test quality based on feedback.

Contextual awareness

As seen in earlier sections, Copilot and ChatGPT demonstrate strong contextual awareness when working within or across codebases. When modifying an existing codebase, both tools typically do a good job of staying in scope by recognizing naming conventions, dependencies, and local project structure. Copilot has the edge in cases where it's directly embedded into your IDE, using your open files and project metadata to make more precise suggestions.

That said, ChatGPT occasionally struggles with formatting, especially when rendering multiline snippets. It may introduce invisible characters, inconsistent indentation, or off-by-one typos that require manual correction.

When it comes to multiturn interactions, the gap narrows. ChatGPT's performance here is heavily influenced by its available context window, which, depending on the model and subscription, can span up to 128,000 tokens or more. This allows for rich, sustained conversations over larger projects. Copilot, especially in GitHub Copilot Chat, has made significant strides with a 64,000-token context window, but session-to-session memory and long-thread reasoning still lag behind ChatGPT's managed memory and assistant-style interface.
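To get a feel for what these window sizes mean in practice, a back-of-the-envelope estimate can help. The sketch below assumes the common rule of thumb of roughly four characters per token for English text. Real tokenizers vary, especially on code, and the reserved reply budget is an arbitrary illustrative value.

```typescript
// Rough heuristic: about 4 characters per token for English text.
// Real tokenizers vary, especially for code, so treat this as an estimate.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Returns true if all files plus a reserved reply budget fit in the window.
function fitsInContext(
  files: string[],
  windowTokens: number,
  reservedForReply = 4000,
): boolean {
  const used = files.reduce((sum, f) => sum + estimateTokens(f), 0);
  return used + reservedForReply <= windowTokens;
}
```

Under this estimate, about 300,000 characters of source (around 75,000 tokens) would fit within a 128,000-token window but not within a 64,000-token one.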

In short, both tools are on par in most real-world usage, with Copilot excelling in editor-native context and ChatGPT pulling ahead slightly in long-form, multistep interactions.

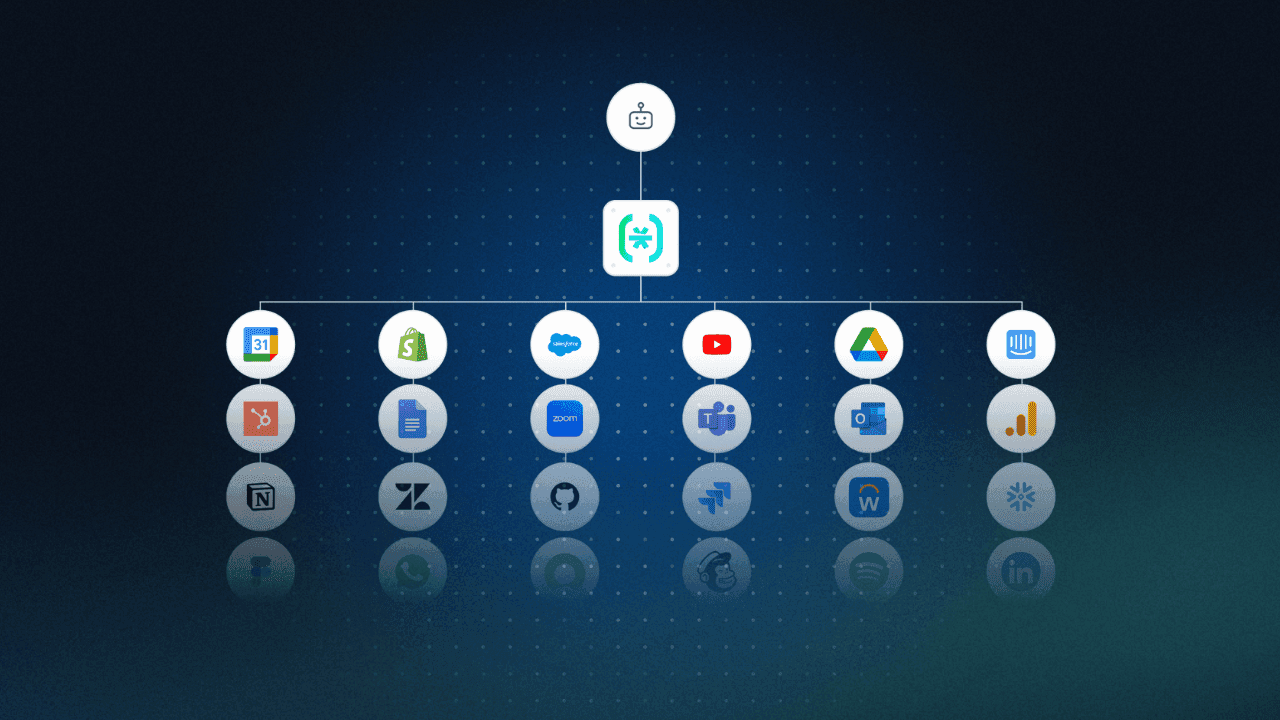

Integration possibilities

Now, let's take a look at the integration possibilities these two coding assistants offer.

IDE integrations

Microsoft Copilot is tightly integrated into Visual Studio and Visual Studio Code via first-party extensions, making it the default choice for developers in the Microsoft ecosystem. GitHub Copilot Chat is embedded directly in the editor sidebar, offering inline code suggestions, context-aware chat, and real-time documentation lookup. The experience is highly polished, with low latency and minimal setup friction, especially for users already signed in with a GitHub or Microsoft account. Reliability is solid, though advanced features (like the 64,000-token context window in Copilot Chat) require newer versions of the extensions and sometimes enterprise licensing.

ChatGPT, by contrast, doesn't come with an official IDE plugin but is supported by a growing number of third-party extensions for VS Code, IntelliJ, and other environments. These plugins range from simple code generation interfaces to full-fledged chat-based tools with file-tree awareness and terminal interaction. The experience can vary based on the plugin, and you often need to manually manage API keys and context limits. Still, developers often value the flexibility of bringing ChatGPT into any editor, not just Microsoft-owned ones.

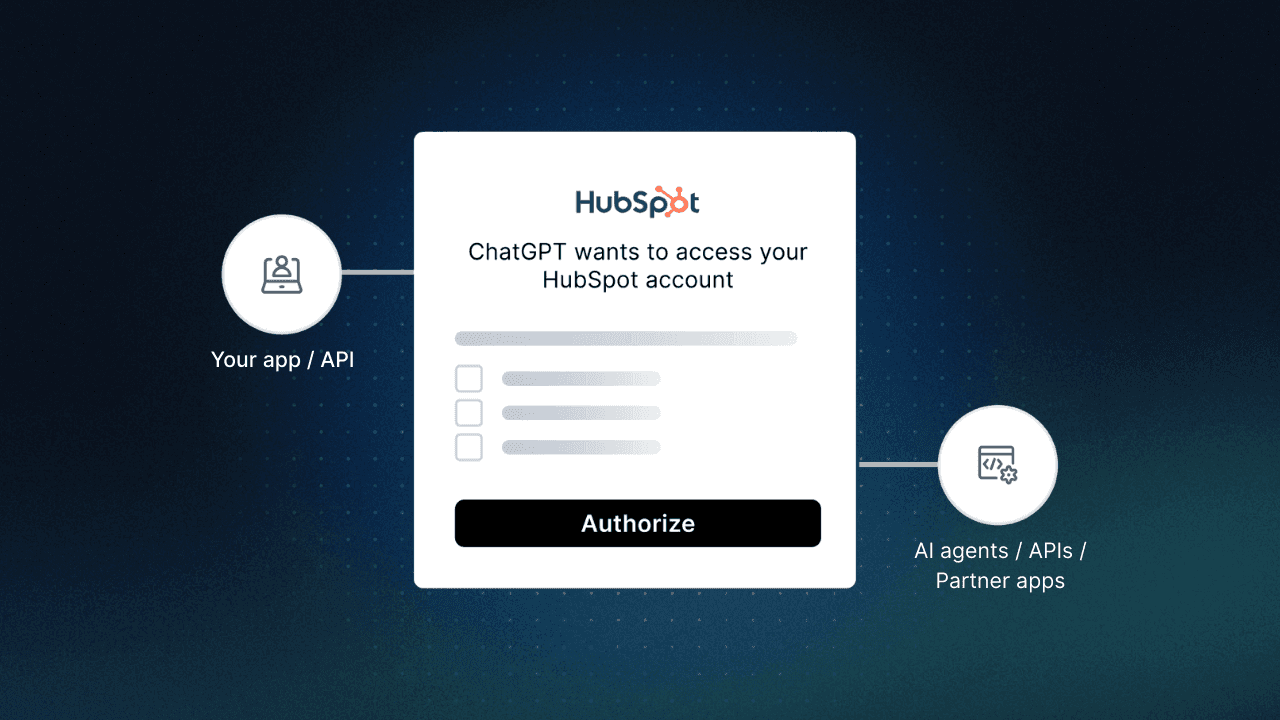

API and external service connections

Microsoft Copilot is not offered as a general-purpose API. Instead, it is exposed through the Microsoft Graph and enterprise-specific extensions for Microsoft 365 and GitHub. Authentication is handled via Azure Active Directory, making it easy to manage in corporate settings but less friendly for ad hoc or low-code API experimentation. In GitHub Copilot Business, integration with codebases is automatic, but you can't directly modify the behavior or plug it into non-Microsoft services.

ChatGPT, via OpenAI's API, is far more flexible for developers building custom integrations. You can use the GPT-4o model for chatbot assistants, documentation summarization, test generation, or internal tools. OpenAI's Assistants API allows function calling, file uploads, and memory-based interactions. Authentication uses API keys or OAuth (in hosted environments), and the documentation is detailed and backed by an active community. This makes it ideal for teams building custom tooling, internal bots, or AI-enhanced features inside their own platforms.
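As a minimal sketch of what a custom integration might start from, the helper below builds (but does not send) a request to OpenAI's Chat Completions endpoint using an API key. It uses the simpler Chat Completions endpoint rather than the Assistants API. The `buildChatRequest` name and the system prompt are assumptions for illustration.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Builds (but does not send) a Chat Completions request.
function buildChatRequest(apiKey: string, prompt: string) {
  const messages: ChatMessage[] = [
    { role: "system", content: "You write concise unit tests." },
    { role: "user", content: prompt },
  ];
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model: "gpt-4o", messages }),
    },
  };
}

// Usage (requires a real key and network access):
// const { url, init } = buildChatRequest(process.env.OPENAI_API_KEY ?? "", "Write tests for sum()");
// const res = await fetch(url, init);
```

Separating request construction from sending keeps the key handling and payload shape easy to test without network access.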

Business applications

Microsoft Copilot is particularly well suited for organizations already embedded in the Microsoft ecosystem. It thrives in structured enterprise environments where users rely on tools like Word, Excel, Outlook, and Teams alongside GitHub for development. For example, companies like KPMG have adopted Microsoft 365 Copilot to streamline report generation and data analysis, freeing up time for strategic work. In development workflows, GitHub Copilot has been shown to boost productivity by as much as 55 percent in early studies conducted by GitHub, particularly in repetitive or boilerplate-heavy coding tasks.

ChatGPT, by contrast, is favored in startups and cross-functional teams looking for versatility, custom tooling, and broader integration capabilities. It enables rapid prototyping, internal tooling, content creation, and technical ideation with minimal setup. Organizations like Genmab have leveraged ChatGPT for everything from internal document generation to multimodal exploration in R&D. Its plugin ecosystem, Assistants API, and multimodal support make it ideal for teams that need to move fast across different domains: development, operations, marketing, and even legal.

Final insights

Both Microsoft Copilot and ChatGPT are formidable AI coding assistants, but their value depends heavily on your development environment and workflow priorities. For teams embedded in the Microsoft ecosystem, Copilot is the natural fit. It thrives in structured, enterprise contexts where tasks are predictable, documentation is centralized, and compliance matters. With tight IDE integrations and domain-specific enhancements, it's a productivity booster for developers operating within well-defined tech stacks.

ChatGPT, on the other hand, shines in more open-ended, cross-functional workflows. Its adaptability, multimodal support, and wide plugin ecosystem make it an ideal choice for fast-moving teams, startups, and full-stack developers juggling everything from backend APIs to frontend copy. Whether you're sketching out a new app, automating internal tools, or just asking for help deciphering some terrifying legacy code, ChatGPT offers unmatched flexibility and responsiveness.

That wraps up the series. Hopefully, it gave you some insights on how to choose the right coding assistant for your use case! Looking ahead, you'll see that the real power of these tools won't come just from raw model strength; it'll come from how well they plug into your tools, workflows, and teams. Whether you want opinionated structure or creative latitude, the right AI assistant is the one that understands your context and gets out of your way.