This tutorial was written by Manish Hatwalne, a developer with a knack for demystifying complex concepts and translating "geek speak" into everyday language. Visit Manish's website to see more of his work!

An AI model without external tools is like a talented musician without instruments—it knows the music but can’t play a single note. On their own, language models can only generate text based on what they’ve learned. Their real power emerges when they can connect to external resources: querying databases, calling APIs, fetching real-time information, or performing actions. This transforms a text generator into an AI agent that can actually solve real problems.

For some time, function calling (introduced by OpenAI in June 2023) has been the go-to way to connect models with external systems. You define functions, the model decides when to call them, and your application executes the requests. It works, but every new tool needs its own setup, schemas differ across providers, and using multiple tools often means rewriting similar code. Inefficiencies quickly add up as projects grow.

The Model Context Protocol (MCP), introduced by Anthropic in November 2024, is an open standard that makes connecting AI models to tools and data much simpler. Instead of building custom integrations for each tool, MCP provides a universal framework for exposing context and capabilities. This reduces repetitive work and makes integrations more consistent and reliable. Overall, it makes it easier to build AI agents that can use a wide variety of tools.

This article compares MCP and function calling across five key dimensions:

Architecture and design: how each approach handles model-tool interactions

Implementation complexity: the effort required to set up and maintain integrations

Authentication and security: how access and permissions are handled

Vendor lock-in and portability: the portability of your integrations

Performance, scalability, and maintenance: how well each approach supports growth and ongoing upkeep

By comparing MCP and function calling, you can see how they both work in practice and understand the trade-offs and benefits while building AI agents.

Architecture and design philosophy

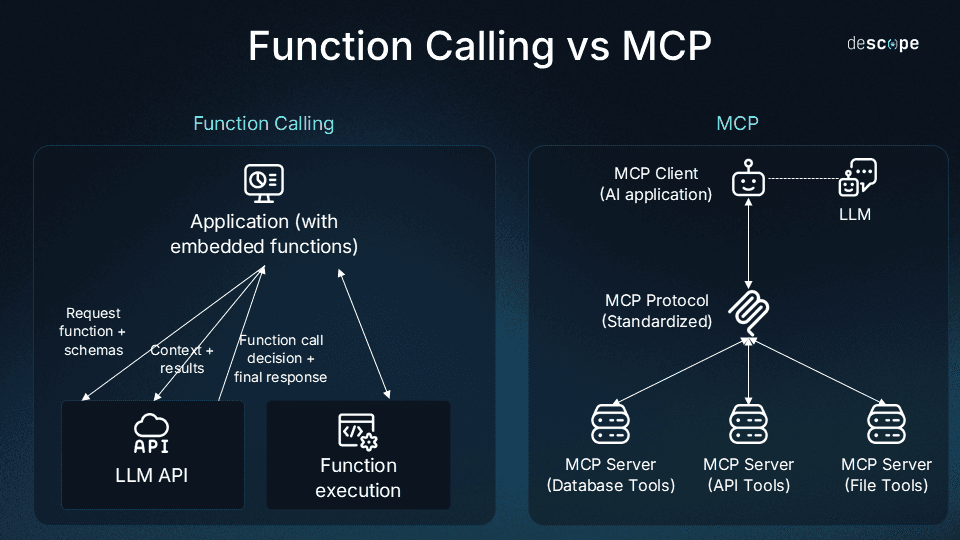

Function calling embeds tool definitions directly into your LLM requests. Every time you call the model, you send along a list of available functions with their parameters and descriptions. The model decides which functions to invoke based on the user’s query, and your application executes them, returning the results to the model. This creates tight coupling: Tool definitions live alongside your prompt, and any change requires updating the request payload.
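The request-and-execute loop described above can be sketched without any provider SDK. This is a minimal illustration rather than a specific vendor's API: the `TOOLS` registry, the `dispatch` helper, and the shape of the `model_tool_call` dictionary are assumptions made for the example, and `get_weather` returns canned data instead of calling a real service.

```python
import json
from typing import Any, Callable


def get_weather(location: str) -> dict[str, Any]:
    """Stand-in for a real weather lookup (hypothetical, returns canned data)."""
    return {"location": location, "temp_c": 18, "condition": "Cloudy"}


# The application owns both the schema sent to the model and the code behind it.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}

TOOL_SCHEMAS = [{
    "name": "get_weather",
    "description": "Get current weather for a location",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}]


def dispatch(tool_call: dict[str, Any]) -> str:
    """Run the tool the model asked for and return a JSON string for the next turn."""
    name = tool_call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**tool_call["arguments"])
    return json.dumps(result)


# A tool call as the model might emit it (shape assumed for illustration).
model_tool_call = {"name": "get_weather", "arguments": {"location": "Paris"}}
tool_result = dispatch(model_tool_call)
print(tool_result)
```

Note that the schema list and the registry sit in the same module as the rest of the application, which is the coupling the paragraph describes: adding or renaming a tool means editing both and redeploying the app.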

MCP, by contrast, uses a client-server architecture with a standardized protocol. MCP servers expose tools, resources, and prompts through a consistent interface, while MCP clients (like Claude Desktop or your AI agent) connect to these servers to make capabilities available to the model. The protocol handles communication, so you don’t need custom logic for each integration. Tool logic lives independently from your AI application, allowing servers to be developed, tested, and deployed separately.

The diagram above shows the difference: On the left, function calling tightly couples the model and tools; on the right, MCP’s client-server architecture separates them, enabling modular, reusable integrations.

Why MCP shines: Modularity makes all the difference. You build an MCP server once, and any MCP-compatible client can use it. If you switch AI models, your tools keep working without any code changes. If you want to share a database integration across multiple projects, you can package it as an MCP server and plug it in anywhere. This reusability significantly reduces duplicated effort as your AI ecosystem grows.

When function calling works better: For simple, lightweight integrations, function calling can be more pragmatic. If you’re building a small prototype with two to three custom functions used only by your application, setting up an MCP server may be overkill. Function calling keeps everything in one place, with no extra processes or protocol to learn. It’s quick to implement and ideal for one-off tools that don’t need to be shared or reused.

Implementation complexity and developer experience

The developer experience differs significantly between these approaches, and the right choice depends on the project’s stage and scale.

Function calling is straightforward. Tools are defined as JSON schemas directly in your code, described in-line, and executed immediately when the LLM requests them. There’s no separate infrastructure or protocol to learn. Everything lives in the main application code, so the flow is easy to follow and debug with familiar tools.

MCP requires more up-front work. You need to create an MCP server (either your own or by configuring an existing one), implement the protocol’s communication via JSON-RPC, and manage the connection between client and server. At least two separate processes must communicate reliably, adding some initial complexity.
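To make "communication via JSON-RPC" more concrete, the sketch below builds and parses the kind of messages an MCP client and server exchange when a tool is invoked. The `tools/call` method name and the `content` list in the result follow the MCP specification as commonly documented; the helper names (`make_tool_call`, `extract_text`) and the sample payload are invented for illustration. In practice, the MCP SDK produces and consumes these messages for you.

```python
import json
from typing import Any


def make_tool_call(request_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking the server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def extract_text(raw_response: str, request_id: int) -> str:
    """Pull the text content out of a tool result, raising on errors."""
    message = json.loads(raw_response)
    if message.get("id") != request_id:
        raise ValueError("response does not match the request id")
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "unknown error"))
    result = message["result"]
    if result.get("isError"):
        raise RuntimeError("tool reported a failure")
    return "\n".join(
        item["text"] for item in result["content"] if item.get("type") == "text"
    )


request = make_tool_call(1, "get_current_weather", {"location": "Paris"})

# A response a server might send back (sample data, not real output).
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Paris: 18 C, cloudy"}]},
})
weather_text = extract_text(sample_response, 1)
print(request)
print(weather_text)
```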

This simple weather API integration illustrates the difference:

Function calling approach:

    import anthropic

    client = anthropic.Anthropic()  # Reads ANTHROPIC_API_KEY from the environment

    # Define the function schema (Anthropic's Messages API expects "input_schema")
    tools = [{
        "name": "get_weather",
        "description": "Get current weather for a location",
        "input_schema": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name"}
            },
            "required": ["location"]
        }
    }]

    # Make LLM request
    response = client.messages.create(
        model="claude-sonnet-4-20250514",
        max_tokens=1024,  # Required by the Messages API
        messages=[{"role": "user", "content": "What's the weather in Paris?"}],
        tools=tools
    )

    # Execute if function is called
    if response.stop_reason == "tool_use":
        # Find the tool call and extract the parameters sent by the model
        tool_use = next(block for block in response.content if block.type == "tool_use")
        # get_weather_from_api is your own API wrapper, defined elsewhere
        weather_data = get_weather_from_api(tool_use.input["location"])
        # Send results back to the LLM for further processing…

MCP approach:

    # weather_server.py - Separate MCP server
    import os

    import httpx
    from mcp.server.fastmcp import FastMCP

    WEATHER_API_BASE = "https://api.weatherapi.com/v1"
    API_KEY = os.getenv("WEATHER_API_KEY")

    # Initialize FastMCP server
    mcp = FastMCP("weather-server")  # Handles protocol-specific schema, transport, etc.

    # Other code: _send_api_request(...), _parse_weather_data(...) etc.

    @mcp.tool()
    async def get_current_weather(location: str):
        """Get current weather for a location."""
        api_endpoint = f"{WEATHER_API_BASE}/current.json?key={API_KEY}&q={location}"
        data = await _send_api_request(api_endpoint)  # Function defined earlier in the code
        if not data or "current" not in data or not data["current"]:
            return "Unable to obtain weather information at this time."
        return _parse_weather_data(data)  # Function defined earlier in the code

    if __name__ == "__main__":
        # Initialize and run the server
        mcp.run(transport='stdio')

    # client.py - Your application
    # Connect to MCP server and use tools through the protocol

The function calling version is fewer than thirty lines of straightforward code. The MCP version involves a separate server file, protocol handling (mostly via the MCP SDK), and client connection logic, requiring significantly more code for this simple example.

Where function calling excels: Rapid prototyping and MVPs. You can move from idea to working integration in minutes, with direct control over the entire flow, which is ideal for experimentation and quick iteration.

Why MCP is superior for production: MCP’s initial complexity pays off in production or larger projects. MCP servers become reusable, version-controlled components that can be tested independently and shared across applications. For teams building multiple AI tools or planning long-term maintenance, this infrastructure-as-code approach prevents the tangled, hard-to-maintain code that often emerges from in-line function definitions scattered across projects.

Authentication and security

Security is one of the biggest practical differences between function calling and MCP; this difference becomes particularly important once your AI agents start handling sensitive data or performing real-world actions.

Function calling manages security at the application level. Credentials, API keys, and access tokens typically live in your main app, usually as environment variables or configuration files. When the LLM decides to call a function, your application uses these credentials directly to execute the request:

    # Function calling security - credentials in main app
    import os

    def get_database_records(query):
        db_password = os.getenv("DATABASE_PASSWORD")
        connection = connect_to_db(password=db_password)
        return connection.execute(query)

    # Tools exposed to LLM
    tools = [{
        "name": "get_database_records",
        "description": "Query the customer database",
        "parameters": {...}
    }]

Everything runs in the same process with the same permissions. If the application is compromised, an attacker gains access to all credentials and can execute any function the LLM can call.

MCP takes a different approach by isolating credentials at the server level. Each MCP server runs as its own process with independent authentication, keeping credentials inside the server environment. The client application doesn’t access backend systems directly; it only communicates with the MCP server through the protocol:

    # MCP server - credentials isolated in server
    import os

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("database-server")

    # Credentials stay on the server
    DB_PASSWORD = os.getenv("DATABASE_PASSWORD")

    # Other code...

    @mcp.tool()
    async def get_database_records(query: str):
        # Server has credentials, client doesn't
        connection = connect_to_db(password=DB_PASSWORD)
        return execute_query(connection, query)

    # Client application has no direct database access
    # It can only make requests through the MCP protocol

This follows the principle of least privilege: The AI application only gets access to what the MCP server explicitly exposes, not the full backend.
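One way to picture "only what the server explicitly exposes" is to compare a tool that accepts arbitrary SQL with one that accepts a single validated parameter. The sketch below uses an in-memory SQLite database as a stand-in for the real backend; the `customers` table, the `get_customer_email` function, and the sample row are hypothetical, and the MCP decorator is left out so the example runs with the standard library alone.

```python
import sqlite3

# In-memory database standing in for the backend the server protects.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, card_number TEXT)"
)
conn.execute(
    "INSERT INTO customers VALUES (1, 'ada@example.com', '4111-0000-0000-0000')"
)
conn.commit()


def get_customer_email(customer_id: int) -> str:
    """Narrow tool: one parameterized lookup that returns one non-sensitive column."""
    # Reject anything that is not a plain integer (bool is a subclass of int).
    if not isinstance(customer_id, int) or isinstance(customer_id, bool):
        raise ValueError("customer_id must be an integer")
    row = conn.execute(
        "SELECT email FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()
    return row[0] if row else "No such customer."


print(get_customer_email(1))
print(get_customer_email(42))
```

Because the caller never supplies SQL, the `card_number` column is unreachable through this tool, even if the model is manipulated into requesting it. A tool that takes a raw query string offers no such guarantee.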

Security model comparison:

| Aspect | Function Calling | MCP |
|---|---|---|
| Credential storage | Application environment | Server environment (isolated) |
| Access control | Application level | Server level (granular) |
| Audit logging | Manual implementation | Protocol-level support |
| Privilege scope | All or nothing | Least privilege by default |
| Attack surface | Entire application | Per-server isolation |

Why MCP excels for enterprise: Security isolation is a major advantage in production environments. If your AI application is compromised, attackers can only reach what specific MCP servers allow, protecting the rest of your infrastructure. You can implement centralized authentication, add role-based access control (RBAC), and maintain detailed audit logs of tool usage:

    # MCP with role-based access control
    # Illustrative only: `context` stands for the caller's identity as established
    # by the server's own authentication layer, never values supplied by the model.
    @mcp.tool()
    async def delete_records(context: dict, query: str):
        user_role = context.get("user_role")
        if user_role != "admin":
            raise PermissionError("Admin access required")
        # Audit logging built into the server (audit_log is a helper defined elsewhere)
        audit_log(user=context["user"], tool="delete_records", args=query)
        connection = connect_to_db(password=DB_PASSWORD)
        return execute_query(connection, query)

Function calling considerations: For trusted internal tools or personal projects, function calling’s simpler security model is often adequate. If your app runs in a secure environment or you’re building a private AI assistant for personal use, managing separate MCP servers can be unnecessary overhead.

Vendor lock-in and portability

One of the hidden downsides of function calling is vendor lock-in. Each LLM provider has its own version: OpenAI calls it function calling, Anthropic calls it tool use, and others have their own takes. While they all aim to do the same thing, their schemas and APIs differ enough to make switching providers tedious. If you migrate from one AI model to another, you’ll need to rewrite or adapt every function definition and integration layer. The pain multiplies when your system uses dozens of tools.
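The schema differences are easy to see side by side. The sketch below wraps one JSON Schema in the tool format used by OpenAI's Chat Completions API and in the one used by Anthropic's Messages API, as those formats are documented at the time of writing; check the current provider documentation before relying on them. The helper names `to_openai_tool` and `to_anthropic_tool` are made up for this example.

```python
from typing import Any


def to_openai_tool(name: str, description: str, schema: dict[str, Any]) -> dict[str, Any]:
    """Wrap a JSON Schema in the shape OpenAI's Chat Completions API expects."""
    return {
        "type": "function",
        "function": {"name": name, "description": description, "parameters": schema},
    }


def to_anthropic_tool(name: str, description: str, schema: dict[str, Any]) -> dict[str, Any]:
    """Wrap the same JSON Schema in the shape Anthropic's Messages API expects."""
    return {"name": name, "description": description, "input_schema": schema}


weather_schema = {
    "type": "object",
    "properties": {"location": {"type": "string", "description": "City name"}},
    "required": ["location"],
}

openai_tool = to_openai_tool(
    "get_weather", "Get current weather for a location", weather_schema
)
anthropic_tool = to_anthropic_tool(
    "get_weather", "Get current weather for a location", weather_schema
)

# Same schema, different envelope and key names.
print(sorted(openai_tool))
print(sorted(anthropic_tool))
```

The tool definition is only part of the work. The way each provider returns a tool call and accepts the result also differs, so a real migration touches the response-handling code as well.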

MCP avoids this problem by being provider-agnostic. It defines a universal protocol for exposing and accessing tools, independent of which LLM you use. The same MCP server can work with both OpenAI and Anthropic models through any MCP-compatible client, with no changes to the server code. This makes it easy to experiment, switch providers, or even run multiple models side by side without breaking existing integrations.

Consider a practical example: You’ve built an AI application using OpenAI that connects to a weather API and a database. Now you want to switch to Claude for better reasoning on complex queries. With function calling, you’d have to rewrite all your tool definitions, update the schemas to match Anthropic’s format, and retest everything. With MCP, you simply point your Claude client to the same weather and database MCP servers you already have, with zero rewrites and immediate compatibility.

In short, MCP offers flexibility and long-term resilience. It frees you from vendor constraints, keeps your tools portable across ecosystems, and allows your AI setup to evolve as models change. Function calling, while slightly smoother and faster within a single provider’s ecosystem, gives up that long-term flexibility in exchange for short-term convenience.

Performance, scalability, and maintenance

As AI applications mature from quick prototypes to production systems, scalability and maintenance become as critical as functionality.

Function calling tightly couples tools to your app’s runtime. Every tool execution competes for the same CPU and memory, and updating or adding one means editing core code, testing, and redeploying. For small teams with a handful of tools, this works fine. But as complexity grows, every tool change risks touching production code.

MCP’s distributed design works differently. Each MCP server runs as its own process or service and scales independently. You can scale your database server under heavy load while leaving your weather server untouched. Teams can maintain and deploy updates separately through CI/CD pipelines without impacting the main app. This approach offers cleaner separation, easier scaling, and faster iterations.

In short, function calling fits small apps and experiments; MCP shines once performance, scale, and maintainability start to matter.

MCP as the standard for agentic AI

In less than a year, MCP has seen remarkable adoption. Anthropic’s Claude Desktop already supports it natively, and an expanding ecosystem of prebuilt servers now covers everything from GitHub to Postgres to Slack. Major platforms are adding MCP support too, drawn by its core advantage: Build once, connect anywhere. Developers can reuse the same tool definitions across providers, while platforms instantly tap into a growing library of ready-to-use tools.

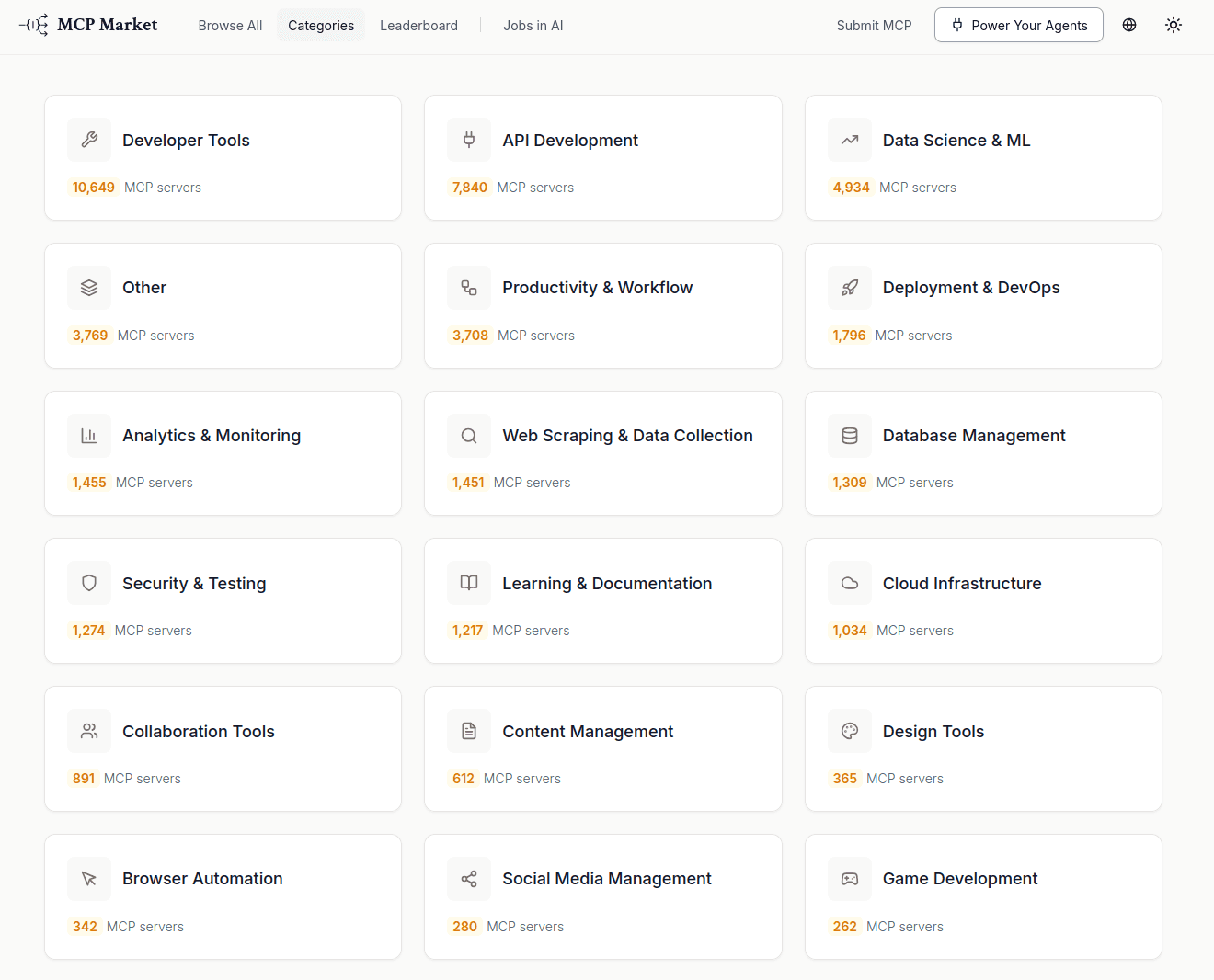

Here’s a glimpse from MCPMarket.com, showing over eleven thousand MCP servers across categories:

MCP’s rapid growth signals a deeper shift in AI design. Early AI agents were monolithic, with everything bundled into one deployment. Modern agents, by contrast, rely on specialized external tools for real-time data, domain logic, and third-party APIs. MCP provides the universal interface that makes this modularity possible. It’s often described as the “USB-C port” for AI: a standard connector that lets any tool plug into any agent without custom wiring.

The trajectory is upward: Just as REST standardized web services and Docker standardized deployments, MCP is fast becoming the standard for AI tool integration. Function calling will stay useful for simple, single-provider setups, but the future of AI lies in interoperability, reuse, and flexibility. MCP isn’t just a new way to connect tools; it’s emerging as the foundation for the next generation of agentic, multiprovider AI systems.

Evolving LLM connections

Function calling was the first bridge between LLMs and external tools, but its provider-specific, fragmented approach creates friction as applications scale. MCP takes a different path: a standardized protocol that transforms repetitive, custom integrations into reusable, composable infrastructure. Across architecture, security, portability, and scalability, a clear pattern emerges: Function calling favors immediate simplicity, while MCP focuses on long-term maintainability and ecosystem growth.

MCP represents the natural evolution of AI integration, moving from one-off implementations to modular, production-grade infrastructure. Building on shared protocols rather than reinventing connections for every tool or provider is the most sustainable path forward. As MCP becomes the standard for connecting AI agents to external tools, managing identity and authorization across this ecosystem becomes critical.

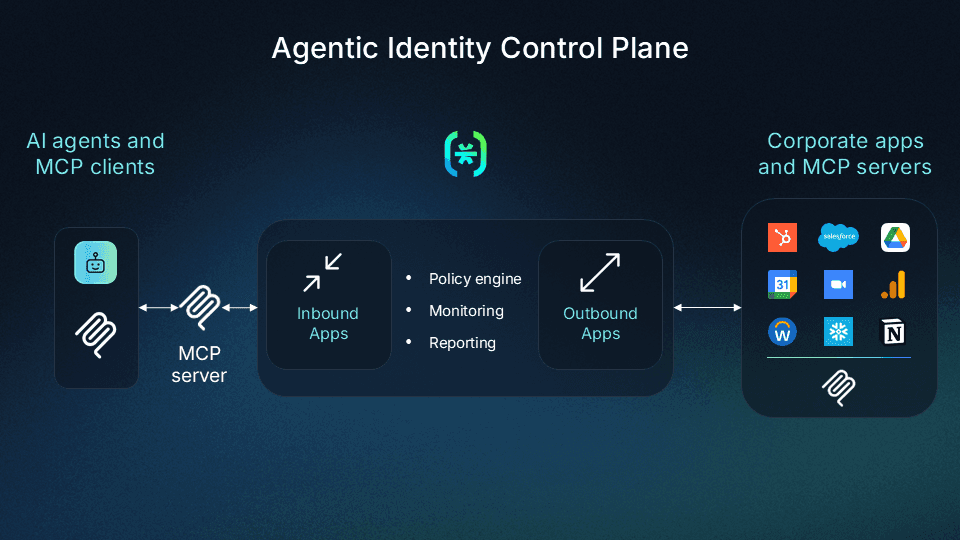

For developers building remote MCP servers, Descope's MCP Auth SDKs and APIs simplify implementing OAuth-based authorization, abstracting away the complexity of from-scratch MCP auth development. For enterprise agent security, the Agentic Identity Control Plane delivers scope-based access control, monitoring, and identity lifecycle management across agentic and MCP ecosystems.

Whether you're building AI agents that need to connect to third-party services or securing MCP servers that expose sensitive capabilities, Descope provides the identity infrastructure to make your agentic AI production-ready without sapping developer time.

Learn more about the use cases Descope can help you tackle in your next AI project. Looking to deploy an MCP server with auth built-in? Try Descope's FastMCP integration or Vercel starter template.