As large language models (LLMs) move deeper into enterprise workflows, the need for seamless, scalable integration with data, tools, and other AI agents has become clear. Two emerging protocols, Model Context Protocol (MCP) and Agent2Agent (A2A), address this challenge from different angles. Both are designed to reduce complexity, streamline operations, and support smarter automation.

Below, we break down what each protocol does, how they work, and why they’re better together than apart.

What is MCP?

MCP is an open-source protocol that standardizes how LLMs such as ChatGPT or Claude connect with data sources and tools. Without MCP, connecting an LLM with Google, GitHub, and other external sources often means building against a different application programming interface (API) for each one. With MCP, a single protocol covers most of these use cases.

Anthropic developed MCP to reduce this complexity for both developers and end users. For developers, the core issue is the N×M problem, where N is the number of LLMs and M is the number of systems they need to integrate with. Each combination requires its own custom logic or infrastructure, so the number of integrations grows as N×M; with a shared protocol, each LLM application and each system implements MCP once, bringing the total closer to N+M. For users, the main pain point is the friction of manually copying and pasting content between applications and interfaces.
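
To make the scaling argument concrete, here is a small worked count. It is a sketch that treats each custom connector, and each protocol implementation, as one unit of integration work; that is a simplifying assumption, since real integrations vary widely in effort.

```python
def point_to_point_integrations(n_models: int, m_systems: int) -> int:
    """Custom approach: every model needs its own connector to every system."""
    return n_models * m_systems


def shared_protocol_integrations(n_models: int, m_systems: int) -> int:
    """Shared protocol: each LLM application implements an MCP client once
    and each system exposes an MCP server once."""
    return n_models + m_systems


for n, m in [(3, 10), (5, 40)]:
    print(f"N={n}, M={m}: custom={point_to_point_integrations(n, m)}, "
          f"shared protocol={shared_protocol_integrations(n, m)}")
```

With 5 models and 40 systems, that is 200 custom connectors versus 45 protocol implementations, and the gap widens as either number grows.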

MCP introduces several innovations to address these challenges, including:

Standardized contextualization, enabling models to receive structured context across tools.

State synchronization, allowing tools and agents to share memory or history with the model.

System interoperability, ensuring consistent behavior across tools, apps, and services.

The benefits of MCP include faster and more scalable integration of LLMs into existing tech stacks, reduced development overhead, and smoother user workflows. However, as with any system that facilitates access to third-party tools, security considerations—particularly around authorization mechanisms such as OAuth—remain critical and must be carefully managed at the implementation level.

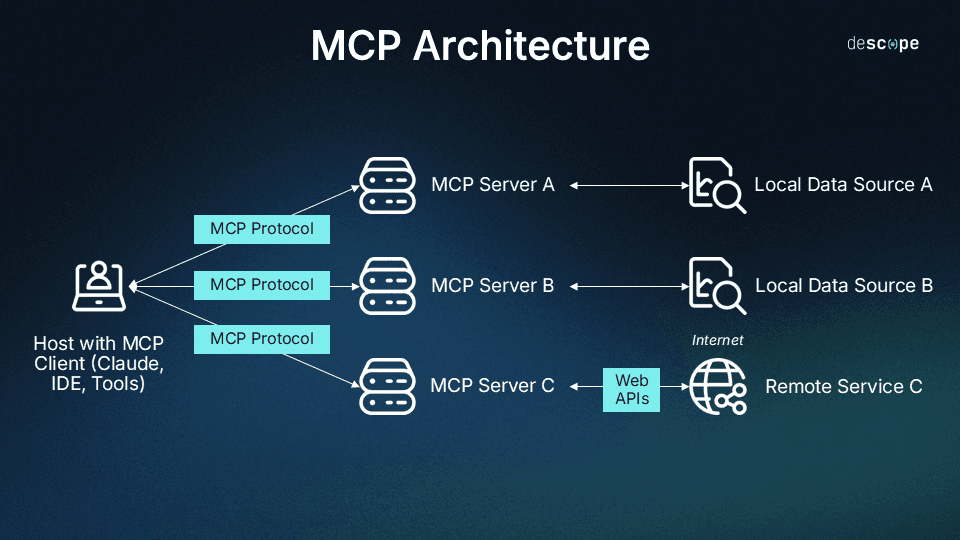

How MCP works

The underlying mechanisms that make MCP work are connections and trust between an LLM application and the data sources and tools it’s authorized to access. This starts with a “protocol handshake” in which the client connects to each MCP server, discovers what that server offers (such as tools, resources, and prompts), and registers those capabilities for later use in answering user requests.
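
MCP messages are JSON-RPC 2.0, and the handshake consists of an `initialize` request, an `initialized` notification, and then capability discovery such as `tools/list`. The sketch below builds those client messages and registers what a server advertises. It is a minimal sketch under stated assumptions: the transport is omitted, the server replies are hard-coded stand-ins, names such as `example-client` and `search_tickets` are hypothetical, and the protocol version string should match the spec revision you actually target.

```python
import json
from itertools import count
from typing import Optional

PROTOCOL_VERSION = "2025-03-26"  # one published MCP revision; use the one you target
_next_id = count(1)  # JSON-RPC request ids must be unique within a session


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """Build a JSON-RPC 2.0 notification: no id, no response expected."""
    return {"jsonrpc": "2.0", "method": method}


# 1. The client proposes a protocol version and declares its own capabilities.
init = request("initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})

# 2. Hard-coded stand-in for the server's reply, advertising tool support.
init_result = {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {"tools": {"listChanged": True}},
    "serverInfo": {"name": "example-ticket-server", "version": "0.1.0"},  # hypothetical
}

# 3. The client confirms it is ready, then lists tools only if the server offers them.
initialized = notification("notifications/initialized")
list_tools = request("tools/list") if "tools" in init_result["capabilities"] else None

# 4. Stand-in tools/list result; the client registers each tool by name.
tools_result = {"tools": [{
    "name": "search_tickets",  # hypothetical tool
    "description": "Search support tickets by keyword",
    "inputSchema": {"type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"]},
}]}
registry = {tool["name"]: tool for tool in tools_result["tools"]}

for msg in (init, initialized, list_tools):
    print(json.dumps(msg))
print("registered tools:", sorted(registry))
```

Each tool's `inputSchema` is a JSON Schema, which is what lets the model later produce arguments in a form the server can validate.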

Then, when users make a request that requires external data, the following steps typically occur:

Request analysis: The LLM analyzes the user’s prompt and determines that it needs information beyond its current context.

User consent: The LLM prompts the user to authorize access to the relevant external system or dataset, if needed.

Access request: The LLM sends a structured request to the MCP server using a standardized schema.

Data provisioning: The MCP server evaluates the request, checks permissions, and returns the appropriate data or tool output.

Context integration: The LLM incorporates the new information into its session context.

Response generation: The LLM uses the enriched context to generate a more accurate, informed response.
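
The access request and data provisioning steps above map onto MCP’s `tools/call` method. The following is a minimal sketch, assuming a single registered tool named `search_tickets` (hypothetical): the model’s request analysis and the user’s consent are reduced to plain function arguments, and the MCP server is replaced by a local stub that returns fake data.

```python
from typing import Callable, Optional


def make_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Access request: a JSON-RPC 2.0 tools/call message."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def stub_server(message: dict) -> dict:
    """Data provisioning: a local stand-in for an MCP server with one tool."""
    params = message["params"]
    if params["name"] != "search_tickets":
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = f"2 open tickets match '{params['arguments']['query']}'"  # fake data
    return {"jsonrpc": "2.0", "id": message["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


def build_context(prompt: str, tool_query: Optional[str], user_consents: bool,
                  send: Callable[[dict], dict] = stub_server) -> list[str]:
    """Request analysis is reduced to tool_query: None means no outside data is needed."""
    context = [f"user: {prompt}"]
    if tool_query is None or not user_consents:  # no need, or no consent
        return context
    reply = send(make_tool_call(1, "search_tickets", {"query": tool_query}))
    result = reply.get("result")
    if result and not result["isError"]:
        # Context integration: keep the text blocks the tool returned.
        context += [f"tool: {block['text']}" for block in result["content"]
                    if block["type"] == "text"]
    return context  # response generation would pass this context to the model


print(build_context("Why can't users log in?", tool_query="login", user_consents=True))
```

Passing the transport in as `send` keeps the flow testable; a real client would send the same message over stdio or HTTP instead of calling a local function.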

Though this may seem like a multi-step workflow, it runs automatically within a single exchange, so users get informed responses without repeatedly feeding the same information into the LLM to answer similar questions.

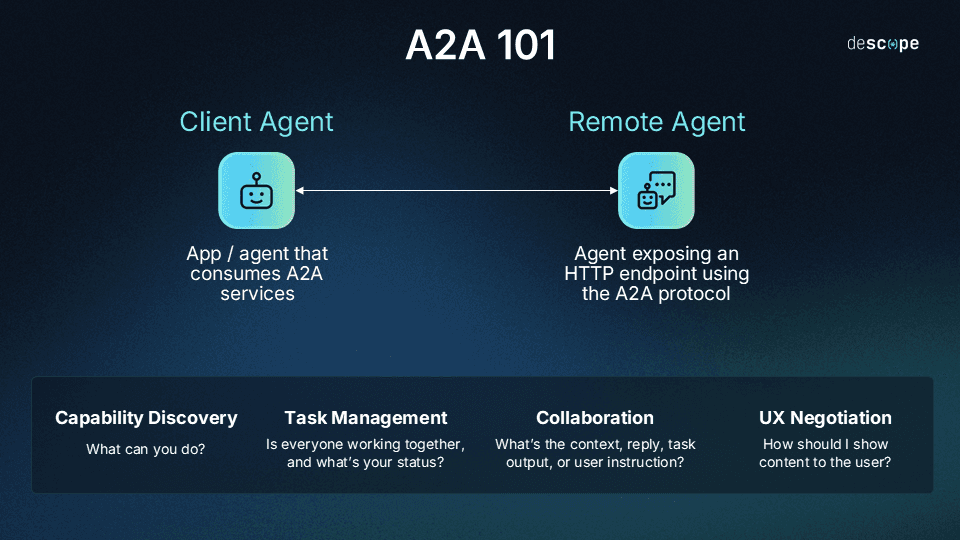

What is A2A?

While MCP focuses on helping LLMs interact with external data sources and tools, Google’s Agent2Agent (A2A) protocol enables autonomous agents to communicate and collaborate with one another. Google announced A2A in April 2025 with support and contributions from more than 50 technology partners. The result lets agents collaborate in their natural, unstructured modalities, even when they don’t share memory, tools, or direct access to the same resources.

Like MCP, A2A was created to simplify and scale how LLMs and agents are put to work. Google also lists security, compatibility with existing systems and standards, and modality agnosticism (i.e., not being tied to specific input/output formats like text, images, or speech) among the core design principles behind A2A.

Building on these principles, A2A enables four foundational capabilities:

Capability discovery: Agents can query one another to identify which tools, actions, or processes are best suited to fulfilling a user's request.

Task management: Agents can determine and report on status dynamically as a task is being completed, communicating with each other to ensure synchronization throughout.

Collaboration: Agents can ask each other questions, answer them semi-independently, and exchange outputs such as artifacts, context, or user instructions.

UX negotiation: Agents talk with each other to determine the best ways to present information to users, taking into account format, accessibility, and user background.
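
Task management is the most mechanical of these capabilities: A2A tracks each task through named states. The sketch below uses six of the task states the A2A spec defines (`submitted`, `working`, `input-required`, `completed`, `canceled`, `failed`). The transition table and the `Task` class are simplifying assumptions for illustration, not rules taken from the protocol.

```python
from dataclasses import dataclass, field

# Allowed next states. Six of the A2A task states; the table itself is an
# illustrative assumption, not a rule from the spec.
TRANSITIONS = {
    "submitted": {"working", "canceled", "failed"},
    "working": {"input-required", "completed", "canceled", "failed"},
    "input-required": {"working", "canceled", "failed"},
    "completed": set(),  # terminal states accept no further updates
    "canceled": set(),
    "failed": set(),
}


@dataclass
class Task:
    task_id: str
    state: str = "submitted"
    history: list = field(default_factory=lambda: ["submitted"])

    def update(self, new_state: str) -> None:
        """Apply a status update reported by the agent doing the work."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.task_id}: {self.state} -> {new_state} is not allowed")
        self.state = new_state
        self.history.append(new_state)

    @property
    def done(self) -> bool:
        return not TRANSITIONS[self.state]


# A remote agent starts work, pauses to ask the user a question, then finishes.
task = Task("task-1")
for status in ("working", "input-required", "working", "completed"):
    task.update(status)
print(task.history, task.done)
```

Rejecting out-of-order updates gives the initiating agent a consistent view of progress, which is what it needs to report accurate status back to the user.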

Together, these features allow agents to function more cooperatively across ecosystems, offering a more seamless experience for users and less custom integration work for developers. However, A2A is still in its early days. It doesn’t yet support fully autonomous agent networks, and organizations must actively manage the evolving risks associated with early-stage interoperability protocols.

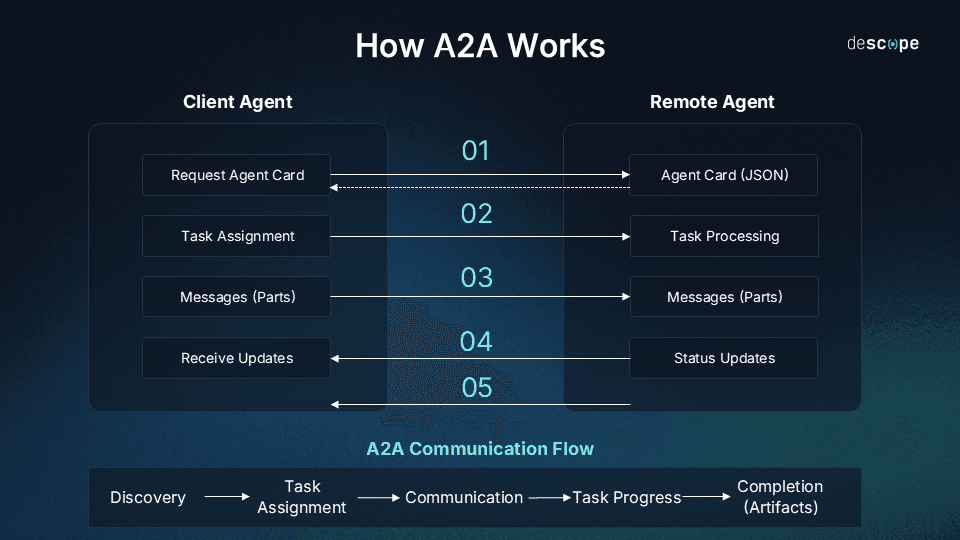

How A2A works

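A2A enables AI agents (powered by LLMs or similar models) to communicate and collaborate using standard web technologies: structured JSON-RPC 2.0 messages sent over HTTP(S) to the A2A server endpoint that each remote agent exposes, with Server-Sent Events available for streaming updates.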

Before agents begin working together, they discover one another and establish trust through Agent Cards: JSON metadata documents, typically published at a well-known URL, that describe an agent’s capabilities, skills, service endpoint, and authentication requirements without exposing proprietary implementation details. This allows interoperability without sacrificing privacy or security.
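
Here is a simplified pair of Agent Cards, written as Python dicts, plus a helper that matches a subtask to agents by skill tag. Field names follow the A2A Agent Card schema as we understand it but are abridged (authentication and several optional fields are omitted). The agents, `example.com` endpoints, skills, and the tag-matching rule are all hypothetical; a real client would fetch each card from the agent rather than inline it.

```python
from typing import Any

# Abridged Agent Cards; every agent, endpoint, and skill here is hypothetical.
AGENT_CARDS: list[dict[str, Any]] = [
    {
        "name": "Travel Agent",
        "description": "Searches and books flights",
        "url": "https://travel.example.com/a2a",
        "version": "1.0.0",
        "capabilities": {"streaming": True},
        "defaultInputModes": ["text/plain"],
        "defaultOutputModes": ["text/plain", "application/json"],
        "skills": [{"id": "book-flight", "name": "Book flight",
                    "description": "Find and book flights",
                    "tags": ["travel", "flights"]}],
    },
    {
        "name": "Expense Agent",
        "description": "Files and tracks expense reports",
        "url": "https://expenses.example.com/a2a",
        "version": "1.0.0",
        "capabilities": {"streaming": False},
        "defaultInputModes": ["text/plain"],
        "defaultOutputModes": ["application/json"],
        "skills": [{"id": "file-expense", "name": "File expense",
                    "description": "Create an expense report",
                    "tags": ["finance", "expenses"]}],
    },
]


def find_agents(cards: list[dict[str, Any]], tag: str) -> list[str]:
    """Return endpoints of agents advertising a skill with this tag.

    Only the public card is inspected; the agent's internals stay private.
    """
    return [card["url"] for card in cards
            if any(tag in skill.get("tags", []) for skill in card.get("skills", []))]


print(find_agents(AGENT_CARDS, "flights"))
```

Because selection works only from what each card advertises, an agent can change its internal implementation without affecting how others discover it.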

The typical A2A interaction unfolds as follows:

User request: A user prompts an AI agent to perform a complex task.

Agent coordination: The initiating agent determines that it needs help from other agents to fulfill the request.

Agent card discovery: The initiating agent reviews available Agent Cards to assess which other agents are best suited for specific subtasks.

Delegation: The initiating agent delegates parts of the task to selected external agents.

Dynamic communication: The agents communicate in real time, coordinating efforts and sharing intermediate results.

Progress updates: The initiating agent provides the user with task status updates throughout the process.

Artifact generation: Once the task is complete, the initiating agent compiles final outputs (e.g., reports, recommendations, or structured data artifacts).
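
The delegation, progress-update, and artifact steps can be sketched as an orchestrator loop. This sketch assumes the JSON-RPC method name `message/send` and the message shape (`role`, `parts`, `messageId`) from recent revisions of the A2A spec; earlier drafts used `tasks/send`, and field names differ between versions. The remote agents are local stubs returning a simplified dict rather than a full A2A task object, and all agent names, subtasks, and outputs are hypothetical.

```python
import uuid
from typing import Callable


def message_send(text: str) -> dict:
    """Build a JSON-RPC 2.0 request delegating one subtask to a remote agent."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "message/send",
        "params": {"message": {
            "role": "user",
            "parts": [{"kind": "text", "text": text}],
            "messageId": str(uuid.uuid4()),
        }},
    }


# Local stand-ins for remote agents picked via their Agent Cards (hypothetical).
# They return a simplified dict rather than a full A2A task object.
def flight_agent(request: dict) -> dict:
    return {"status": "completed", "artifact": "Flight: SFO to JFK, departing Monday"}


def hotel_agent(request: dict) -> dict:
    return {"status": "completed", "artifact": "Hotel: 2 nights in Midtown"}


def orchestrate(subtasks: dict[str, Callable[[dict], dict]],
                report: Callable[[str], None] = print) -> str:
    """Delegate each subtask, report progress, and compile the artifacts."""
    artifacts = []
    for description, agent in subtasks.items():
        report(f"delegating: {description}")       # progress update to the user
        result = agent(message_send(description))  # would be an HTTP POST in practice
        report(f"{description}: {result['status']}")
        if result["status"] == "completed":
            artifacts.append(result["artifact"])
    return "\n".join(artifacts)                    # final compiled output


print(orchestrate({"Book a flight to New York": flight_agent,
                   "Book a hotel in Midtown": hotel_agent}))
```

Injecting `report` lets the same loop stream status to a chat UI, a log, or a test, without changing the delegation logic.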

This approach lets a single AI agent punch above its weight, marshaling the expertise and functionality of multiple external agents and delivering better results with less manual input from the user.


MCP vs. A2A or MCP + A2A?

For development teams considering how to streamline authentication, authorization, and system interoperability, understanding the difference between MCP and A2A is essential, but not always straightforward. Both protocols are designed to improve communication between systems and agents, and both can play a role in modernizing enterprise workflows.

The truth is: MCP and A2A are more complementary than competitive, and they’re designed to work together.

MCP focuses on helping a single LLM or agent interact with external tools and data sources.

A2A, on the other hand, facilitates communication and collaboration between multiple agents, regardless of whether they share direct access to those same resources.

Security considerations

Both protocols expand the "surface area" of interaction by introducing new layers of logic and communication, but neither MCP nor A2A is inherently less secure than other integration approaches. Their security posture depends heavily on how they are configured and on the vulnerabilities of the systems they connect to. In both cases, standard best practices such as robust authentication, least-privilege access, and monitoring are essential.
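
As one illustration of least-privilege access, an MCP server can refuse any tool call whose access token lacks the scope that tool requires, and deny unknown tools by default. In this sketch the tool names, scope strings, and token claims are hypothetical, and the claims are assumed to come from an OAuth access token that has already been validated (signature, audience, expiry) before this check runs.

```python
# Hypothetical mapping from each tool to the single OAuth scope it requires.
REQUIRED_SCOPES = {
    "search_tickets": "tickets:read",
    "close_ticket": "tickets:write",
}


def authorize_tool_call(tool_name: str, token_claims: dict) -> bool:
    """Allow the call only if the token grants the tool's scope.

    Tools missing from REQUIRED_SCOPES are denied (deny by default).
    """
    required = REQUIRED_SCOPES.get(tool_name)
    if required is None:
        return False
    # OAuth access tokens commonly carry scopes as one space-delimited string.
    granted = set(token_claims.get("scope", "").split())
    return required in granted


claims = {"sub": "agent-123", "scope": "tickets:read"}  # assumed pre-validated
print(authorize_tool_call("search_tickets", claims))
print(authorize_tool_call("close_ticket", claims))
print(authorize_tool_call("delete_all", claims))
```

Keeping the tool-to-scope mapping explicit means a newly added tool stays unusable until someone deliberately decides which scope should unlock it.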

Choosing (or combining) the right protocol

The best fit depends on your specific use case:

Use MCP when your primary need is for a single LLM to draw from internal data or tools to complete user tasks.

Use A2A when you’re focused on coordinating multiple AI agents to carry out more distributed or collaborative workflows.

But in most cases, the ideal approach is not either/or—it’s both. When used in combination, they provide a foundation for LLMs to both access the tools they need and collaborate with other agents across systems.
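
A minimal sketch of how the two layers fit together: A2A governs how one agent hands work to another, while MCP governs how the receiving agent reaches its own tools. Everything here is a local stand-in with hypothetical class, agent, and tool names and no network calls; it only shows where each protocol sits.

```python
from typing import Callable


class McpToolbox:
    """Stand-in for an MCP client session: calls tools by name."""

    def __init__(self, tools: dict[str, Callable[..., str]]):
        self._tools = tools

    def call_tool(self, name: str, **arguments) -> str:
        return self._tools[name](**arguments)


class RemoteAgent:
    """Agent reachable over A2A; uses MCP internally for its own tools."""

    def __init__(self, name: str, toolbox: McpToolbox):
        self.name = name
        self.toolbox = toolbox

    def handle_task(self, text: str) -> str:  # would sit behind an A2A endpoint
        data = self.toolbox.call_tool("lookup_inventory", sku=text)  # MCP layer
        return f"{self.name}: {data}"


class Orchestrator:
    """Initiating agent: delegates to peers and never touches their tools."""

    def __init__(self, peers: list[RemoteAgent]):
        self.peers = peers

    def run(self, text: str) -> list[str]:
        return [peer.handle_task(text) for peer in self.peers]  # A2A layer


warehouse = RemoteAgent(
    "warehouse-agent",
    McpToolbox({"lookup_inventory": lambda sku: f"{sku}: 42 in stock"}),
)
print(Orchestrator([warehouse]).run("SKU-001"))
```

The orchestrator holds no reference to the warehouse agent’s toolbox, mirroring how A2A lets agents collaborate without sharing direct access to the same resources.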

For optimal results, both protocols should be implemented alongside a secure authorization stack, including features like multi-factor authentication (MFA) and fine-grained access controls, to ensure safety without sacrificing flexibility. All of this can often be achieved with minimal code overhead.

MCP and A2A: The beginning of a new era

Together, MCP and A2A represent a shift toward a more modular, cooperative future for AI systems—one where agents can access the right data, coordinate effectively, and adapt to complex workflows with less overhead. Whether you're building smarter interfaces, streamlining internal tools, or experimenting with agent-based automation, these protocols offer a solid foundation.

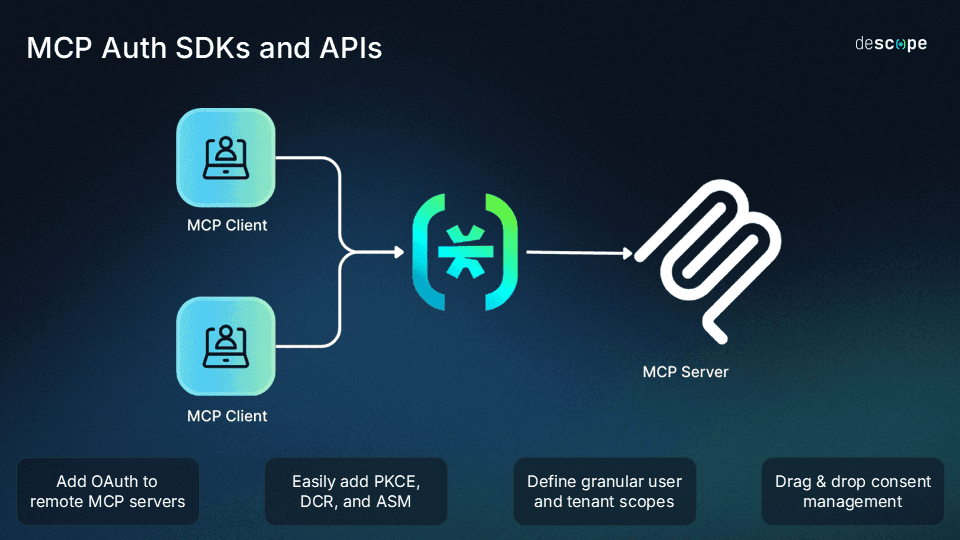

If you're looking to secure AI apps, agents, or chatbots, Descope can help. Using our drag & drop editor, you can add frictionless, secure authentication and access controls with built-in support for OAuth, SSO, SCIM, and fine-grained access control. Moreover, Descope Inbound Apps and MCP Auth SDKs help developers add OAuth to their APIs and remote MCP servers for secure, scoped, and consented access.

Sign up for a free Descope account to get started or book a demo with our team to learn more.