Cybercriminals are constantly looking for ways to bypass security deployments and gain access to sensitive accounts and information. In recent years, one of the most troubling developments on this front has been the rise in bot attacks.

Even the most well-defended systems and components are not immune to a bot attack. Relatively secure accounts with multi-factor authentication (MFA) in place of traditional password logins may also fall victim to a persistent threat perpetrated by malicious bots.

In this article, we’ll explain what a bot attack is, what kinds to look out for, and what steps you can take to detect and prevent them.

Main points

Constant, automated threat: Bots outnumber humans on the web, and malicious bots can operate with minimal oversight from cybercriminals to enable persistent, large-scale attacks.

Diverse attack methods: Automated bot attacks target a variety of different vectors, including credential stuffing, web scraping, account takeovers (brute force and malware), and DDoS attacks.

Widespread business impact: Bad bots have a huge initial impact, but the resulting loss of trust and customer attrition can compound the damage far beyond the attack itself.

What is a bot attack?

Bot attacks are cyber threats that use automated software to infiltrate systems, steal credentials, and manipulate digital services at scale. Unlike manual attacks that require regular (or constant) human oversight, bots can run continuously and target thousands of endpoints simultaneously, making them particularly effective against authentication gaps and unsecured user databases. While “good” bots are benign or helpful, like those employed by search engines, “bad” bots are specifically designed with ill intent.

The automation advantage of bot attacks fundamentally changes how cybercriminals operate. A single threat actor can deploy persistent campaigns that run 24/7 with minimal maintenance, coordinating strikes against login pages, APIs, and user management systems across multiple platforms and organizations.

With the advent of GenAI making malicious coding more accessible, many of today’s bad bots are far from simple scripts running on a loop. Some bot deployments have become particularly sophisticated and evasive. These utilize techniques like headless browsers, proxy networks (often partnered with botnets), and behavioral mimicry designed to evade automated detection.

Impacts of bot attacks

Although attackers are almost always after financial gains, their actions create cascading impacts that extend far beyond direct monetary theft. Bot attacks impact victim organizations across multiple operational dimensions:

User friction and operational strain

Bot attacks aimed at websites degrade performance for legitimate users, often to the point of complete downtime. High-volume bot traffic overloads servers, increases infrastructure costs, and floods customer support teams with complaints from frustrated users unable to access services. Organizations frequently need to scale up server capacity and support staff just to handle bot-based problems.

Financial loss and hidden costs

Financial losses can be catastrophic: according to a 2024 report from Imperva, companies lose up to $186 billion annually due to bot-driven attacks. Kasada’s 2024 Bot Mitigation Survey notes that 98% of organizations attacked by bots over the previous year lost revenue, with more than a third reporting losses exceeding 5% of their annual revenue. These direct costs compound when factoring in the operational expenses of fighting endless bot campaigns.

Distorted analytics and wasted marketing spend

Bot traffic, both malicious and benign, skews marketing and analytics data. Bots poison business intelligence by generating fake traffic, inflating conversion metrics, and distorting user behavior analytics. Marketing teams make misguided decisions based on bot-contaminated data, while sales teams waste time pursuing fake leads. In some cases, bot traffic can quickly churn through expensive paid marketing budgets.

Legal and compliance risks

Legal trouble and compliance risks are both consequences of data breaches, one of the primary goals of bot attacks. HIPAA, PCI DSS, GDPR, and other widely applicable frameworks stipulate severe fines for sensitive data breaches. Bot-driven incidents can carry additional scrutiny due to their widespread nature (and likelihood of exposing sensitive data en masse).

Reputation damage

Customer attrition and trust erosion can result from successful bot attacks, leading to losses of current and potential customers. Any customers impacted by a data breach may lose faith in the organization or software platforms they believe were compromised. Even non-breached users will think twice about entrusting their personal information or credentials with a service provider known to have bot security issues.

The stakes of a bot attack depend heavily on the kind of attack waged, the data targeted, and the parties impacted. But in many cases, they can be extremely severe. That’s why robust fraud prevention controls are essential.


How bot attacks work

The growing sophistication of modern bot attacks is characterized by several key components that make them harder to detect and, subsequently, more damaging than ever before. Today’s attackers employ a combination of tools and tactics that allow their bots to appear like legitimate users while operating at massive scale.

Botnets

Botnets leverage vast numbers of compromised endpoints, with some (like the 911 S5 Botnet) containing over 19 million infected devices. Botnets frequently partner with proxy networks that route malicious traffic through legitimate home and business internet connections, making the attacks appear to originate from trusted IP addresses. By masking their coordinated bot attacks behind seemingly innocuous sources, attackers can bypass IP reputation checks and avoid surface-level bot detection systems.

Headless browsers

Headless browsers are web browsers (e.g., Google Chrome in headless mode) that run without a graphical interface. These have become the weapon of choice for sophisticated bot operators that want their automation to appear human. While headless browsers were originally designed for legitimate automated testing, they can also be scripted to simulate human-like interactions such as mouse movements and clicks. Popular automation frameworks like Selenium, Puppeteer, and Playwright are increasingly seen in the wild driving large-scale attacks.

IP rotation

IP rotation involves rapidly switching between endpoints in proxy networks, allowing bots to avoid rate limiting and IP-based blocking. When these bots are flagged for suspicious behavior and encounter CAPTCHA challenges, they forward the challenges to what are known as “CAPTCHA farms.” These services employ human workers to solve challenges well within the timeout threshold. Fortunately, CAPTCHA technology has evolved to counter this: “invisible” reCAPTCHA (v2) and the score-based reCAPTCHA v3 rely on behavioral analysis rather than asking the user to identify images.

Human impersonation

Mimicking human behavior to bypass detection is a major element of modern bot attacks. As bots become more advanced and adept at impersonating real humans, security teams face the difficult task of identifying malicious traffic without adding friction for legitimate users. The most sophisticated bots can simulate human-like mouse movement, typing patterns, and browsing behaviors while varying their request timing to appear more “organic.”

Why bot attacks are growing in number and complexity

Bots accounted for more than half of internet traffic in 2024, with malicious bots making up 37% of all web activity, an increase of five percentage points over the previous year. But why are bot attacks increasing in sophistication and frequency?

Several converging factors have led to a hyper-acceleration of both bot attack volume and technical complexity:

The rise of generative AI

GenAI has greatly democratized malicious coding. It’s well-established that threat actors employ LLMs (Large Language Models) in the creation of malicious software, despite the AI systems’ creators attempting to set guardrails to prevent it. Where creating highly agile, evasive bots once required deep technical expertise, AI-powered coding assistants now help less technical “script kiddies” generate and refine attack scripts.

Botnets-as-a-Service boom

Botnets-as-a-Service ecosystems have matured. The commercialization of cybercrime is nothing new, but botnet services have recently hit an inflection point. There’s now a thriving underground economy where budding cybercriminals can rent access to massive botnets, deploying malicious code and coordinating attacks with minimal setup. For example, Trend Micro documented the monetization of threat actor group Water Barghest’s 20,000+ IoT device botnet, which they listed on a residential proxy marketplace.

Vulnerable APIs

API proliferation has expanded attack surfaces. Application security firm F5 estimates that companies contended with over 400 APIs in 2024, making the job of monitoring and securing all of them a taxing affair. Meanwhile, APIs often lack the same security controls as user-facing applications, creating easier entry points for automated attacks. Supply chain attacks often target APIs amongst vendors and third-party partners with the intent of breaching a connected target.

The combination of these factors has created a perfect storm where bot attacks are attractive to both seasoned cybercriminals and novices alike. The ready access to coding LLMs, botnets-as-a-service, and a massive attack surface filled with vulnerable APIs all look like a gold mine. This has led to bot attacks becoming more frequent and effective at bypassing conventional defenses, forcing organizations to rethink their approach to automated threat prevention.

Types of bot attacks

Bot attacks are not a separate category of cybercrime: most varieties of cyberattack can incorporate scripts. However, some attack vectors are particularly well suited to automation and algorithmically driven activity. Attacks that involve large volumes of similar actions, such as repeated requests or messages, are especially effective when they are bot-led.

Four particular vectors are often partially or wholly bot-led:

Credential stuffing

Scraping attacks

Account takeover

DoS and DDoS attacks

Credential stuffing

In credential stuffing attacks, attackers obtain usernames and passwords (from the dark web or from another breach) and then try those same credentials on other websites at high volume. Unlike classic brute force, which guesses passwords, credential stuffing replays credentials that are already known to have worked somewhere. Over the past few years, credential stuffing attacks have hit PayPal, DraftKings, and Norton LifeLock.

Passwords are easy for computers to guess but hard for users to remember. If users are asked to create strong passwords for every account (a good practice), chances are they will cycle between 2-3 passwords across all their accounts. This means that once a password is leaked, all their accounts that use the same password are at risk.

With the aid of bots and automation, attackers can perform high-volume credential stuffing attacks and put sites at risk well beyond the one where the data breach initially took place.
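One common countermeasure to password reuse is to reject passwords that already appear in known breach data. The sketch below illustrates the k-anonymity lookup format used by the Have I Been Pwned Pwned Passwords range API: only the first five hex characters of the password's SHA-1 hash are sent, and the response lists matching hash suffixes with counts. The function names are hypothetical, and the network call is deliberately left out; `range_body` stands in for the text returned by the range endpoint.

```python
import hashlib


def sha1_prefix_suffix(password: str) -> tuple[str, str]:
    """Split the uppercase SHA-1 hex digest into a 5-char prefix and 35-char suffix."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def breach_count(password: str, range_body: str) -> int:
    """Return how often the password appears in a range response.

    range_body is assumed to hold one "SUFFIX:COUNT" entry per line,
    as returned for the password's 5-character hash prefix.
    """
    _, suffix = sha1_prefix_suffix(password)
    for line in range_body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0  # suffix not listed: no known breach for this password
```

In practice, you would send only the prefix to the service, pass the response body to `breach_count`, and prompt the user to choose a different password when the count is above zero.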

Scraping attacks

Scraping is extracting information from a website or other digital location and compiling it, often for analysis. When it targets publicly available information, scraping is within the bounds of legality and standard business practice.

Scraping attacks, however, cross the line into extracting private information for nefarious ends.

Scraping attacks often involve bots seeking ways to extract data from websites on false pretenses. For example, cybercriminals may use bots to scrape data from private profiles on social media by implanting the bots within users’ following lists, bypassing restrictions.

A recent high-profile scraping incident impacted Facebook, leaking information from 533 million accounts, roughly 20% of the platform’s users. The attack was reported on and analyzed thoroughly in 2021, but the data in question had been scraped in 2019. The extent of this breach is unparalleled, encompassing personal information that has the potential to fuel subsequent attacks and enable unauthorized access to accounts, making it a commodity on the dark web.

Account takeover

Account takeover (ATO) attacks surged in 2024, according to threat detection firm Flare. The average annual growth rate of ATOs was 28%, with infostealer malware proliferated by bots leading the charge. Once bots gain unauthorized access to user accounts (often through methods like credential stuffing), they quickly pivot to monetization strategies that directly impact business outcomes.

Once cybercriminals have gained the ability to access an account illegitimately, they may use bots further to infiltrate and exploit it. There, they can steal or otherwise corrupt sensitive data, engage in fraud, or access other closely linked accounts. Compromised accounts become platforms to launch various malicious activities, leveraging the trust and privileges associated with legitimate user profiles:

Payment fraud and carding: Breached accounts quickly become test beds for stolen credit card data or making fraudulent purchases. Cybercriminals prefer established accounts because they bypass many fraud detection systems that flag new account activity or unusual payment patterns. Bots allow these compromised accounts to be employed at scale, testing out countless fraudulently obtained credit card numbers until one works on an existing profile.

Inventory manipulation and hoarding: Bots use hijacked accounts to engage in scalping (rapidly purchasing limited inventory like concert tickets or product drops) and inventory hoarding (filling shopping carts without completing purchases to prevent legitimate customers from buying). Established accounts with purchase history are less likely to trigger anti-bot measures during high-demand periods.

Account farming and authenticity laundering: After taking over legitimate accounts, bots often spend time making themselves appear more authentic by adding connections, posting content, or building engagement history. These “seasoned” accounts are then used for fake reviews, social media manipulation, or sold on underground markets for higher prices than newly created accounts.


DoS and DDoS attacks

Denial of service (DoS) attacks aim to jam up organizations’ systems with an influx of traffic. An individual or bot will send requests to a given server repeatedly until it causes traffic to slow to a halt. The goal is to interfere with business operations or take security features offline to render the organization vulnerable to additional attacks.

In a distributed denial of service (DDoS) attack, the same tactic is amplified with requests from numerous initiators. These attacks are not always bot-driven, but they are easy to automate. A lone attacker or group of attackers can write a single script, create duplicates and variations, and send near-infinite requests at relatively modest compute requirements.

These attacks were among the fastest-growing across every industry in 2023. By some estimates, DDoS attacks were up 200% in the first half of 2023, specifically because of automation (i.e., bots).

How to detect and prevent bot attacks

Effective bot detection requires moving beyond basic traffic monitoring to implement specific, actionable detection methods that can identify automated behavior in real time. Modern bot detection combines multiple signals and risk indicators to build a comprehensive view of user authenticity.

Device fingerprinting and behavioral analysis

Implement device fingerprinting to collect signals from the device and browser that help distinguish legitimate users from automated scripts. Key indicators include inconsistent browser properties, missing JavaScript capabilities, headless browser signatures, and unnatural interaction patterns like perfect mouse movements or impossibly fast form completion.
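As a rough illustration, the indicators above can be expressed as simple server-side checks on signals collected from the client. This is a minimal sketch, not a production fingerprinting system: the `ClientSignals` fields, the reason labels, and the two-second threshold are all assumptions made for the example. The `HeadlessChrome` user-agent token and the `navigator.webdriver` flag are real signals, but sophisticated bots can spoof both.

```python
from dataclasses import dataclass


@dataclass
class ClientSignals:
    # Hypothetical signal set; real fingerprinting collects many more.
    user_agent: str
    webdriver_flag: bool      # value of navigator.webdriver reported by the page
    javascript_ran: bool      # whether the client executed the page's script
    form_fill_seconds: float  # time from form render to submit


def automation_indicators(s: ClientSignals, min_fill_seconds: float = 2.0) -> list[str]:
    """Return the list of reasons this client looks automated (empty if none)."""
    reasons = []
    if "HeadlessChrome" in s.user_agent:
        reasons.append("headless_user_agent")
    if s.webdriver_flag:
        reasons.append("webdriver_flag_set")
    if not s.javascript_ran:
        reasons.append("no_javascript")
    if s.form_fill_seconds < min_fill_seconds:
        reasons.append("form_filled_too_fast")
    return reasons
```

Returning reasons rather than a single yes/no makes it easier to feed these signals into a broader risk score instead of blocking on any one of them.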

Risk-based authentication scoring

Deploy real-time risk scoring that analyzes multiple factors at once. These include device reputation, geolocation data, and impossible travel detection. Risk scoring is an essential component of fraud prevention systems, which can enable organizations to set risk thresholds that trigger additional validation (adaptive authentication) only when suspicious activity is detected. This maintains a strong user experience while blocking high-risk login attempts.
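To make the idea concrete, here is a small sketch of risk scoring with impossible travel detection. It assumes you know the approximate coordinates and time of the previous and current logins. The weights, thresholds, and the 900 km/h speed limit (roughly airliner speed) are illustrative assumptions, not recommended values, and all names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Login:
    lat: float
    lon: float
    timestamp: float  # seconds since the Unix epoch


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def impossible_travel(prev: Login, cur: Login, max_kmh: float = 900.0) -> bool:
    """True if covering the distance between logins would exceed max_kmh."""
    hours = max((cur.timestamp - prev.timestamp) / 3600.0, 1e-6)
    return haversine_km(prev.lat, prev.lon, cur.lat, cur.lon) / hours > max_kmh


def risk_score(bad_device: bool, travel: bool, new_country: bool) -> float:
    """Weighted sum of signals, capped at 1.0 (bools count as 0 or 1)."""
    return min(0.5 * bad_device + 0.4 * travel + 0.2 * new_country, 1.0)


def decide(score: float, step_up_at: float = 0.3, block_at: float = 0.8) -> str:
    """Map a score to an action: allow, step_up (extra verification), or block."""
    if score >= block_at:
        return "block"
    if score >= step_up_at:
        return "step_up"
    return "allow"
```

The `step_up` outcome is where adaptive authentication comes in: only logins in the middle band are asked for an additional factor, while low-risk users pass through untouched.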

Network-level bot detection

Monitor for bot-specific network patterns such as rapid-fire requests from the same IP, requests that bypass normal user flows, or traffic originating from known bot infrastructure like proxy networks. Implement strict rate limiting and analyze request timing patterns. Non-human entities usually look robotic and programmatic in their behavior. For example, bots often exhibit consistent intervals between actions that humans rarely maintain.
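Both ideas in this section, rate limiting and timing analysis, can be sketched in a few lines. The example below is a simplified in-memory version under stated assumptions: the class and function names are hypothetical, the limits are arbitrary, and a real deployment would typically keep counters in a shared store and key on more than the IP address, since bots rotate IPs. Timing regularity is measured with the coefficient of variation (standard deviation divided by mean) of the gaps between requests.

```python
import statistics
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within a rolling time window."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client_id -> timestamps of allowed requests

    def allow(self, client_id: str, now: float) -> bool:
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def looks_machine_timed(timestamps: list[float], max_cv: float = 0.1,
                        min_samples: int = 5) -> bool:
    """True if the gaps between requests are suspiciously uniform."""
    if len(timestamps) < min_samples:
        return False  # too little data to judge
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    mean = statistics.fmean(gaps)
    if mean <= 0:
        return False
    return statistics.pstdev(gaps) / mean <= max_cv
```

Because sophisticated bots add jitter to their request timing, a regularity check like this works best as one signal among several rather than as a standalone verdict.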

Passwordless authentication

Passkeys, one-time passwords (OTP), time-based OTP, and other login methods that forgo traditional passwords can prevent bot traffic originating from stolen or cracked credentials, all while improving UX and customer satisfaction. These login methods are also resilient against bad bots because they rely on non-knowledge factors, like possession and inherence.
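To show why a time-based OTP is hard to replay, here is a compact sketch of the TOTP algorithm as specified in RFC 6238, built on HOTP from RFC 4226 and using only the Python standard library. The code changes every 30 seconds and is derived from a shared secret, so a leaked password list gives a bot nothing to stuff. The `verify_totp` helper and its one-step drift window are assumptions for illustration; production systems should also rate-limit attempts and reject codes that have already been used.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step counter."""
    return hotp(secret, int(unix_time // step), digits)


def verify_totp(secret: bytes, submitted: str, unix_time: float,
                step: int = 30, drift_steps: int = 1) -> bool:
    """Accept codes from the current step and drift_steps on either side."""
    counter = int(unix_time // step)
    for delta in range(-drift_steps, drift_steps + 1):
        if counter + delta < 0:
            continue
        expected = hotp(secret, counter + delta)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

With the RFC test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` yields `94287082`, which matches the published RFC 6238 test vector.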

Specialized bot protection integrations

Leverage third-party detection and prevention services that provide granular risk controls optimized for different attack vectors. Tools like reCAPTCHA Enterprise, Fingerprint, and phone intelligence services like Telesign can be integrated into authentication flows to enable deeper detection capabilities.

The key to effective bot defenses lies in layering multiple detection methods and mitigation policies based on the specific risks your organization faces. By combining these approaches within a flexible authentication system, security teams can build a more resilient auth stack that evolves to counter increasingly complex bot-based threats.

Stop identity-driven bot attacks with Descope

The prevalence and sophistication of bot attacks make them a clear and present danger to businesses and individuals alike. Descope can help.

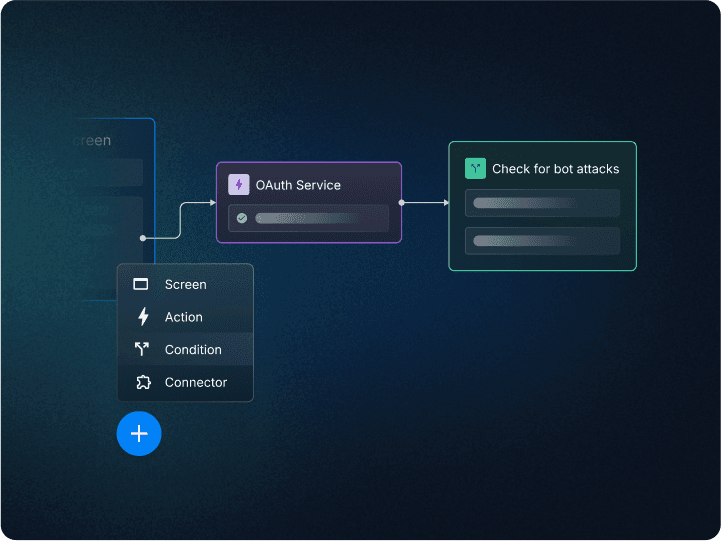

Descope helps developers and IT teams easily add authentication, authorization, and identity management to their apps using no-code workflows. In these workflows, you can add conditional steps to check if the login attempt is originating from a bot and block it if so.

Moreover, Descope offers third-party connectors with services such as Google reCAPTCHA Enterprise, Traceable, and Have I Been Pwned to ingest granular risk scores into your user journey and create branching paths for bots and real users.

Sign up for a Free Forever Descope account and begin your bot protection journey today. Have questions about our platform? Book time with our auth experts to learn more.